Qwen3

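Qwen3 is the latest open-source large language model series from the Qwen team at Alibaba Group. As the newest member of the Qwen family, it is highly competitive with top-tier models such as DeepSeek-R1, o1, o3-mini, and Grok-3 on benchmarks covering coding, mathematics, and general capabilities.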

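Qwen3 supports seamless switching between thinking mode (for complex logical reasoning, mathematics, and coding) and non-thinking mode (for efficient general-purpose dialogue), so that it performs well across a wide range of scenarios. See README.md for more features.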
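In thinking mode the model writes its reasoning between `<think>` and `</think>` tags before the final answer, as the sample output later on this page shows. The following is a minimal sketch of how an application might separate the two parts; the function name `split_thinking` is hypothetical and is not part of Qwen3 or rkllm.

```python
import re

# Matches the first <think>...</think> span; DOTALL lets "." cross newlines.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw model response.

    If no complete <think>...</think> block is found, the reasoning is
    empty and the whole text is treated as the answer.
    """
    match = _THINK_RE.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Everything outside the matched block is kept as the answer.
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer
```

Calling `split_thinking(response)` on the text printed after `robot:` would give the reasoning and the user-facing answer as two separate strings, so that a UI can hide or collapse the reasoning.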

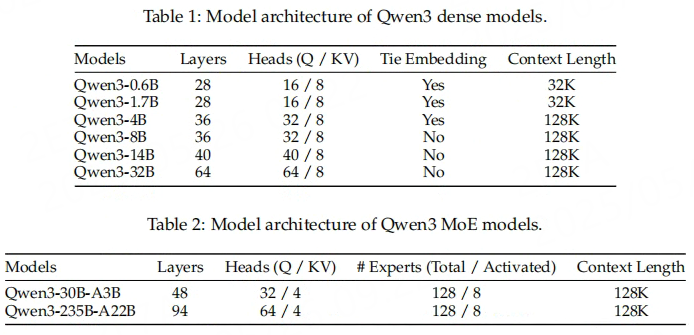

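Qwen3 is available as a range of dense and mixture-of-experts (MoE) models: Qwen3-235B-A22B, Qwen3-30B-A3B, Qwen3-32B, Qwen3-14B, Qwen3-8B, Qwen3-4B, Qwen3-1.7B, and Qwen3-0.6B. The model weights can be downloaded from platforms such as Hugging Face or ModelScope.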

The rest of this page uses rkllm to deploy and run Qwen3 (0.6B and 1.7B) locally.

Basic usage of Qwen3

Model conversion

Get the Qwen3 model files (0.6B and 1.7B):

# Install git-lfs if it is not already present

# sudo apt update && sudo apt install git-lfs

git lfs install

# Get Qwen3-0.6B

git clone https://huggingface.co/Qwen/Qwen3-0.6B

# Or clone from the mirror site (optional)

git clone https://hf-mirror.com/Qwen/Qwen3-0.6B

# Get Qwen3-1.7B

git clone https://huggingface.co/Qwen/Qwen3-1.7B

# Or clone from the mirror site (optional)

git clone https://hf-mirror.com/Qwen/Qwen3-1.7B
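If git-lfs was not active during the clone, the weight files arrive as small text pointer stubs rather than the real tensors, and the conversion step will fail later. A quick check along these lines may help. It is a sketch that assumes the weights are stored as `*.safetensors` files in the top level of the cloned directory, and the helper names are hypothetical.

```python
from pathlib import Path

# Git LFS pointer files are tiny text files that begin with this line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path: Path) -> bool:
    """Return True if the file looks like a Git LFS pointer stub."""
    with path.open("rb") as fh:
        return fh.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX


def find_unfetched_weights(model_dir: str, pattern: str = "*.safetensors") -> list[str]:
    """List weight files in model_dir that are still LFS pointers."""
    return sorted(
        p.name
        for p in Path(model_dir).glob(pattern)
        if p.is_file() and is_lfs_pointer(p)
    )
```

An empty list from `find_unfetched_weights("Qwen3-1.7B")` suggests the weights were downloaded in full; any names returned can usually be fetched by running `git lfs pull` inside the repository.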

Use export_rkllm.py to export the rkllm model; remember to update the model path first.

# Set the model path in export_rkllm.py to the Qwen3-1.7B directory cloned earlier

modelpath = '/path/to/work/Qwen3-1.7B'
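Before starting a conversion that takes a while, it can be worth confirming that `modelpath` really points at a checkpoint directory. The sketch below also derives an output file name in the same pattern as the one in the export log on this page (`Qwen3-1.7B_W8A8_RK3588.rkllm`). Both helpers are hypothetical illustrations, not functions from export_rkllm.py or rkllm-toolkit, and the default `w8a8` / `rk3588` values are assumptions read off that file name.

```python
import os


def check_model_dir(modelpath: str) -> None:
    """Raise early if modelpath does not look like a Hugging Face checkpoint."""
    if not os.path.isdir(modelpath):
        raise FileNotFoundError(f"model directory not found: {modelpath}")
    if not os.path.isfile(os.path.join(modelpath, "config.json")):
        raise FileNotFoundError(f"config.json missing in: {modelpath}")


def rkllm_output_name(modelpath: str,
                      quantized_dtype: str = "w8a8",
                      target_platform: str = "rk3588") -> str:
    """Build an output name such as Qwen3-1.7B_W8A8_RK3588.rkllm."""
    # normpath drops a trailing slash so basename returns the directory name.
    base = os.path.basename(os.path.normpath(modelpath))
    return f"{base}_{quantized_dtype.upper()}_{target_platform.upper()}.rkllm"
```

For example, `rkllm_output_name('/path/to/work/Qwen3-1.7B')` returns `Qwen3-1.7B_W8A8_RK3588.rkllm`.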

# Tested for LubanCat-4

(rlllm1.2.1b1) llh@llh:/xxx//export$ python export_rkllm.py

INFO: rkllm-toolkit version: 1.2.1b1

Loading checkpoint shards: 100%|████████████████████████████████████████████████████████████████████| 2/2 [00:01<00:00, 1.11it/s]

Building model: 100%|███████████████████████████████████████████████████████████████████████████████| 427/427 [00:32<00:00, 13.04it/s]

Optimizing model: 100%|████████████████████████████████████████████████████████████████████| 28/28 [00:15<00:00, 1.82it/s]

INFO: Setting chat_template to "<|im_start|>user\n[content]<|im_end|>\n<|im_start|>assistant\n"

INFO: Setting token_id of eos to 151645

INFO: Setting token_id of pad to 151643

INFO: Setting token_id of bos to 151643

INFO: Setting add_bos_token to False

Converting model: 100%|██████████████████████████████████████████████████████████████████████████| 311/311 [00:00<00:00, 2645899.68it/s]

INFO: Setting max_context_limit to 4096

INFO: Exporting the model, please wait ....

[=================================================>] 597/597 (100%)

INFO: Model has been saved to ./Qwen3-1.7B_W8A8_RK3588.rkllm!

The export produces the model file Qwen3-1.7B_W8A8_RK3588.rkllm.
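The export log reports the chat template as `<|im_start|>user\n[content]<|im_end|>\n<|im_start|>assistant\n`, and the runtime log later on this page shows the same string split into a prompt prefix and postfix. The sketch below illustrates how such a template wraps a user message. It assumes the `\n` sequences in the log stand for real newlines and that `[content]` is the placeholder for the user text; the function names are hypothetical.

```python
# Template string as printed in the export log, with "\n" read as a newline.
CHAT_TEMPLATE = "<|im_start|>user\n[content]<|im_end|>\n<|im_start|>assistant\n"


def split_template(template: str = CHAT_TEMPLATE,
                   placeholder: str = "[content]") -> tuple[str, str]:
    """Split a template into (prefix, postfix) around the placeholder."""
    prefix, sep, postfix = template.partition(placeholder)
    if not sep:
        raise ValueError(f"placeholder {placeholder!r} not found in template")
    return prefix, postfix


def render_prompt(user_text: str, template: str = CHAT_TEMPLATE) -> str:
    """Wrap user_text in the chat template."""
    prefix, postfix = split_template(template)
    return prefix + user_text + postfix
```

With the default template, `split_template()` yields `<|im_start|>user\n` and `<|im_end|>\n<|im_start|>assistant\n`, which match the `prompt_prefix` and `prompt_postfix` values printed when the model is loaded on the board.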

Deployment test

Get the test demo:

# Refer to the other rkllm examples

# git clone xxx

Build the test demo:

cat@lubancat:~/xxx$ ./build-linux.sh

-- Configuring done

-- Generating done

-- Build files have been written to: /home/cat/xxx/build/build_linux_aarch64_Release

Scanning dependencies of target llm_demo

[ 33%] Building CXX object CMakeFiles/llm_demo.dir/src/test_demo.cpp.o

[ 66%] Linking CXX executable llm_demo

[100%] Built target llm_demo

[100%] Built target llm_demo

Linking CXX executable CMakeFiles/CMakeRelink.dir/llm_demo

Install the project...

-- Install configuration: "Release"

-- Installing: /home/cat/xxx/install/demo_Linux_aarch64/./llm_demo

-- Installing: /home/cat/xxx/demo_Linux_aarch64/lib/librkllmrt.so

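Copy the Qwen3-1.7B_W8A8_RK3588.rkllm file converted earlier to the board, then run the following commands:

# Change to the install/demo_Linux_aarch64 directory (the converted model must already be on the board)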

cat@lubancat:~/.../install/demo_Linux_aarch64$ export LD_LIBRARY_PATH=./lib

# Show performance statistics

cat@lubancat:~/.../install/demo_Linux_aarch64$ export RKLLM_LOG_LEVEL=1

cat@lubancat:~/install/demo_Linux_aarch64$ ./llm_demo ~/Qwen3-1.7B_W8A8_RK3588.rkllm 1024 1024

rkllm init start

I rkllm: rkllm-runtime version: 1.2.1b1, rknpu driver version: 0.9.7, platform: RK3588

I rkllm: loading rkllm model from /home/cat/Qwen3-1.7B_W8A8_RK3588.rkllm

I rkllm: rkllm-toolkit version: 1.2.1b1, max_context_limit: 4096, npu_core_num: 3, target_platform: RK3588

I rkllm: Enabled cpus: [4, 5, 6, 7]

I rkllm: Enabled cpus num: 4

I rkllm: system_prompt:

I rkllm: prompt_prefix: <|im_start|>user\n

I rkllm: prompt_postfix: <|im_end|>\n<|im_start|>assistant\n

rkllm init success

user: Give me a short introduction to large language model.

robot: <think>

Okay, the user wants a short introduction to large language models. Let me start by defining what they are.

Large language models (LLMs) are AI systems trained on vast amounts of text data. They can understand and generate human-like text.

I should mention their key features: ability to handle various tasks like writing, coding, or answering questions.

Also, the training process involves deep learning techniques, maybe neural networks.

It's important to highlight their applications in different fields.

Wait, the user might be a student or someone new to AI. I need to keep it simple and not too technical.

Avoid jargon unless necessary. Maybe mention that they're used in chatbots, content creation, and more.

Also, note that they can adapt and learn over time with more data.

I should check if there's anything else the user might want. They asked for a short intro, so keep it concise but informative.

Make sure to explain why LLMs are significant in today's tech landscape. Maybe touch on their impact on industries and everyday use.

</think>

Large language models (LLMs) are advanced AI systems designed to understand, generate, and interact with human-like text.

Trained on vast amounts of data, they can perform tasks such as writing essays, coding, answering questions,

or generating creative content. LLMs rely on deep learning techniques, like neural networks,

to learn patterns in language and produce coherent responses. They are widely used in applications like chatbots,

content creation, and personalized recommendations, revolutionizing how humans interact with technology.

I rkllm: --------------------------------------------------------------------------------------

I rkllm: Stage Total Time (ms) Tokens Time per Token (ms) Tokens per Second

I rkllm: --------------------------------------------------------------------------------------

I rkllm: Init 1617.32 / / /

I rkllm: Prefill 221.62 18 12.31 81.22

I rkllm: Generate 39269.39 314 125.06 8.00

I rkllm: --------------------------------------------------------------------------------------

I rkllm: Memory Usage (GB)

I rkllm: 1.87

I rkllm: --------------------------------------------------------------------------------------
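The statistics table printed when `RKLLM_LOG_LEVEL=1` is set can be checked by hand: tokens per second is the token count divided by the total time in seconds. The sketch below parses the `Prefill` and `Generate` rows and recomputes that rate. The row layout is assumed from the log shown above, and the function names are hypothetical.

```python
import re

# Matches rows such as "I rkllm: Prefill 221.62 18 12.31 81.22".
_ROW_RE = re.compile(
    r"^I rkllm:\s+(?P<stage>Prefill|Generate)\s+"
    r"(?P<total_ms>[\d.]+)\s+(?P<tokens>\d+)\s+"
    r"(?P<ms_per_token>[\d.]+)\s+(?P<tokens_per_s>[\d.]+)\s*$"
)


def parse_stats(log_text: str) -> dict[str, dict[str, float]]:
    """Extract the Prefill and Generate rows from an rkllm log."""
    stats = {}
    for line in log_text.splitlines():
        m = _ROW_RE.match(line.strip())
        if m:
            stats[m["stage"]] = {
                "total_ms": float(m["total_ms"]),
                "tokens": int(m["tokens"]),
                "ms_per_token": float(m["ms_per_token"]),
                "tokens_per_s": float(m["tokens_per_s"]),
            }
    return stats


def tokens_per_second(total_ms: float, tokens: int) -> float:
    """Recompute throughput from total time (ms) and token count."""
    return tokens / (total_ms / 1000.0)
```

For the run above, 314 generated tokens in 39269.39 ms works out to roughly 8 tokens per second, and 18 prefill tokens in 221.62 ms to roughly 81 tokens per second, which agrees with the table.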