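4. Introduction to RKNN Toolkit Lite2

RKNN Toolkit Lite2 is the Python programming interface for the Rockchip NPU platform. It is used to deploy RKNN models on the board.

This chapter briefly introduces the Toolkit Lite2 workflow and shows examples of deploying models on the board.

Important

Test environment: LubanCat RK-series board images running Debian 10/11. At the time of writing, RKNN-Toolkit2 was version 1.5.0, the NPU driver on LubanCat-0/1/2 boards was 0.8.2, and the NPU driver on the LubanCat-4 board was 0.8.8.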

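4.1. Installing Toolkit Lite2

Toolkit Lite2 is intended for deploying models on the board. For more information on dependencies and usage, see Rockchip_RKNPU_User_Guide_RKNN_SDK.

On the board, RKNN Toolkit Lite2 can be obtained directly from the official GitHub repository or from the tutorial's companion examples: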

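# Get the installation files from the tutorial's companion examples (or get the latest version from the official GitHub)
git clone https://gitee.com/LubanCat/lubancat_ai_manual_code.git
cd lubancat_ai_manual_code/dev_env/rknn_toolkit_lite2/

# For the latest version, go to https://github.com/airockchip/rknn-toolkit2/tree/master/rknn-toolkit-lite2
# and switch to the rknn-toolkit-lite2 directory

# Recent versions can also be installed with pip
pip3 install rknn-toolkit-lite2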

If you use the tutorial's companion examples, the directory structure is as follows:

.
├── docs
│   ├── change_log.txt
│   ├── Rockchip_User_Guide_RKNN_Toolkit_Lite2_V1.5.0_CN.pdf
│   └── Rockchip_User_Guide_RKNN_Toolkit_Lite2_V1.5.0_EN.pdf
├── examples
│   ├── inference_with_lite
│   │   ├── resnet18_for_rk3562.rknn
│   │   ├── resnet18_for_rk3566_rk3568.rknn
│   │   ├── resnet18_for_rk3588.rknn
│   │   ├── space_shuttle_224.jpg
│   │   └── test.py
│   └── yolov5_inference
│       ├── bus.jpg
│       ├── test.py
│       ├── yolov5s_for_rk3562.rknn
│       ├── yolov5s_for_rk3566_rk3568.rknn
│       └── yolov5s_for_rk3588.rknn
└── packages
    ├── rknn_toolkit_lite2-1.5.0-cp310-cp310-linux_aarch64.whl
    ├── rknn_toolkit_lite2-1.5.0-cp37-cp37m-linux_aarch64.whl
    ├── rknn_toolkit_lite2-1.5.0-cp38-cp38-linux_aarch64.whl
    ├── rknn_toolkit_lite2-1.5.0-cp39-cp39-linux_aarch64.whl
    └── rknn_toolkit_lite2_1.5.0_packages.md5sum

5 directories, 18 files

Install the environment (using LubanCat 2 with Debian 10 as an example):

# Install the Python tools and the related dependencies and packages
sudo apt update
sudo apt-get install python3-dev python3-pip gcc
sudo apt install -y python3-opencv python3-numpy python3-setuptools

Install Toolkit Lite2:

# Enter the rknn_toolkit_lite2 directory
cd rknn_toolkit_lite2/
# Install the whl file that matches the Python version of the board's system
# For example, Debian 10 ARM64 with Python 3.7.3
pip3 install packages/rknn_toolkit_lite2-1.5.0-cp37-cp37m-linux_aarch64.whl
# For example, Debian 11 with Python 3.9
pip3 install packages/rknn_toolkit_lite2-1.5.0-cp39-cp39-linux_aarch64.whl
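Which wheel to install depends only on the board's CPython version. As a minimal sketch, assuming the naming pattern visible in the packages/ listing above (`cp37-cp37m` for Python 3.7, `cpXY-cpXY` for 3.8 and later), the filename can be derived from the running interpreter. `lite2_wheel_name` is a hypothetical helper, not part of the toolkit.

```python
import sys


def lite2_wheel_name(version="1.5.0", py_version=None, platform_tag="linux_aarch64"):
    """Build the Toolkit Lite2 wheel filename for a given CPython version.

    Assumed naming pattern (taken from the packages/ listing):
    rknn_toolkit_lite2-<version>-cpXY-<abi>-<platform>.whl
    """
    if py_version is None:
        py_version = tuple(sys.version_info[:2])
    major, minor = py_version
    tag = f"cp{major}{minor}"
    # CPython 3.7 and earlier carry the "m" ABI flag (cp37m); 3.8+ dropped it
    abi = tag + "m" if (major, minor) <= (3, 7) else tag
    return f"rknn_toolkit_lite2-{version}-{tag}-{abi}-{platform_tag}.whl"


if __name__ == "__main__":
    # Print the path to pass to pip3 install for the current interpreter
    print("packages/" + lite2_wheel_name())
```

Run with the board's python3, it prints the path to pass to `pip3 install`. It only builds a name; it does not check that the file exists in packages/.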

If the installation succeeded, RKNNLite can be imported without errors:

# Example on Debian 11 with Python 3.9:
cat@lubancat:~/rknn-toolkit2/rknn_toolkit_lite2$ python3
Python 3.9.2 (default, Feb 28 2021, 17:03:44)
[GCC 10.2.1 20210110] on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> from rknnlite.api import RKNNLite
>>>
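To check in one step that the toolkit and the apt-installed dependencies are all importable, a short script can ask Python's import system whether each module can be located. This is a sketch: `check_modules` is a hypothetical helper, and it assumes `cv2` and `numpy` come from the python3-opencv and python3-numpy packages installed above.

```python
import importlib.util


def check_modules(names=("rknnlite", "numpy", "cv2")):
    """Return {module_name: bool} telling whether each module can be found."""
    result = {}
    for name in names:
        # find_spec locates a top-level module without importing it
        result[name] = importlib.util.find_spec(name) is not None
    return result


if __name__ == "__main__":
    for name, ok in check_modules().items():
        print(f"{name}: {'OK' if ok else 'missing'}")
```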

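4.2. Using the Toolkit Lite2 API

Toolkit Lite2 is mainly used to deploy RKNN models on the board; models from other frameworks are converted to RKNN models with RKNN Toolkit2. Deploying an RKNN model with Toolkit Lite2 takes the following steps: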

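1. Create an RKNNLite object.
2. Call load_rknn to load the RKNN model. The model must be built for the matching platform (rk356x/rk3588).
3. Call init_runtime to initialize the runtime environment.
4. Call inference to run inference on the input data. It returns the inference results, which are then post-processed.
5. When inference is finished, call release to release the RKNNLite object.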

For detailed API documentation, see the user guide in the rknn_toolkit_lite2/docs directory of the RKNN-Toolkit2 project.
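The five steps can be sketched as one function. This is a minimal sketch, not the official example: `run_rknn_lite` is a hypothetical helper, the RKNNLite object (step 1) is passed in so the control flow can be exercised without an NPU, and the return-code checks assume 0 means success, as in the Toolkit Lite2 examples. Only load_rknn, init_runtime, inference and release are called, with default runtime options.

```python
def run_rknn_lite(rknn_lite, model_path, inputs):
    """Steps 2-5 of the Toolkit Lite2 workflow on an RKNNLite-like object.

    rknn_lite:  object created with RKNNLite() (step 1)
    model_path: .rknn file built for the current platform
    inputs:     list of preprocessed input arrays
    """
    try:
        # Step 2: load the model for this platform (rk356x / rk3588)
        if rknn_lite.load_rknn(model_path) != 0:
            raise RuntimeError(f"load_rknn failed: {model_path}")
        # Step 3: initialize the runtime environment
        if rknn_lite.init_runtime() != 0:
            raise RuntimeError("init_runtime failed")
        # Step 4: run inference; the outputs still need post-processing
        return rknn_lite.inference(inputs=inputs)
    finally:
        # Step 5: release the RKNNLite object, even if a step above failed
        rknn_lite.release()
```

On the board, pass `RKNNLite()` (imported with `from rknnlite.api import RKNNLite`), a model path such as `resnet18_for_rk3588.rknn`, and a list of preprocessed inputs. Putting release in `finally` is a design choice so that runtime resources are freed on every path.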

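4.3. On-board inference test

On-board inference calls librknnrt.so, the board-side runtime library. The default board image already includes librknnrt.so in /usr/lib; to update it, get the library from the rknpu2 files.

You can also download the Toolkit2 project files from the cloud drive (extraction code: hslu) and get the library from the rknpu2 directory:

In the rknpu2 project, librknnrt.so is located under runtime/. For Linux systems, use runtime/Linux/librknn_api/aarch64/librknnrt.so and copy it to /usr/lib/ on the board.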
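Before running the demos, it can help to confirm that the runtime library is where the text above expects it. A minimal sketch, assuming the library lives in /usr/lib as described; `find_librknnrt` is a hypothetical helper, and any additional search directories you pass are your own choice.

```python
import os


def find_librknnrt(search_dirs=("/usr/lib",)):
    """Return the path of the first librknnrt.so found in search_dirs, else None."""
    for directory in search_dirs:
        candidate = os.path.join(directory, "librknnrt.so")
        if os.path.isfile(candidate):
            return candidate
    return None


if __name__ == "__main__":
    path = find_librknnrt()
    print(path if path else "librknnrt.so not found in /usr/lib")
```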

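Important

RKNN-Toolkit2 and the RKNPU2 runtime library may be incompatible when their version numbers differ, which can cause errors such as Invalid RKNN model version 6. Use the same version for both.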
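Both versions appear in the log printed during init_runtime: `librknnrt version` for the runtime and `toolkit version` for the tool that converted the model (see the output in 4.3.1). As a sketch, assuming that log has been saved as text (for example with `python3 test.py 2>&1 | tee run.log`), the two can be extracted and compared. The regular expressions are written against the log lines shown in this chapter and may need adjusting for other releases; build suffixes such as `+1fa95b5c` are ignored.

```python
import re

# Patterns written against the init_runtime log lines shown in this chapter
RUNTIME_RE = re.compile(r"librknnrt version:\s*(\d+(?:\.\d+)*)")
TOOLKIT_RE = re.compile(r"toolkit version:\s*(\d+(?:\.\d+)*)")


def parse_rknn_versions(log_text):
    """Return (runtime_version, toolkit_version); None where not found."""
    runtime = RUNTIME_RE.search(log_text)
    toolkit = TOOLKIT_RE.search(log_text)
    return (runtime.group(1) if runtime else None,
            toolkit.group(1) if toolkit else None)


def versions_match(log_text):
    """True if the runtime and the model's toolkit report the same x.y.z version."""
    runtime, toolkit = parse_rknn_versions(log_text)
    return runtime is not None and runtime == toolkit
```

Usage: `versions_match(open("run.log").read())`, where run.log is the hypothetical file captured with the command above.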

4.3.1. resnet18 inference test

To run the RKNN Toolkit Lite2 demo, enter the Toolkit Lite2 directory you obtained and run the following commands in its examples/inference_with_lite directory:

# Get the code from the companion examples, or from the official GitHub
git clone https://gitee.com/LubanCat/lubancat_ai_manual_code.git
# git clone https://github.com/rockchip-linux/rknn-toolkit2.git
# Switch to the rknn_toolkit_lite2/examples/inference_with_lite directory
cd lubancat_ai_manual_code/dev_env/rknn_toolkit_lite2/examples/inference_with_lite

# Run inference (the output below was captured on a LubanCat-4 board)
cat@lubancat:~/rknn_toolkit_lite2/examples/inference_with_lite$ python3 test.py
--> Load RKNN model
done
--> Init runtime environment
I RKNN: [16:54:26.705] RKNN Runtime Information: librknnrt version: 1.5.0 (e6fe0c678@2023-05-25T08:09:20)
I RKNN: [16:54:26.707] RKNN Driver Information: version: 0.8.8
I RKNN: [16:54:26.711] RKNN Model Information: version: 4, toolkit version: 1.5.0+1fa95b5c(compiler version: 1.5.0 (e6fe0c678@2023-05-25T16:15:03)), \
target: RKNPU v2, target platform: rk3588, framework name: PyTorch, framework layout: NCHW, model inference type: static_shape
done
--> Running model
resnet18
-----TOP 5-----
[812]: 0.9996760487556458
[404]: 0.00024927023332566023
[657]: 1.449744013370946e-05
[466 833]: 9.023910024552606e-06
[466 833]: 9.023910024552606e-06
done

The test script selects the RKNN model that matches the board's platform (the run above was on a LubanCat-4 board), runs inference, and prints the results.
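The per-board model selection can be sketched as follows. Assumption: the SoC is identified from the device-tree `compatible` string in /proc/device-tree/compatible, a common approach on Rockchip boards; `read_compatible` and `select_model` are hypothetical helpers that map the SoC to the filenames in the examples directory. An RK3588S board such as the LubanCat-4 reports a string containing `rk3588`, so the substring test covers it.

```python
def read_compatible(path="/proc/device-tree/compatible"):
    """Read the device-tree compatible list; entries are NUL-separated."""
    with open(path, "rb") as f:
        return f.read().decode("ascii", errors="ignore").replace("\0", " ")


def select_model(compatible, prefix="resnet18"):
    """Map the SoC named in `compatible` to a model file from the examples."""
    if "rk3588" in compatible:  # also matches rk3588s
        soc = "rk3588"
    elif "rk3562" in compatible:
        soc = "rk3562"
    elif "rk3566" in compatible or "rk3568" in compatible:
        soc = "rk3566_rk3568"
    else:
        raise ValueError(f"unsupported platform: {compatible!r}")
    return f"{prefix}_for_{soc}.rknn"
```

On the board, `select_model(read_compatible())` returns a name such as `resnet18_for_rk3588.rknn`, and `prefix="yolov5s"` selects the files in the yolov5_inference directory.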

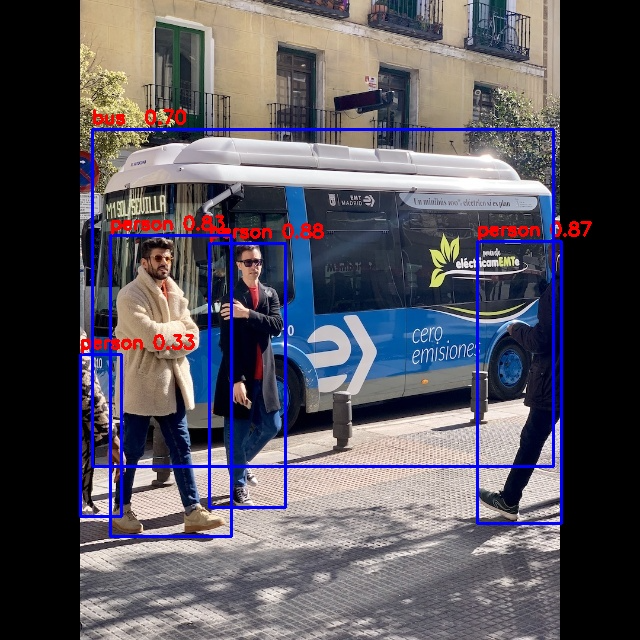
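The TOP 5 listing comes from ranking the model's output scores. A pure-Python sketch, assuming the final output is a flat list of class scores; apply `softmax` only if your model outputs raw logits rather than probabilities. `softmax` and `top_k` are hypothetical helpers, not the code in test.py. Ranking indices directly also avoids the repeated `[466 833]` line in the output above, which appears to come from two classes sharing exactly the same score.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(scores, k=5):
    """Return the k (class_index, score) pairs with the highest scores.

    sorted() is stable, so classes with equal scores keep index order
    and each class appears exactly once.
    """
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]
```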

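4.3.2. yolov5 inference test

Next, we deploy the yolov5s.rknn model converted in the earlier RKNN Toolkit2 chapter with a simple test.py that loads the model for the current board, runs inference, and post-processes the results (see the companion examples for the test.py source).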
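A core part of YOLOv5 post-processing is non-maximum suppression (NMS), which keeps the highest-scoring box among heavily overlapping candidates. Below is a minimal greedy NMS sketch over boxes in the same [left, top, right, bottom] order the script prints. The 0.25 score and 0.45 IoU thresholds are assumed typical YOLOv5 values, not read from test.py; in practice NMS is usually applied per class, and decoding the model's raw outputs into candidate boxes is not shown. `iou` and `nms` are hypothetical helpers.

```python
def iou(a, b):
    """Intersection over union of two [left, top, right, bottom] boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.45, score_thresh=0.25):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    # Drop low-confidence candidates, then sort the rest by score
    order = [i for i in sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
             if scores[i] >= score_thresh]
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Suppress remaining boxes that overlap the kept box too much
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_thresh]
    return keep
```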

# Switch to the rknn_toolkit_lite2/examples/yolov5_inference directory
cd lubancat_ai_manual_code/dev_env/rknn_toolkit_lite2/examples/yolov5_inference

cat@lubancat:~/rknn_toolkit_lite2/examples/yolov5_inference$ python3 test.py
--> Load RKNN model
done
--> Init runtime environment
I RKNN: [17:37:51.791] RKNN Runtime Information: librknnrt version: 1.5.0 (e6fe0c678@2023-05-25T08:09:20)
I RKNN: [17:37:51.791] RKNN Driver Information: version: 0.8.8
I RKNN: [17:37:51.792] RKNN Model Information: version: 4, toolkit version: 1.5.0+1fa95b5c(compiler version: 1.5.0 (e6fe0c678@2023-05-25T16:15:03)), \
target: RKNPU v2, target platform: rk3588, framework name: ONNX, framework layout: NCHW, model inference type: static_shape
done
--> Running model
W RKNN: [17:37:51.896] Output(269): size_with_stride larger than model origin size, if need run OutputOperator in NPU, please call rknn_create_memory using size_with_stride.
W RKNN: [17:37:51.896] Output(271): size_with_stride larger than model origin size, if need run OutputOperator in NPU, please call rknn_create_memory using size_with_stride.
W RKNN: [17:37:51.897] Output(273): size_with_stride larger than model origin size, if need run OutputOperator in NPU, please call rknn_create_memory using size_with_stride.
done
class: person, score: 0.8831309676170349
box coordinate left,top,right,down: [209.6862335205078, 243.11955797672272, 285.13685607910156, 507.7035621404648]
class: person, score: 0.8669421076774597
box coordinate left,top,right,down: [477.6677174568176, 241.00597953796387, 561.1506419181824, 523.3208637237549]
class: person, score: 0.8258862495422363
box coordinate left,top,right,down: [110.24830067157745, 235.76190769672394, 231.76915538311005, 536.1012514829636]
class: person, score: 0.32633307576179504
box coordinate left,top,right,down: [80.75779604911804, 354.98213291168213, 121.49669003486633, 516.5315389633179]
class: bus , score: 0.7036669850349426
box coordinate left,top,right,down: [92.64832305908203, 129.30748665332794, 553.2792892456055, 466.27124869823456]

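If everything runs correctly, the detection result can be displayed, or you can check the output image out.jpg.

By default, the companion example yolov5_inference/test.py does not call cv2.imshow() to display the image, so open out.jpg yourself to view the result.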