5. PP-YOLOE

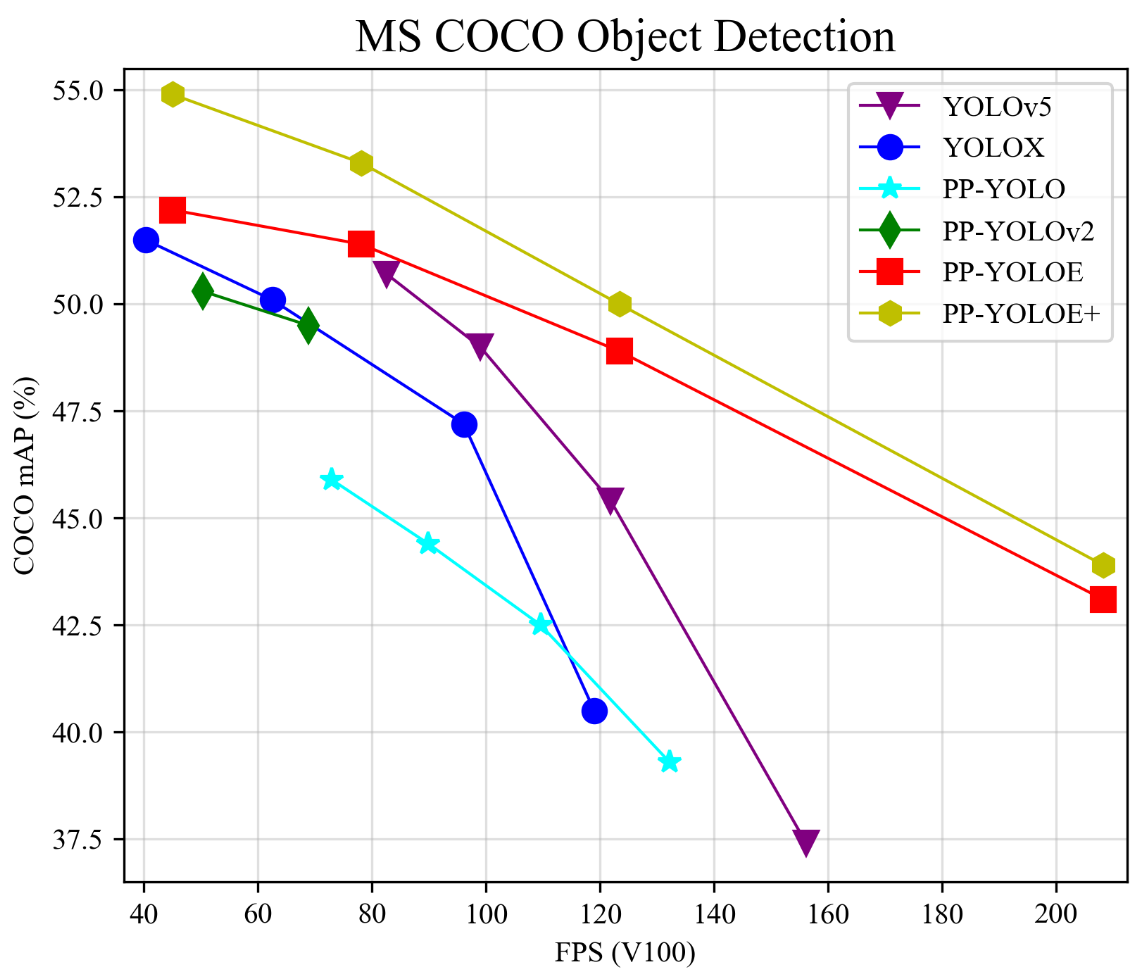

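PP-YOLOE is a strong single-stage, anchor-free detector built on PP-YOLOv2 that outperforms a number of popular YOLO models. It comes in four sizes, s/m/l/x, which are configured through a width multiplier and a depth multiplier. PP-YOLOE avoids special operators such as Deformable Convolution and Matrix NMS, so it can be deployed easily on a wide range of hardware. For more information, see the paper and here.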
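As a rough illustration of how the two multipliers produce the s/m/l/x variants, the sketch below scales a base backbone configuration. The multiplier values and the base channel and layer lists are assumptions based on the public PP-YOLOE configs, and `scale_backbone` is a hypothetical helper rather than a PaddleDetection API; the yml files under configs/ppyoloe remain the authoritative source.

```python
# Assumed multipliers per variant: (depth_mult, width_mult).
VARIANTS = {
    "s": (0.33, 0.50),
    "m": (0.67, 0.75),
    "l": (1.00, 1.00),
    "x": (1.33, 1.25),
}

# Assumed base backbone configuration before scaling.
BASE_CHANNELS = [64, 128, 256, 512, 1024]
BASE_LAYERS = [3, 6, 6, 3]


def scale_backbone(variant):
    """Return (channels, layers) after applying the variant's multipliers."""
    depth_mult, width_mult = VARIANTS[variant]
    # The width multiplier scales channel counts, the depth multiplier scales block counts.
    channels = [max(round(c * width_mult), 1) for c in BASE_CHANNELS]
    layers = [max(round(n * depth_mult), 1) for n in BASE_LAYERS]
    return channels, layers


if __name__ == "__main__":
    for name in VARIANTS:
        channels, layers = scale_backbone(name)
        print(f"PP-YOLOE-{name}: channels={channels}, layers={layers}")
```

Under these assumptions the s variant halves every channel count and keeps roughly a third of the blocks, which is why it is the natural candidate for an embedded NPU.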

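This chapter briefly describes how to deploy a PP-YOLOE model on LubanCat RK series boards.

Tip

Test environment: the LubanCat RK board runs Debian or Ubuntu; the PC runs WSL2 (Ubuntu 20.04); rknn-Toolkit2 is version 1.5.0; PaddleYOLO or PaddleDetection is release/2.6.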

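5.1. PP-YOLOE environment setup

For this test, use conda to create a virtual environment named PaddleYOLO, then install Paddle.

# Create a conda environment named PaddleYOLO and pin the Python version

conda create -n PaddleYOLO python=3.8

# Activate the environment before installing packages

conda activate PaddleYOLO

# Install Paddle; the PaddleYOLO codebase recommends paddlepaddle 2.4.2 or later

# This tutorial was tested with the GPU build of paddlepaddle 2.5.1, installed with conda

conda install paddlepaddle-gpu==2.5.1 cudatoolkit=11.6 -c https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/Paddle/ -c conda-forge

Paddle is installed here with conda; for the exact command that matches your environment, see the official website.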
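After installation, a quick check from Python can confirm that the package imports and whether the build has GPU support. This is a minimal sketch; `paddle_status` is a hypothetical helper name, and it returns `None` instead of failing when Paddle is not installed.

```python
import importlib


def paddle_status():
    """Describe the local Paddle install, or return None if it is absent."""
    try:
        paddle = importlib.import_module("paddle")
    except ImportError:
        return None
    # is_compiled_with_cuda() reports whether this build has GPU support.
    gpu = paddle.device.is_compiled_with_cuda()
    return f"paddle {paddle.__version__}, CUDA build: {gpu}"


if __name__ == "__main__":
    status = paddle_status()
    if status is None:
        print("paddle is not installed in this environment")
    else:
        print(status)
        # For a fuller self-test, Paddle also provides paddle.utils.run_check().
```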

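5.2. Basic usage of the PP-YOLOE+ model

We use the officially provided weights and the COCO dataset to run a quick inference test with PP-YOLOE+, then save the model, convert it, and deploy it to a LubanCat board. To train a model on your own dataset, see here; further instructions are in the documents under the PaddleYOLO/docs directory.

Get the PaddleYOLO source:

# Clone PaddleYOLO; the default branch is release 2.6

git clone https://github.com/PaddlePaddle/PaddleYOLO.git

# Enter the PaddleYOLO directory and install the dependencies

cd PaddleYOLO

pip install -r requirements.txt # install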

5.2.1. Model inference

PP-YOLOE+ is the latest model in the ppyoloe family and comes in s/m/l/x variants. Download the weights (they can also be obtained from here):

# PP-YOLOE+_s

wget https://bj.bcebos.com/v1/paddledet/models/ppyoloe_plus_crn_s_80e_coco.pdparams

# PP-YOLOE+_m

wget https://bj.bcebos.com/v1/paddledet/models/ppyoloe_plus_crn_m_80e_coco.pdparams

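Run inference with tools/infer.py:

#export CUDA_VISIBLE_DEVICES=0

# Pillow 9.5.0 may need to be installed

pip install Pillow==9.5.0

# Run prediction with tools/infer.py (single image or image folder)

# -c selects the config file, either one from configs/ (this test uses ppyoloe_plus_crn_s_80e_coco.yml) or one you added yourself

# -o / --opt sets config options; here weights points to the weights downloaded above, and it can also be set directly to weights=https://bj.bcebos.com/v1/paddledet/models/ppyoloe_plus_crn_s_80e_coco.pdparams

# --infer_img selects a single image (--infer_dir selects a folder); --draw_threshold is the score threshold for drawing boxes (default 0.5); the image size is 640*640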

python tools/infer.py -c configs/ppyoloe/ppyoloe_plus_crn_s_80e_coco.yml \

-o weights=ppyoloe_plus_crn_s_80e_coco.pdparams --infer_img=demo/000000014439_640x640.jpg --draw_threshold=0.5

Warning: import ppdet from source directory without installing, run 'python setup.py install' to install ppdet firstly

W1016 16:13:59.105559 12482 gpu_resources.cc:119] Please NOTE: device: 0, GPU Compute Capability: 8.6, Driver API Version: 12.2, Runtime API Version: 11.6

W1016 16:13:59.106359 12482 gpu_resources.cc:149] device: 0, cuDNN Version: 8.9.

[10/16 16:14:01] ppdet.utils.checkpoint INFO: Finish loading model weights: ppyoloe_plus_crn_s_80e_coco.pdparams

[10/16 16:14:01] ppdet.data.source.category WARNING: anno_file 'dataset/coco/annotations/instances_val2017.json' is None or not set or not exist,

please recheck TrainDataset/EvalDataset/TestDataset.anno_path, otherwise the default categories will be used by metric_type.

[10/16 16:14:01] ppdet.data.source.category WARNING: metric_type: COCO, load default categories of COCO.

[10/16 16:14:01] ppdet.engine INFO: Test loader length is 1, test batch_size is 1.

[10/16 16:14:01] ppdet.engine INFO: Starting predicting ......

100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1/1 [00:01<00:00, 1.86s/it]

[10/16 16:14:03] ppdet.engine INFO: Detection bbox results save in output/000000014439_640x640.jpg

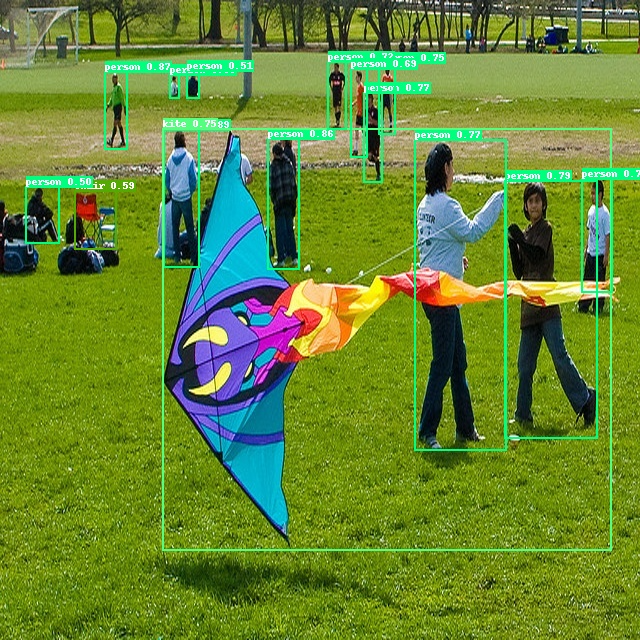

预测结果保存在output/000000014439_640x640.jpg:

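5.3. Deploying the model on the board

5.3.1. Optimizing for RKNN

To get better inference performance on the RKNPU, the model's output structure is adjusted. These changes affect the post-processing logic and mainly consist of the following:

The DFL structure is moved into post-processing.

An extra output is added: the sum of all class scores, used to speed up candidate-box filtering in post-processing.

For details, see rknn_model_zoo.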
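To make the two changes concrete, here is a minimal pure-Python sketch of what post-processing then has to do for one grid cell. It assumes 17 distance bins per box side and a 0.5 cell-centre offset, and all function names are hypothetical; the actual implementation in rknn_model_zoo may differ in layout and details.

```python
import math


def dfl_expectation(logits):
    """Softmax over the distance bins, then the expected bin index."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return sum(i * e / total for i, e in enumerate(exps))


def decode_cell(side_logits, gx, gy, stride):
    """Decode one grid cell into (x1, y1, x2, y2) in input-image pixels.

    side_logits holds four bin-logit lists ordered left, top, right, bottom.
    The 0.5 offset places the anchor point at the cell centre (assumed).
    """
    left, top, right, bottom = (dfl_expectation(s) for s in side_logits)
    cx, cy = gx + 0.5, gy + 0.5
    return ((cx - left) * stride, (cy - top) * stride,
            (cx + right) * stride, (cy + bottom) * stride)


def may_contain_object(score_sum, threshold):
    """Cheap pre-filter based on the extra score-sum output.

    Class scores are non-negative, so no single class can reach the
    threshold when their (clipped) sum is already below it.
    """
    return score_sum >= threshold


if __name__ == "__main__":
    uniform = [0.0] * 17  # assumed number of bins per side
    print(decode_cell([uniform] * 4, gx=10, gy=5, stride=8))
    print(may_contain_object(0.1, threshold=0.25))
```

The pre-filter lets post-processing read one value per cell and skip the per-class scan for most cells, which is where the speed-up comes from.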

Starting from the PaddleDetection or PaddleYOLO source pulled earlier (release/2.6), make a small change to the source:

 if self.training:

     yolo_losses = self.yolo_head(neck_feats, self.inputs)

     return yolo_losses

 else:

     yolo_head_outs = self.yolo_head(neck_feats)

+    return yolo_head_outs

 ...

+rk_out_list = []

 for i, feat in enumerate(feats):

     _, _, h, w = feat.shape

     l = h * w

     avg_feat = F.adaptive_avg_pool2d(feat, (1, 1))

     cls_logit = self.pred_cls[i](self.stem_cls[i](feat, avg_feat) +

                                  feat)

     reg_dist = self.pred_reg[i](self.stem_reg[i](feat, avg_feat))

+    rk_out_list.append(reg_dist)

+    rk_out_list.append(F.sigmoid(cls_logit))

+    rk_out_list.append(paddle.clip(rk_out_list[-1].sum(1, keepdim=True), 0, 1))

     reg_dist = reg_dist.reshape(

         [-1, 4, self.reg_channels, l]).transpose([0, 2, 3, 1])

     if self.use_shared_conv:

         reg_dist = self.proj_conv(F.softmax(

             reg_dist, axis=1)).squeeze(1)

     else:

         reg_dist = F.softmax(reg_dist, axis=1)

     # cls and reg

     cls_score = F.sigmoid(cls_logit)

     cls_score_list.append(cls_score.reshape([-1, self.num_classes, l]))

     reg_dist_list.append(reg_dist)

+return rk_out_list

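The simple change above is only for model export; comment it out when training. The source can also be modified by applying a patch (made against release/2.5); see rknn_model_zoo for details.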
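The modified head returns raw per-level tensors, so confidence filtering and non-maximum suppression (NMS) are left to post-processing. The sketch below shows plain greedy NMS in pure Python. The function names and the 0.45 IoU threshold are illustrative assumptions rather than the values used by rknn_model_zoo, and real post-processing would typically run it once per class.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.45):
    """Return indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))
```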

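5.3.2. Exporting the ONNX model

# Enter the PaddleDetection or PaddleYOLO source directory, then export the Paddle model with tools/export_model.py

cd PaddleDetection

# -c selects the config file from the configs/ directory

# --output_dir sets the directory the model is saved to (default: output_inference)

# -o / --opt sets config options; here weights points to the weights downloaded above, among other options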

python tools/export_model.py -c configs/ppyoloe/ppyoloe_plus_crn_s_80e_coco.yml \

-o weights=ppyoloe_plus_crn_s_80e_coco.pdparams \

exclude_nms=True exclude_post_process=True \

--output_dir inference_model

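The model is saved under inference_model/ppyoloe_plus_crn_s_80e_coco:

ppyoloe_plus_crn_s_80e_coco

├── infer_cfg.yml # model configuration info

├── model.pdiparams # static-graph model parameters

├── model.pdiparams.info # extra parameter info, usually safe to ignore

└── model.pdmodel # static-graph model file

Tip

Newer versions of paddlepaddle do not generate the static-graph model file. If that happens, try setting the environment variable with export FLAGS_enable_pir_api=0 and export again.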
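Before running the conversion, it can help to confirm that the export directory really contains the static-graph files. The check below is a small sketch; `missing_export_files` is a hypothetical helper, and the file list simply mirrors the directory listing above.

```python
from pathlib import Path

# Files the conversion step relies on, taken from the listing above.
EXPECTED_FILES = ("infer_cfg.yml", "model.pdiparams", "model.pdmodel")


def missing_export_files(model_dir):
    """Return the expected files that are absent from model_dir."""
    root = Path(model_dir)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_export_files("inference_model/ppyoloe_plus_crn_s_80e_coco")
    if missing:
        print("missing:", ", ".join(missing))
    else:
        print("export looks complete")
```

If model.pdmodel is reported missing, the environment variable from the tip is the first thing to try.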

Then convert the Paddle model to an ONNX model:

# Convert the model

paddle2onnx --model_dir inference_model/ppyoloe_plus_crn_s_80e_coco \

--model_filename model.pdmodel \

--params_filename model.pdiparams \

--opset_version 11 \

--save_file ./inference_model/ppyoloe_plus_crn_s_80e_coco/ppyoloe_plus_crn_s_80e_coco.onnx

# Fix the model input shape

python -m paddle2onnx.optimize --input_model inference_model/ppyoloe_plus_crn_s_80e_coco/ppyoloe_plus_crn_s_80e_coco.onnx \

--output_model inference_model/ppyoloe_plus_crn_s_80e_coco/ppyoloe_plus_crn_s_80e_coco.onnx \

--input_shape_dict "{'image':[1,3,640,640]}"

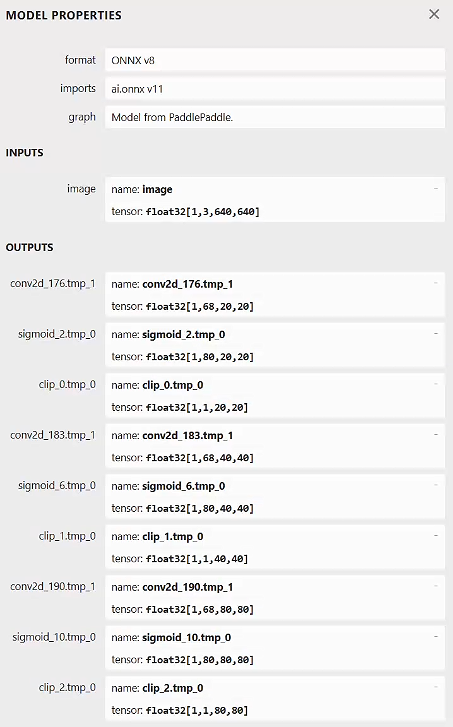

可以使用 Netron 查看下导出的onnx模型的输出和输出:
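The same information can be read programmatically. The sketch below lists input and output names and shapes from a loaded ONNX model; `describe_io` is a hypothetical helper, and it assumes the `onnx` package is installed when run against a real file. With the head modification from section 5.3.1, one would expect three output tensors per feature level (box distribution, class scores, score sum).

```python
import os


def _shape(value_info):
    """Return dims as a list, using the symbolic name for dynamic dims."""
    dims = value_info.type.tensor_type.shape.dim
    return [d.dim_value if d.dim_value > 0 else (d.dim_param or "?") for d in dims]


def describe_io(model):
    """Map input and output tensor names to shapes for an ONNX ModelProto."""
    # Some exporters also list weights as graph inputs; skip those.
    weights = {init.name for init in model.graph.initializer}
    inputs = {v.name: _shape(v) for v in model.graph.input if v.name not in weights}
    outputs = {v.name: _shape(v) for v in model.graph.output}
    return inputs, outputs


if __name__ == "__main__":
    path = "inference_model/ppyoloe_plus_crn_s_80e_coco/ppyoloe_plus_crn_s_80e_coco.onnx"
    if os.path.exists(path):
        import onnx  # only needed when a real model file is available

        inputs, outputs = describe_io(onnx.load(path))
        for name, shape in inputs.items():
            print("input ", name, shape)
        for name, shape in outputs.items():
            print("output", name, shape)
    else:
        print("model file not found:", path)
```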

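5.3.3. Exporting the RKNN model with rknn-Toolkit2

Next, convert the ONNX model to an RKNN model. The programs in rknn_model_zoo can be used directly; here we write our own script based on rknn_model_zoo.

Tip

When testing, make sure the rknn-Toolkit2 version matches the version of the librknnrt.so library used when running the inference program.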
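One way to apply this tip is to compare the leading X.Y.Z of both version strings. The sketch below is an assumption-laden helper: the function names are hypothetical, `rknn-toolkit2` is assumed to be the installed package name, and the runtime string has to be copied by hand from the log printed on the board.

```python
import re
from importlib import metadata


def release_of(version_text):
    """Extract the first X.Y.Z found in a version string, or None."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", version_text)
    return tuple(int(part) for part in match.groups()) if match else None


def same_release(toolkit_version, runtime_version):
    """True when both strings carry the same X.Y.Z release."""
    a, b = release_of(toolkit_version), release_of(runtime_version)
    return a is not None and a == b


def installed_toolkit_version():
    """Version of the rknn-toolkit2 package, or None if it is not installed."""
    try:
        return metadata.version("rknn-toolkit2")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    toolkit = installed_toolkit_version() or "1.5.0+1fa95b5c"
    runtime = "1.5.0"  # placeholder: paste the librknnrt version string from the board
    print(toolkit, runtime, same_release(toolkit, runtime))
```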

We use rknn-Toolkit2 to convert the ONNX model to RKNN and run a test inference (see the companion example):

if __name__ == '__main__':

    # Create RKNN object

    #rknn = RKNN(verbose=True)

    rknn = RKNN()

    # Pre-process config; target_platform='rk3588'

    print('--> Config model')

    rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]], target_platform='rk3588')

    print('done')

    # Load ONNX model

    print('--> Loading model')

    ret = rknn.load_onnx(model=ONNX_MODEL)

    if ret != 0:

        print('Load model failed!')

        exit(ret)

    print('done')

    # Build model

    print('--> Building model')

    ret = rknn.build(do_quantization=QUANTIZE_ON, dataset=DATASET)

    if ret != 0:

        print('Build model failed!')

        exit(ret)

    print('done')

    # Export RKNN model

    print('--> Export rknn model')

    ret = rknn.export_rknn(RKNN_MODEL)

    if ret != 0:

        print('Export rknn model failed!')

        exit(ret)

    print('done')

    # Init runtime environment (no target given, so the PC simulator is used)

    print('--> Init runtime environment')

    ret = rknn.init_runtime()

    # ret = rknn.init_runtime('rk3566')

    if ret != 0:

        print('Init runtime environment failed!')

        exit(ret)

    print('done')

    # Set inputs: letterbox to the model size, then convert BGR to RGB

    img_src = cv2.imread(IMG_PATH)

    src_shape = img_src.shape[:2]

    img, ratio, (dw, dh) = letter_box(img_src, IMG_SIZE)

    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

    #img = cv2.resize(img_src, IMG_SIZE)

    # Inference

    print('--> Running model')

    outputs = rknn.inference(inputs=[img])

    print('done')

    # Post process: decode boxes, then map them back to the source image

    boxes, classes, scores = post_process(outputs)

    img_p = img_src.copy()

    if boxes is not None:

        draw(img_p, get_real_box(src_shape, boxes, dw, dh, ratio), scores, classes)

    cv2.imwrite("result.jpg", img_p)

    # Release the RKNN context

    rknn.release()
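The script relies on `letter_box` and `get_real_box` from the companion example, which are not shown here. The sketch below illustrates the underlying arithmetic under the usual letterbox convention (uniform scale, symmetric padding); `letterbox_params` and `to_source_box` are hypothetical stand-ins, not the companion code.

```python
def letterbox_params(src_h, src_w, dst_h, dst_w):
    """Return (ratio, dw, dh): the uniform scale and the per-side padding."""
    ratio = min(dst_h / src_h, dst_w / src_w)
    new_h, new_w = round(src_h * ratio), round(src_w * ratio)
    dw = (dst_w - new_w) / 2  # horizontal padding on each side
    dh = (dst_h - new_h) / 2  # vertical padding on each side
    return ratio, dw, dh


def to_source_box(box, ratio, dw, dh, src_h, src_w):
    """Map (x1, y1, x2, y2) from the letterboxed image back to the source image."""
    def clamp(value, upper):
        return max(0, min(int(round(value)), upper))

    x1, y1, x2, y2 = box
    # Undo the padding first, then the scaling, and clip to the image bounds.
    return [clamp((x1 - dw) / ratio, src_w), clamp((y1 - dh) / ratio, src_h),
            clamp((x2 - dw) / ratio, src_w), clamp((y2 - dh) / ratio, src_h)]


if __name__ == "__main__":
    ratio, dw, dh = letterbox_params(480, 640, 640, 640)
    print(ratio, dw, dh)
    print(to_source_box((100, 180, 200, 280), ratio, dw, dh, 480, 640))
```

Keeping the aspect ratio this way avoids distorting objects, at the cost of having to undo the padding before drawing boxes on the original image.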

Convert the model and run inference:

# Run test.py

python test.py

# Output

W __init__: rknn-toolkit2 version: 1.5.0+1fa95b5c

--> Config model

done

--> Loading model

W load_onnx: It is recommended onnx opset 12, but your onnx model opset is 11!

Loading : 100%|████████████████████████████████████████████████| 411/411 [00:00<00:00, 71089.90it/s]

done

--> Building model

W build: found outlier value, this may affect quantization accuracy

const name abs_mean abs_std outlier value

conv2d_97.w_0 0.11 0.19 23.804

Analysing : 100%|███████████████████████████████████████████████| 232/232 [00:00<00:00, 8687.89it/s]

Quantizating : 100%|█████████████████████████████████████████████| 232/232 [00:00<00:00, 253.11it/s]

W build: The default input dtype of 'image' is changed from 'float32' to 'int8' in rknn model for performance!

Please take care of this change when deploy rknn model with Runtime API!

...... (omitted)

done

--> Export rknn model

done

--> Init runtime environment

W init_runtime: Target is None, use simulator!

done

--> Running model

W inference: The 'data_format' has not been set and defaults is nhwc!

Analysing : 100%|███████████████████████████████████████████████| 242/242 [00:00<00:00, 8721.02it/s]

Preparing : 100%|████████████████████████████████████████████████| 242/242 [00:00<00:00, 362.91it/s]

W inference: The dims of input(ndarray) shape (640, 640, 3) is wrong, expect dims is 4! Try expand dims to (1, 640, 640, 3)!

done

class: person, score: 0.8833610415458679

box coordinate left,top,right,down: [161, 82, 199, 168]

class: person, score: 0.8449540138244629

box coordinate left,top,right,down: [267, 81, 299, 169]

class: person, score: 0.8309878706932068

box coordinate left,top,right,down: [104, 46, 127, 93]

class: person, score: 0.7864068746566772

box coordinate left,top,right,down: [504, 110, 595, 283]

class: person, score: 0.7833229303359985

box coordinate left,top,right,down: [414, 89, 507, 284]

class: person, score: 0.7827470898628235

box coordinate left,top,right,down: [363, 62, 380, 114]

class: person, score: 0.7827470898628235

box coordinate left,top,right,down: [378, 40, 395, 83]

class: person, score: 0.7735382914543152

box coordinate left,top,right,down: [327, 39, 346, 80]

class: person, score: 0.7581902742385864

box coordinate left,top,right,down: [351, 44, 367, 97]

class: person, score: 0.7576653957366943

box coordinate left,top,right,down: [580, 113, 612, 195]

class: person, score: 0.666102409362793

box coordinate left,top,right,down: [169, 47, 179, 60]

class: person, score: 0.5893625020980835

box coordinate left,top,right,down: [186, 45, 200, 61]

class: person, score: 0.5003442168235779

box coordinate left,top,right,down: [24, 117, 60, 152]

class: kite, score: 0.687720537185669

box coordinate left,top,right,down: [163, 82, 558, 344]

class: chair, score: 0.6231280565261841

box coordinate left,top,right,down: [74, 121, 117, 158]

The result image is saved as result.jpg in the current directory.
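The build step in the script quantizes the model using the calibration list passed as `dataset=DATASET`. To my understanding this is a plain text file with one image path per line; the sketch below generates such a file from a folder of images. The helper name, the suffix filter, and the `limit` parameter are assumptions for illustration.

```python
from pathlib import Path

# Image types to include in the calibration list (assumed set).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def write_dataset_list(image_dir, list_path, limit=None):
    """Write one image path per line to list_path and return the count."""
    images = sorted(p for p in Path(image_dir).iterdir()
                    if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)
    if limit is not None:
        images = images[:limit]
    Path(list_path).write_text("".join(f"{p}\n" for p in images))
    return len(images)


if __name__ == "__main__":
    folder = Path("calib_images")  # hypothetical folder of representative images
    if folder.is_dir():
        print(write_dataset_list(folder, "dataset.txt", limit=50), "images listed")
    else:
        print("no calibration folder found:", folder)
```

Images that resemble the deployment scene generally give better quantization ranges than arbitrary pictures.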

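5.3.4. Deployment test on the board

1. For a simple deployment test, use the RKNN_C_demo provided in the rknn_model_zoo repository. The test board is a LubanCat-4 running Debian 11.

# Clone the rknn_model_zoo repository. This tutorial was tested against rknn_model_zoo v1.5.0, so after cloning, check out tag v1.5.0 into a new branch.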

cat@lubancat:~/$ git clone https://github.com/airockchip/rknn_model_zoo.git

cat@lubancat:~/$ cd rknn_model_zoo

cat@lubancat:~/rknn_model_zoo$ git checkout -b test v1.5.0

# Enter rknn_model_zoo/libs/rklibs and clone the required libraries, rknpu2 and librga

cat@lubancat:~/rknn_model_zoo$ cd ./libs/rklibs

cat@lubancat:~/rknn_model_zoo/libs/rklibs$ git clone https://github.com/rockchip-linux/rknpu2

cat@lubancat:~/rknn_model_zoo/libs/rklibs$ git clone https://github.com/airockchip/librga

# Then enter ~/rknn_model_zoo/models/CV/object_detection/yolo/RKNN_C_demo/RKNN_toolkit_2/rknn_yolo_demo

cat@lubancat:~/$ cd rknn_model_zoo/models/CV/object_detection/yolo/RKNN_C_demo/RKNN_toolkit_2/rknn_yolo_demo

# Run build-linux_RK3588.sh to build the project (using the system default compiler); you can also adjust CONF_THRESHOLD in include/yolo.h

# The generated files are installed under the build/ directory

cat@lubancat:~/rknn_model_zoo/models/CV/object_detection/yolo/RKNN_C_demo/RKNN_toolkit_2/rknn_yolo_demo$ ./build-linux_RK3588.sh

-- The C compiler identification is GNU 10.2.1

-- The CXX compiler identification is GNU 10.2.1

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /usr/bin/aarch64-linux-gnu-gcc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/aarch64-linux-gnu-g++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Configuring done

-- Generating done

-- Build files have been written to: /home/cat/rknn_model_zoo/models/CV/object_detection/yolo/RKNN_C_demo/RKNN_toolkit_2/rknn_yolo_demo/build/build_linux_aarch64

Scanning dependencies of target rknn_yolo_demo_zero_copy

Scanning dependencies of target rknn_yolo_demo

[ 8%] Building CXX object CMakeFiles/rknn_yolo_demo_zero_copy.dir/src/zero_copy_demo.cc.o

[ 16%] Building CXX object CMakeFiles/rknn_yolo_demo.dir/src/main.cc.o

[ 33%] Building CXX object CMakeFiles/rknn_yolo_demo.dir/src/yolo.cc.o

[ 33%] Building CXX object CMakeFiles/rknn_yolo_demo_zero_copy.dir/src/yolo.cc.o

[ 41%] Building CXX object CMakeFiles/rknn_yolo_demo_zero_copy.dir/home/cat/rknn_model_zoo/models/CV/object_detection/yolo/RKNN_C_demo/yolo_utils/resize_function.cc.o

[ 50%] Building CXX object CMakeFiles/rknn_yolo_demo_zero_copy.dir/home/cat/rknn_model_zoo/libs/common/rkdemo_utils/rknn_demo_utils.cc.o

[ 58%] Building CXX object CMakeFiles/rknn_yolo_demo.dir/home/cat/rknn_model_zoo/models/CV/object_detection/yolo/RKNN_C_demo/yolo_utils/resize_function.cc.o

[ 66%] Building CXX object CMakeFiles/rknn_yolo_demo.dir/home/cat/rknn_model_zoo/libs/common/rkdemo_utils/rknn_demo_utils.cc.o

[ 75%] Linking CXX executable rknn_yolo_demo_zero_copy

[ 83%] Linking CXX executable rknn_yolo_demo

[ 91%] Built target rknn_yolo_demo_zero_copy

[100%] Built target rknn_yolo_demo

[ 50%] Built target rknn_yolo_demo_zero_copy

[100%] Built target rknn_yolo_demo

Install the project...

-- Install configuration: ""

## Remaining output omitted .......

Run the demo to perform inference:

# Enter install/rk3588/Linux/rknn_yolo_demo and copy the RKNN model converted earlier into it

# (xxx_80e_coco.rknn below stands for that model file), then run:

cat@lubancat:xxx/install/rk3588/Linux/rknn_yolo_demo$ ./rknn_yolo_demo ppyoloe_plus q8 ./xxx_80e_coco.rknn ./model/test.jpg

MODEL HYPERPARAM:

Model type: ppyoloe_plus, 5

Anchor free

Post process with: q8

img_height: 640, img_width: 640, img_channel: 3

RUN MODEL ONE TIME FOR TIME DETAIL

inputs_set use: 0.731000 ms

rknn_run use: 52.935001 ms

outputs_get use: 2.613000 ms

loadLabelName ./model/coco_80_labels_list.txt

post_process load lable finish

cpu_post_process use: 2.582000 ms

DRAWING OBJECT

person @ (163 129 199 266) 0.879869

person @ (104 72 127 148) 0.862412

person @ (267 140 299 268) 0.851937

person @ (363 93 381 181) 0.785598

person @ (504 181 597 435) 0.774071

person @ (414 140 506 450) 0.749399

person @ (378 63 395 132) 0.739773

kite @ (162 129 607 549) 0.718560

person @ (327 61 347 127) 0.709077

person @ (581 179 612 285) 0.708784

person @ (350 69 366 156) 0.678381

chair @ (74 192 107 247) 0.586293

person @ (169 75 179 97) 0.580154

person @ (25 187 59 242) 0.516749

person @ (186 71 200 96) 0.497275

SAVE TO ./out.bmp

WITHOUT POST_PROCESS

full run for 10 loops, average time: 43.572899 ms

WITH POST_PROCESS

full run for 10 loops, average time: 55.550896 ms

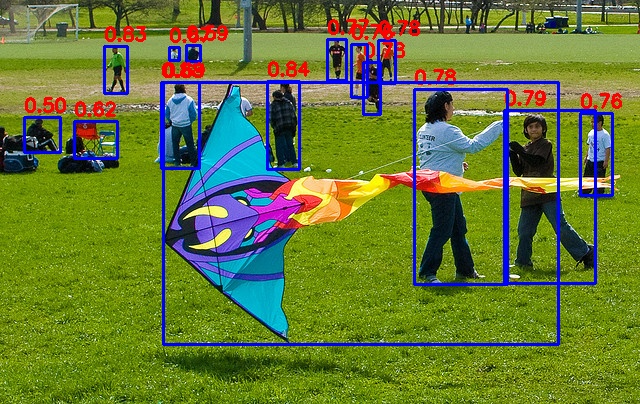

结果保存在当前目录下的out.bmp,结果显示:
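The average times in the log translate directly into throughput. As a small worked example, the snippet below converts the two averages reported above into frames per second (roughly 23 FPS without post-processing and 18 FPS with it); `fps` is just an illustrative helper.

```python
def fps(avg_ms):
    """Convert an average per-frame latency in milliseconds to frames per second."""
    if avg_ms <= 0:
        raise ValueError("average time must be positive")
    return 1000.0 / avg_ms


# Average times copied from the demo log above.
TIMINGS_MS = {
    "without post-process": 43.572899,
    "with post-process": 55.550896,
}

if __name__ == "__main__":
    for label, ms in TIMINGS_MS.items():
        print(f"{label}: {ms:.2f} ms -> {fps(ms):.1f} FPS")
```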