5. RKNPU2

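RKNPU2 provides a cross-platform programming interface (C/C++) for chip platforms that include an RKNPU. It helps users deploy RKNN models exported by RKNN-Toolkit2 and speeds up the delivery of AI applications.

Before using the RKNN API, first convert your model to an RKNN model with RKNN-Toolkit2. (Note: different versions of RKNN-Toolkit2 and RKNPU2 may be incompatible, so use matching version numbers.) Once you have the RKNN model, you can use the RKNN API to develop applications on the target platform.

This chapter briefly introduces the development workflow of the RKNN API library and walks through usage examples.

Important

Test environment: LubanCat RK-series boards running a Debian or Ubuntu image; the PC runs Ubuntu 20.04. When this tutorial was written, RKNN-Toolkit2 was version 1.5.0 and RKNPU2 was version 1.5.0. The NPU driver was 0.7.2 on LubanCat-0/1/2 boards and 0.8.8 on the LubanCat-4 board.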

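5.1. Using the RKNN API

The RKNN API library file is librknnrt.so (for RK356X/RK3588), and the header files are rknn_api.h and rknn_matmul_api.h. To use the library on Linux, place the library file in a system library search path, typically /usr/lib, and the header files in /usr/include.

The images provided for LubanCat boards (Debian 10/11, Ubuntu 20.04/22.04) already have the library installed. To update it, get the latest runtime library from the rknpu2/runtime/ directory of the rknpu2 project.

The RKNN API can be called in two ways: through the general API or through the zero-copy API.

Both sets of interfaces are available on RK356X/RK3588. When the input data has only a virtual address, only the general API can be used. When the input data has a physical address or an fd, either set can be used. The general API and the zero-copy API must not be mixed in the same call flow.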

5.1.1. General API

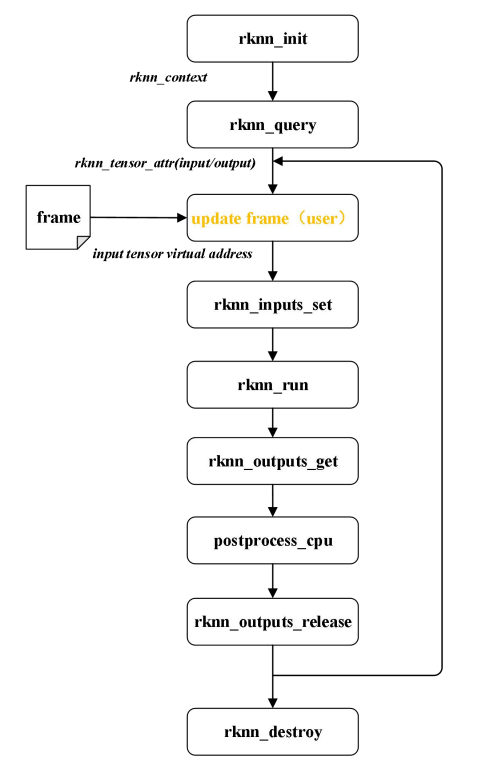

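With the general RKNN API, data quantization, normalization, and format conversion all run on the CPU, while model inference runs on the NPU. The figure in this section (taken from the RKNPU API user guide) shows the flow.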
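To make the CPU-side quantization step more concrete, here is a minimal sketch of affine INT8 quantization and dequantization. The function names are hypothetical and are not part of the RKNN API; the sketch only assumes the usual affine mapping, with zp and scale taken from the tensor attributes that rknn_query reports (the demo logs later in this chapter print them as zp=... and scale=...).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper (not an RKNN API call).
// Affine quantization: q = round(x / scale) + zp, clamped to the int8 range.
inline std::int8_t quantize_affine_i8(float x, float scale, std::int32_t zp) {
  assert(scale > 0.0f);
  float q = std::round(x / scale) + static_cast<float>(zp);
  q = std::min(127.0f, std::max(-128.0f, q));
  return static_cast<std::int8_t>(q);
}

// Hypothetical helper (not an RKNN API call).
// Inverse mapping: x = (q - zp) * scale.
inline float dequantize_affine_i8(std::int8_t q, float scale, std::int32_t zp) {
  return (static_cast<float>(q) - static_cast<float>(zp)) * scale;
}
```

For example, with zp = -128 and scale = 1/255 (roughly the input attributes printed by the YOLOv5 demo), a normalized pixel value of 0.0 maps to -128 and 1.0 maps to 127.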

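Related functions:

rknn_init initializes the rknn_context object passed in, loads the RKNN model, and performs specific initialization according to the flag and the rknn_init_extend structure.

rknn_query queries model input/output information, per-layer run time, total inference time, SDK version, memory usage, user-defined strings, and similar information.

rknn_inputs_set sets the model's input data and supports multiple inputs. Each input is an rknn_input structure, which the user must fill in before passing it in.

rknn_run performs one model inference. With the general API, the input data must first be set with rknn_inputs_set.

rknn_outputs_get retrieves the output data of an inference and can fetch multiple outputs at once.

rknn_outputs_release releases the resources associated with the outputs obtained by rknn_outputs_get.

rknn_destroy releases the rknn_context and its associated resources.

A simple general-API call sequence looks like this: rknn_init initializes the rknn_context object and loads the RKNN model, then rknn_query queries the model's input and output information for use by the later calls. rknn_inputs_set sets the model input and rknn_run runs inference. When an inference finishes, rknn_outputs_get fetches the output data for post-processing, after which rknn_outputs_release frees the output resources. For each new frame, update the input data and run inference again. When all inference is done, rknn_destroy releases the rknn_context and its associated resources.

We recommend running the rknn_yolov5_demo example later in this chapter and reading its source code, which makes the general API call flow much clearer.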

5.1.2. Zero-Copy API

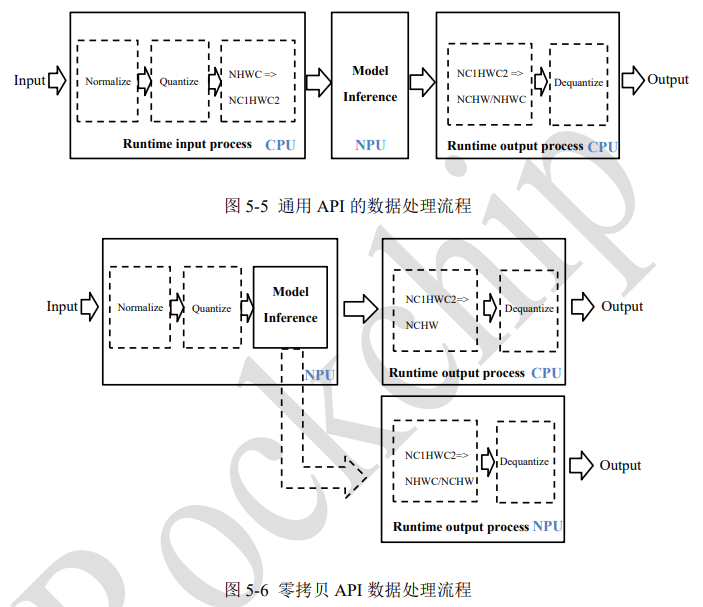

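Besides the general API there is a set of zero-copy APIs, which optimizes the general API's data processing flow: quantization, normalization, and format conversion all run on the NPU, while output layout conversion and dequantization run on either the CPU or the NPU.

The main difference between the two API sets is that, with the general API, every frame update requires copying the data allocated by an external module into the NPU runtime's input memory, whereas the zero-copy API uses pre-allocated memory directly, which reduces the cost of memory copies. The zero-copy call flow is similar to the general one, except that setting the model's input and output memory uses interfaces such as rknn_create_mem, rknn_create_mem_from_phys, rknn_create_mem_from_fd, rknn_set_io_mem, and rknn_destroy_mem.

The figure in this section (from the RKNN API guide) compares the data processing flows of the two API sets.

In practice the call pattern can be more complex. Depending on whether you allocate the model's memory blocks yourself (input/output/weights/intermediate results) and on how the memory is represented (file descriptor, physical address, and so on), there are three typical zero-copy call flows:

Input/output memory allocated by the runtime

Input/output memory allocated externally

Input/output/weight/intermediate-result memory allocated externally

For the detailed call flows, see the RKNPU user manual.

For RKNN API platform support and detailed interface descriptions, see Rockchip_RKNPU_User_Guide_RKNN_API.pdf in the rknpu2 project documentation, as well as the library header files.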

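5.2. Testing on Linux

5.2.1. Testing the rknn_yolov5_demo Example

This example uses the general API. The test steps are as follows:

1. Get the example. This test uses the rknn_yolov5_demo example from rknpu2: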

# Get the tutorial's companion examples

git clone https://gitee.com/LubanCat/lubancat_ai_manual_code.git

cd lubancat_ai_manual_code/dev_env/rknpu2/rknn_yolov5_demo

# Or get it from the rknpu2 GitHub repository

# git clone https://github.com/rockchip-linux/rknpu2.git

# cd rknpu2/examples/rknn_yolov5_demo

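2. Update the test model. You can use the YOLOv5 RKNN model converted earlier with RKNN-Toolkit2, or use the model shipped with the example.

3. Run the shell script to build and install the example. You can cross-compile on a PC or build directly on the board.

To cross-compile in a PC virtual machine, first get a cross compiler and set the environment variables, then build and install, and finally copy the files under the install directory to the board with scp, rsync, or a similar tool.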

# Install the default cross compiler

sudo apt update

sudo apt install gcc-aarch64-linux-gnu g++-aarch64-linux-gnu

# Or use the following cross compiler

# git clone https://github.com/LubanCat/gcc-buildroot-9.3.0-2020.03-x86_64_aarch64-rockchip-linux-gnu.git

# Switch to the example directory. If you use the cross compiler from GitHub, update the GCC_COMPILER path in the build script

cd lubancat_ai_manual_code/dev_env/rknpu2/rknn_yolov5_demo

# Run the script that matches the board's SoC. This test uses LubanCat-4 (RK3588S), so run build-linux_RK3588.sh:

# For LubanCat-0/1/2 boards, use build-linux_RK3566_RK3568.sh

./build-linux_RK3588.sh

-- The C compiler identification is GNU 9.4.0

-- The CXX compiler identification is GNU 9.4.0

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /usr/bin/aarch64-linux-gnu-gcc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/aarch64-linux-gnu-g++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Found OpenCV: /home/llh/rknn/test/3rdparty/opencv/opencv-linux-aarch64 (found version "3.4.5")

-- Configuring done (11.8s)

-- Generating done (0.2s)

-- Build files have been written to: /home/llh/rknn/test/rknn_yolov5_demo/build/build_linux_aarch64

[ 11%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/src/postprocess.cc.o

[ 22%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/utils/mpp_decoder.cpp.o

[ 33%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/src/main_video.cc.o

[ 44%] Building CXX object CMakeFiles/rknn_yolov5_demo.dir/src/main.cc.o

[ 66%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/utils/drawing.cpp.o

[ 77%] Building CXX object CMakeFiles/rknn_yolov5_demo.dir/src/postprocess.cc.o

[ 88%] Linking CXX executable rknn_yolov5_video_demo

[ 88%] Built target rknn_yolov5_video_demo

[100%] Linking CXX executable rknn_yolov5_demo

[100%] Built target rknn_yolov5_demo

[ 33%] Built target rknn_yolov5_demo

[100%] Built target rknn_yolov5_video_demo

Install the project...

# (output omitted)

/home/llh/rknn/test/rknn_yolov5_demo

# After the build, the program is installed under install/. Copy that directory to the board; here rsync is used:

rsync -avz ./install cat@192.168.103.131:~/

Building on the board is similar. Run the script for your board: build-linux_RK3566_RK3568.sh for RK356X, or build-linux_RK3588.sh for RK3588.

# Run the script that matches the board's SoC. This example uses LubanCat-4 (RK3588S, running Debian 11):

cat@lubancat:~/rknpu2/rknn_yolov5_demo$ ./build-linux_RK3588.sh

-- The C compiler identification is GNU 10.2.1

-- The CXX compiler identification is GNU 10.2.1

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /usr/bin/aarch64-linux-gnu-gcc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/aarch64-linux-gnu-g++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Found OpenCV: /home/cat/rknpu2/test/3rdparty/opencv/opencv-linux-aarch64 (found version "3.4.5")

-- Configuring done

-- Generating done

-- Build files have been written to: /home/cat/rknpu2/test/rknn_yolov5_demo/build/build_linux_aarch64

Scanning dependencies of target rknn_yolov5_demo

Scanning dependencies of target rknn_yolov5_video_demo

[ 11%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/utils/mpp_decoder.cpp.o

[ 44%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/src/postprocess.cc.o

[ 44%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/src/main_video.cc.o

[ 44%] Building CXX object CMakeFiles/rknn_yolov5_demo.dir/src/main.cc.o

In file included from /home/cat/rknpu2/test/rknn_yolov5_demo/utils/mpp_decoder.cpp:4:

/home/cat/rknpu2/test/rknn_yolov5_demo/utils/mpp_decoder.h:58:18: warning: converting to non-pointer type ‘pthread_t’ {aka ‘long unsigned int’} from NULL [-Wconversion-null]

58 | pthread_t th=NULL;

| ^~~~

[ 55%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/utils/mpp_encoder.cpp.o

# (output omitted)

[ 66%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/utils/drawing.cpp.o

[ 77%] Building CXX object CMakeFiles/rknn_yolov5_demo.dir/src/postprocess.cc.o

[ 88%] Linking CXX executable rknn_yolov5_video_demo

[ 88%] Built target rknn_yolov5_video_demo

[100%] Linking CXX executable rknn_yolov5_demo

[100%] Built target rknn_yolov5_demo

[ 66%] Built target rknn_yolov5_video_demo

[100%] Built target rknn_yolov5_demo

Install the project...

-- Install configuration: ""

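# (output omitted)

# After the build, the program is installed under install/rknn_yolov5_demo_Linux/.

# There are two executables: one for image detection and one for video detection.

When building on the board, the RKNN API library from the 3rdparty directory is linked. At run time you can use either that library or the RKNN API library installed by default on the LubanCat system.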

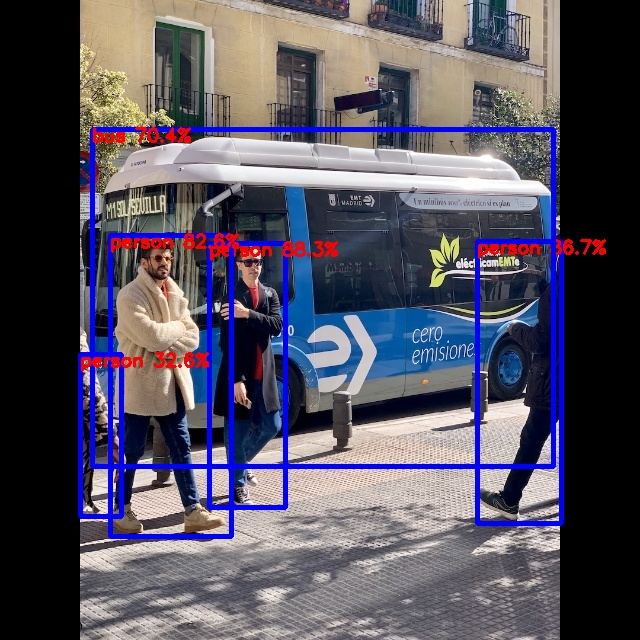

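4. Test the example

Whether the install directory came from cross-compiling or from building on the board, change into install/rknn_yolov5_demo_Linux/. To run detection on an image, execute rknn_yolov5_demo as follows:

# Switch to the install directory and use the libraries installed with the example at run time

cat@lubancat:~/install/rknn_yolov5_demo_Linux$ export LD_LIBRARY_PATH=./lib

# Usage; choose the model that matches your board

./rknn_yolov5_demo <rknn model> <jpg>

# Run with the default model and image (tested on LubanCat-4 with the program built by build-linux_RK3588.sh):

# On LubanCat-0/1/2 boards, use the model under the RK3566_RK3568 directory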

cat@lubancat:~/install/rknn_yolov5_demo_Linux$ ./rknn_yolov5_demo ./model/RK3588/yolov5s-640-640.rknn ./model/bus.jpg

post process config: box_conf_threshold = 0.25, nms_threshold = 0.45

Read ./model/bus.jpg ...

img width = 640, img height = 640

Loading mode...

sdk version: 1.5.0 (e6fe0c678@2023-05-25T08:09:20) driver version: 0.8.8

model input num: 1, output num: 3

index=0, name=images, n_dims=4, dims=[1, 640, 640, 3], n_elems=1228800, size=1228800, w_stride = 640, size_with_stride=1228800, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003922

index=0, name=269, n_dims=4, dims=[1, 255, 80, 80], n_elems=1632000, size=1632000, w_stride = 0, size_with_stride=1638400, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=83, scale=0.093136

index=1, name=271, n_dims=4, dims=[1, 255, 40, 40], n_elems=408000, size=408000, w_stride = 0, size_with_stride=491520, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=48, scale=0.089854

index=2, name=273, n_dims=4, dims=[1, 255, 20, 20], n_elems=102000, size=102000, w_stride = 0, size_with_stride=163840, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=46, scale=0.078630

model is NHWC input fmt

model input height=640, width=640, channel=3

once run use 28.954000 ms

loadLabelName ./model/coco_80_labels_list.txt

person @ (209 243 285 507) 0.883131

person @ (477 241 561 523) 0.866942

person @ (110 235 231 536) 0.825886

bus @ (92 129 553 466) 0.703667

person @ (80 354 121 516) 0.326333

loop count = 10 , average run 27.475900 ms

# Finally, out.jpg is written. View it on the board or transfer it to a PC.
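The log above prints nms_threshold = 0.45 and one box per detection. As a rough sketch of what such a threshold usually means, the code below computes the intersection over union (IoU) of two boxes and checks whether one box would be suppressed by another. This is an illustration only: the Box struct and function names are hypothetical, the coordinates are assumed to be (left, top, right, bottom), and the demo's own postprocess.cc may compute the overlap differently.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical box type; coordinates assumed to be (left, top, right, bottom).
struct Box {
  float left, top, right, bottom;
};

inline float box_area(const Box& b) {
  assert(b.right >= b.left && b.bottom >= b.top);
  return (b.right - b.left) * (b.bottom - b.top);
}

// Intersection over union of two axis-aligned boxes, in [0, 1].
inline float iou(const Box& a, const Box& b) {
  float w = std::max(0.0f, std::min(a.right, b.right) - std::max(a.left, b.left));
  float h = std::max(0.0f, std::min(a.bottom, b.bottom) - std::max(a.top, b.top));
  float inter = w * h;
  float uni = box_area(a) + box_area(b) - inter;
  return uni > 0.0f ? inter / uni : 0.0f;
}

// In non-maximum suppression, a lower-scoring candidate is dropped when it
// overlaps an already kept box by more than the threshold.
inline bool suppressed_by(const Box& candidate, const Box& kept, float nms_threshold) {
  return iou(candidate, kept) > nms_threshold;
}
```

With a threshold of 0.45, two boxes that share one third of their combined area (IoU of about 0.33) would both be kept, while near-duplicates of the same object would be merged into the higher-scoring one.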

To run detection on a local video, execute rknn_yolov5_video_demo:

# Usage

./rknn_yolov5_video_demo <rknn_model> <video_path> <video_type 264/265>

# Get a video and convert it to an H.264 or H.265 stream:

# Convert to an H.264 stream

ffmpeg -i xxx.mp4 -vcodec h264 out.h264

# Convert to an H.265 stream

ffmpeg -i xxx.mp4 -vcodec hevc out.hevc

# Run with the default model and video (tested with the program built by build-linux_RK3588.sh and the default test.h264):

cat@lubancat:~/install/rknn_yolov5_demo_Linux$ ./rknn_yolov5_video_demo ./model/RK3588/yolov5s-640-640.rknn ./model/test.h264 264

Loading mode...

sdk version: 1.5.0 (e6fe0c678@2023-05-25T08:09:20) driver version: 0.8.8

model input num: 1, output num: 3

index=0, name=images, n_dims=4, dims=[1, 640, 640, 3], n_elems=1228800, size=1228800, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003922

index=0, name=269, n_dims=4, dims=[1, 255, 80, 80], n_elems=1632000, size=1632000, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=83, scale=0.093136

index=1, name=271, n_dims=4, dims=[1, 255, 40, 40], n_elems=408000, size=408000, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=48, scale=0.089854

index=2, name=273, n_dims=4, dims=[1, 255, 20, 20], n_elems=102000, size=102000, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=46, scale=0.078630

model is NHWC input fmt

model input height=640, width=640, channel=3

app_ctx=0x7fce311b48 decoder=0x55994f00d0

read video size=4473796

0x5599a17320 encoder test start w 1920 h 1080 type 7

input image 1920x1080 stride 1920x1088 format=2560

resize with RGA!

rga_api version 1.9.1_[4]

once run use 41.432000 ms

post process config: box_conf_threshold = 0.25, nms_threshold = 0.45

loadLabelName ./model/coco_80_labels_list.txt

sheep @ (618 934 720 985) 0.620723

bird @ (282 997 339 1036) 0.378076

bird @ (0 891 171 1027) 0.250483

chn 0 size 412362 qp 17

input image 1920x1080 stride 1920x1088 format=2560

resize with RGA!

once run use 27.094000 ms

post process config: box_conf_threshold = 0.25, nms_threshold = 0.45

sheep @ (621 933 717 982) 0.741737

sheep @ (708 909 843 990) 0.605655

bird @ (0 885 168 1026) 0.407036

bird @ (276 993 339 1034) 0.391590

sheep @ (903 909 942 924) 0.293390

chn 0 size 42239 qp 21

input image 1920x1080 stride 1920x1088 format=2560

resize with RGA!

once run use 27.094000 ms

# (output omitted)

reset decoder

waiting finish

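# Finally, out.h264 is generated in the current directory. Play it on the board to check the result.

To test with an RTSP video stream, also use rknn_yolov5_video_demo:

# Usage

./rknn_yolov5_video_demo model/<TARGET_PLATFORM>/yolov5s-640-640.rknn <RTSP_URL> 265

# Test by pushing an RTSP stream locally with ffmpeg. First start an RTSP server, which runs in the background

# Use MediaServer (from the dev_env/rknpu2/examples/3rdparty/zlmediakit directory of the companion examples)

./MediaServer &

# Push the stream with ffmpeg to rtsp://127.0.0.1/live/stream, using the test video test.mp4

ffmpeg -re -i "./model/test.mp4" -vcodec h264 -f rtsp -rtsp_transport tcp rtsp://127.0.0.1/live/stream

# Run the demo against the locally pushed stream rtsp://127.0.0.1/live/stream (for testing only)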

cat@lubancat:~/install/rknn_yolov5_demo_Linux$ ./rknn_yolov5_video_demo ./model/RK3588/yolov5s-640-640.rknn rtsp://127.0.0.1/live/stream 264

Loading mode...

sdk version: 1.5.0 (e6fe0c678@2023-05-25T08:09:20) driver version: 0.8.8

model input num: 1, output num: 3

index=0, name=images, n_dims=4, dims=[1, 640, 640, 3], n_elems=1228800, size=1228800, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003922

index=0, name=269, n_dims=4, dims=[1, 255, 80, 80], n_elems=1632000, size=1632000, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=83, scale=0.093136

index=1, name=271, n_dims=4, dims=[1, 255, 40, 40], n_elems=408000, size=408000, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=48, scale=0.089854

index=2, name=273, n_dims=4, dims=[1, 255, 20, 20], n_elems=102000, size=102000, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=46, scale=0.078630

model is NHWC input fmt

model input height=640, width=640, channel=3

app_ctx=0x7fccb0ba78 decoder=0x55a2e960d0

2023-08-14 09:51:04.516 D rknn_yolov5_video_demo[5094-stamp thread] util.cpp:366 operator() | Stamp thread started

2023-08-14 09:51:04.520 I rknn_yolov5_video_demo[5094-rknn_yolov5_vid] EventPoller.cpp:466 EventPollerPool | EventPoller created size: 8

enter any key to exit

2023-08-14 09:51:04.521 D rknn_yolov5_video_demo[5094-event poller 0] RtspPlayer.cpp:93 play | rtsp://127.0.0.1/live/stream null null 0

2023-08-14 09:51:04.523 D rknn_yolov5_video_demo[5094-event poller 0] RtspPlayer.cpp:488 handleResPAUSE | seekTo(ms):0

2023-08-14 09:51:04.523 W rknn_yolov5_video_demo[5094-event poller 0] RtspPlayer.cpp:689 onPlayResult_l | 0 rtsp play success

2023-08-14 09:51:04.527 D rknn_yolov5_video_demo[5094-event poller 0] MediaSink.cpp:137 emitAllTrackReady | all track ready use 4ms

play success!2023-08-14 09:51:04.527 I rknn_yolov5_video_demo[5094-event poller 0] main_video.cc:462 on_mk_play_event_func | got video track: H264

on_track_frame_out ctx=0x7fccb0ba78

decoder=0x55a2e960d0

on_track_frame_out ctx=0x7fccb0ba78

decoder=0x55a2e960d0

on_track_frame_out ctx=0x7fccb0ba78

decoder=0x55a2e960d0

on_track_frame_out ctx=0x7fccb0ba78

decoder=0x55a2e960d0

on_track_frame_out ctx=0x7fccb0ba78

decoder=0x55a2e960d0

on_track_frame_out ctx=0x7fccb0ba78

decoder=0x55a2e960d0

on_track_frame_out ctx=0x7fccb0ba78

decoder=0x55a2e960d0

on_track_frame_out ctx=0x7fccb0ba78

decoder=0x55a2e960d0

on_track_frame_out ctx=0x7fccb0ba78

decoder=0x55a2e960d0

on_track_frame_out ctx=0x7fccb0ba78

decoder=0x55a2e960d0

0x7f70074ca0 encoder test start w 1920 h 1080 type 7

input image 1920x1080 stride 1920x1088 format=2560

resize with RGA!

rga_api version 1.9.1_[4]

once run use 23.229000 ms

post process config: box_conf_threshold = 0.25, nms_threshold = 0.45

loadLabelName ./model/coco_80_labels_list.txt

sheep @ (618 934 720 985) 0.620723

bird @ (282 997 339 1036) 0.378076

bird @ (0 891 171 1027) 0.250483

chn 0 size 412362 qp 17

input image 1920x1080 stride 1920x1088 format=2560

resize with RGA!

once run use 22.650000 ms

post process config: box_conf_threshold = 0.25, nms_threshold = 0.45

# (output omitted)

input image 1920x1080 stride 1920x1088 format=2560

resize with RGA!

once run use 24.076000 ms

post process config: box_conf_threshold = 0.25, nms_threshold = 0.45

bird @ (399 178 1371 931) 0.555864

chn 0 size 11690 qp 11

decoder run use 257.573000 ms

2023-08-14 09:51:20.579 W rknn_yolov5_video_demo[5094-event poller 0] RtspPlayer.cpp:689 onPlayResult_l | 2 receive rtp timeout

waiting finish

2023-08-14 09:51:23.070 D rknn_yolov5_video_demo[5094-rknn_yolov5_vid] RtspPlayerImp.h:31 ~RtspPlayerImp |

2023-08-14 09:51:23.070 D rknn_yolov5_video_demo[5094-rknn_yolov5_vid] RtspPlayer.cpp:41 ~RtspPlayer |

2023-08-14 09:51:26.105 I rknn_yolov5_video_demo[5094-rknn_yolov5_vid] EventPoller.cpp:98 ~EventPoller | 0x55a2e3f130

2023-08-14 09:51:26.106 I rknn_yolov5_video_demo[5094-rknn_yolov5_vid] EventPoller.cpp:98 ~EventPoller | 0x55a33baa40

2023-08-14 09:51:26.106 I rknn_yolov5_video_demo[5094-rknn_yolov5_vid] EventPoller.cpp:98 ~EventPoller | 0x55a33bafd0

2023-08-14 09:51:26.107 I rknn_yolov5_video_demo[5094-rknn_yolov5_vid] EventPoller.cpp:98 ~EventPoller | 0x55a33bb540

2023-08-14 09:51:26.107 I rknn_yolov5_video_demo[5094-rknn_yolov5_vid] EventPoller.cpp:98 ~EventPoller | 0x55a33bbad0

2023-08-14 09:51:26.108 I rknn_yolov5_video_demo[5094-rknn_yolov5_vid] EventPoller.cpp:98 ~EventPoller | 0x55a33bc060

2023-08-14 09:51:26.108 I rknn_yolov5_video_demo[5094-rknn_yolov5_vid] EventPoller.cpp:98 ~EventPoller | 0x55a33bc5f0

2023-08-14 09:51:26.108 I rknn_yolov5_video_demo[5094-rknn_yolov5_vid] EventPoller.cpp:98 ~EventPoller | 0x55a33bcbd0

2023-08-14 09:51:26.108 I rknn_yolov5_video_demo[5094-rknn_yolov5_vid] logger.cpp:86 ~Logger |

# Press any key to exit. out.h264 is generated in the current directory; play it on the board to check the result.

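5.2.2. Zero-Copy API Example

The test example can be obtained from the examples/rknn_api_demo_Linux directory of the RKNPU project files, or from the tutorial's companion code. It uses MobileNet-v1 for image classification. On Linux there are two demos; the difference is that one uses RGA and the other does not.

1. Get the example

# Get the tutorial's companion examples

git clone https://gitee.com/LubanCat/lubancat_ai_manual_code.git

# Or get it from the rknpu2 GitHub repository (the source file is src/rknn_create_mem_demo.cpp)

# git clone https://github.com/airockchip/rknn-toolkit2

# cd rknn-toolkit2/rknpu2/examples/rknn_api_demo

# Switch to the example directory

cd rknpu2/rknn_api_demo

2. Build the example. This demonstrates building directly on the board (LubanCat-4):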

cat@lubancat:~/rknpu2/rknn_api_demo$ ./build-linux_RK3588.sh

-- The C compiler identification is GNU 10.2.1

-- The CXX compiler identification is GNU 10.2.1

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /usr/bin/aarch64-linux-gnu-gcc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/aarch64-linux-gnu-g++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Configuring done

-- Generating done

-- Build files have been written to: /home/cat/rknpu_test/rknn_api_demo/build/build_linux_aarch64

Scanning dependencies of target rknn_create_mem_with_rga_demo

Scanning dependencies of target rknn_create_mem_demo

[ 50%] Building CXX object CMakeFiles/rknn_create_mem_demo.dir/src/rknn_create_mem_demo.cpp.o

[ 50%] Building CXX object CMakeFiles/rknn_create_mem_with_rga_demo.dir/src/rknn_create_mem_with_rga_demo.cpp.o

[ 75%] Linking CXX executable rknn_create_mem_demo

[100%] Linking CXX executable rknn_create_mem_with_rga_demo

[100%] Built target rknn_create_mem_demo

[100%] Built target rknn_create_mem_with_rga_demo

[ 50%] Built target rknn_create_mem_with_rga_demo

[100%] Built target rknn_create_mem_demo

Install the project...

-- Install configuration: ""

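# (output omitted)

After the build, the program is installed under install/ in the current directory.

3. Test the example

# Test rknn_create_mem_demo under install/rknn_api_demo_Linux in the current directory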

cat@lubancat:~/rknpu2/rknn_api_demo/install/rknn_api_demo_Linux$ ./rknn_create_mem_demo model/RK3588/mobilenet_v1.rknn model/dog_224x224.jpg

rknn_api/rknnrt version: 1.5.0 (e6fe0c678@2023-05-25T08:09:20), driver version: 0.8.8

model input num: 1, output num: 1

input tensors:

index=0, name=input, n_dims=4, dims=[1, 224, 224, 3], n_elems=150528, size=150528, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=0, scale=0.007812

output tensors:

index=0, name=MobilenetV1/Predictions/Reshape_1, n_dims=2, dims=[1, 1001, 0, 0], n_elems=1001, size=1001, fmt=UNDEFINED, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003906

custom string:

Begin perf ...

0: Elapse Time = 2.89ms, FPS = 346.14

---- Top5 ----

0.984375 - 156

0.007812 - 155

0.003906 - 205

0.000000 - 0

0.000000 - 1

# Test rknn_create_mem_with_rga_demo under install/rknn_api_demo_Linux in the current directory

cat@lubancat:~/rknpu_test/rknn_api_demo/install/rknn_api_demo_Linux$ ./rknn_create_mem_with_rga_demo model/RK3588/mobilenet_v1.rknn model/dog_224x224.jpg

rknn_api/rknnrt version: 1.5.0 (e6fe0c678@2023-05-25T08:09:20), driver version: 0.8.8

model input num: 1, output num: 1

input tensors:

index=0, name=input, n_dims=4, dims=[1, 224, 224, 3], n_elems=150528, size=150528, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=0, scale=0.007812

output tensors:

index=0, name=MobilenetV1/Predictions/Reshape_1, n_dims=2, dims=[1, 1001, 0, 0], n_elems=1001, size=1001, fmt=UNDEFINED, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003906

custom string:

rga_api version 1.9.1_[4]

Begin perf ...

0: Elapse Time = 2.87ms, FPS = 349.04

---- Top5 ----

0.984375 - 156

0.007812 - 155

0.003906 - 205

0.000000 - 0

0.000000 - 1

The program prints the five most probable classes and their probabilities. The most probable one is class 156.
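The top-5 selection can be illustrated with a short standalone sketch. This is not the demo's rknn_GetTopN helper; top_n_indices is a hypothetical function that uses std::partial_sort to pick the indices of the n largest scores from a float buffer such as the dequantized output tensor.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper: returns the indices of the n largest scores,
// highest score first. If n exceeds the number of scores, all indices
// are returned. The relative order of equal scores is unspecified.
inline std::vector<std::size_t> top_n_indices(const std::vector<float>& scores,
                                              std::size_t n) {
  assert(!scores.empty());
  n = std::min(n, scores.size());
  std::vector<std::size_t> idx(scores.size());
  std::iota(idx.begin(), idx.end(), std::size_t{0});
  std::partial_sort(idx.begin(), idx.begin() + static_cast<std::ptrdiff_t>(n),
                    idx.end(), [&scores](std::size_t a, std::size_t b) {
                      return scores[a] > scores[b];
                    });
  idx.resize(n);
  return idx;
}
```

Calling top_n_indices(scores, 5) on the 1001-element MobileNet output would yield the class indices to print next to scores[index]; partial sorting avoids ordering the whole buffer when only a few entries are needed.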

4. Source code of the zero-copy API example, taking the version without RGA (rknn_create_mem_demo.cpp) as an example. Only the main function is listed below:

int main(int argc, char* argv[])
{
  // Part of the program is omitted ...

  rknn_context ctx = 0;

  // Call rknn_init to initialize the rknn_context and load the RKNN model
  int model_len = 0;
  unsigned char* model = load_model(model_path, &model_len);
  int ret = rknn_init(&ctx, model, model_len, 0, NULL);
  if (ret < 0) {
    printf("rknn_init fail! ret=%d\n", ret);
    return -1;
  }

  // Call rknn_query to get the SDK version and the model input/output information
  rknn_sdk_version sdk_ver;
  ret = rknn_query(ctx, RKNN_QUERY_SDK_VERSION, &sdk_ver, sizeof(sdk_ver));
  if (ret != RKNN_SUCC) {
    printf("rknn_query fail! ret=%d\n", ret);
    return -1;
  }
  printf("rknn_api/rknnrt version: %s, driver version: %s\n", sdk_ver.api_version, sdk_ver.drv_version);

  // Get Model Input Output Info
  rknn_input_output_num io_num;
  ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));
  if (ret != RKNN_SUCC) {
    printf("rknn_query fail! ret=%d\n", ret);
    return -1;
  }
  printf("model input num: %d, output num: %d\n", io_num.n_input, io_num.n_output);

  // Call rknn_query to get the native input and output tensor attributes
  // and store them in the corresponding rknn_tensor_attr structures
  printf("input tensors:\n");
  rknn_tensor_attr input_attrs[io_num.n_input];
  memset(input_attrs, 0, io_num.n_input * sizeof(rknn_tensor_attr));
  for (uint32_t i = 0; i < io_num.n_input; i++) {
    input_attrs[i].index = i;
    // query info
    ret = rknn_query(ctx, RKNN_QUERY_INPUT_ATTR, &(input_attrs[i]), sizeof(rknn_tensor_attr));
    if (ret < 0) {
      printf("rknn_query fail! ret=%d\n", ret);
      return -1;
    }
    dump_tensor_attr(&input_attrs[i]);
  }

  printf("output tensors:\n");
  rknn_tensor_attr output_attrs[io_num.n_output];
  memset(output_attrs, 0, io_num.n_output * sizeof(rknn_tensor_attr));
  for (uint32_t i = 0; i < io_num.n_output; i++) {
    output_attrs[i].index = i;
    // query info
    ret = rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &(output_attrs[i]), sizeof(rknn_tensor_attr));
    if (ret != RKNN_SUCC) {
      printf("rknn_query fail! ret=%d\n", ret);
      return -1;
    }
    dump_tensor_attr(&output_attrs[i]);
  }

  // Call rknn_query to get the user-defined string stored in the RKNN model
  rknn_custom_string custom_string;
  ret = rknn_query(ctx, RKNN_QUERY_CUSTOM_STRING, &custom_string, sizeof(custom_string));
  if (ret != RKNN_SUCC) {
    printf("rknn_query fail! ret=%d\n", ret);
    return -1;
  }
  printf("custom string: %s\n", custom_string.string);

  unsigned char* input_data = NULL;
  rknn_tensor_type input_type = RKNN_TENSOR_UINT8;
  rknn_tensor_format input_layout = RKNN_TENSOR_NHWC;

  // Load the image as input data
  input_data = load_image(input_path, &input_attrs[0]);
  if (!input_data) {
    return -1;
  }

  // Allocate memory for the input tensor with rknn_create_mem
  rknn_tensor_mem* input_mems[1];
  // The default input type is int8 (normalization and quantization must be done outside).
  // If it is set to uint8, normalization and quantization are fused into the NPU.
  input_attrs[0].type = input_type;
  // The default fmt is NHWC; the NPU only supports NHWC in zero-copy mode
  input_attrs[0].fmt = input_layout;
  input_mems[0] = rknn_create_mem(ctx, input_attrs[0].size_with_stride);

  // Copy input data to input tensor memory
  int width = input_attrs[0].dims[2];
  int stride = input_attrs[0].w_stride;
  if (width == stride) {
    memcpy(input_mems[0]->virt_addr, input_data, width * input_attrs[0].dims[1] * input_attrs[0].dims[3]);
  } else {
    int height = input_attrs[0].dims[1];
    int channel = input_attrs[0].dims[3];
    // copy from src to dst with stride
    uint8_t* src_ptr = input_data;
    uint8_t* dst_ptr = (uint8_t*)input_mems[0]->virt_addr;
    // width-channel elements
    int src_wc_elems = width * channel;
    int dst_wc_elems = stride * channel;
    for (int h = 0; h < height; ++h) {
      memcpy(dst_ptr, src_ptr, src_wc_elems);
      src_ptr += src_wc_elems;
      dst_ptr += dst_wc_elems;
    }
  }

  // Allocate memory for the output tensors with rknn_create_mem
  rknn_tensor_mem* output_mems[io_num.n_output];
  for (uint32_t i = 0; i < io_num.n_output; ++i) {
    // The default output type depends on the model; computing top5 here requires float32,
    // so allocate a float32 output tensor
    int output_size = output_attrs[i].n_elems * sizeof(float);
    output_mems[i] = rknn_create_mem(ctx, output_size);
  }

  // Bind the input tensor memory with rknn_set_io_mem for the NPU to use
  ret = rknn_set_io_mem(ctx, input_mems[0], &input_attrs[0]);
  if (ret < 0) {
    printf("rknn_set_io_mem fail! ret=%d\n", ret);
    return -1;
  }

  // Bind the output tensor memory with rknn_set_io_mem for the NPU to use
  for (uint32_t i = 0; i < io_num.n_output; ++i) {
    // The default output type depends on the model; computing top5 here requires float32
    output_attrs[i].type = RKNN_TENSOR_FLOAT32;
    // set output memory and attribute
    ret = rknn_set_io_mem(ctx, output_mems[i], &output_attrs[i]);
    if (ret < 0) {
      printf("rknn_set_io_mem fail! ret=%d\n", ret);
      return -1;
    }
  }

  // Call rknn_run; each call runs one model inference
  printf("Begin perf ...\n");
  for (int i = 0; i < loop_count; ++i) {
    int64_t start_us = getCurrentTimeUs();
    ret = rknn_run(ctx, NULL);
    int64_t elapse_us = getCurrentTimeUs() - start_us;
    if (ret < 0) {
      printf("rknn run error %d\n", ret);
      return -1;
    }
    printf("%4d: Elapse Time = %.2fms, FPS = %.2f\n", i, elapse_us / 1000.f, 1000.f * 1000.f / elapse_us);
  }

  // Post-process the result: get the five most probable classes and their probabilities, then print them
  uint32_t topNum = 5;
  for (uint32_t i = 0; i < io_num.n_output; i++) {
    uint32_t MaxClass[topNum];
    float fMaxProb[topNum];
    float* buffer = (float*)output_mems[i]->virt_addr;
    uint32_t sz = output_attrs[i].n_elems;
    int top_count = sz > topNum ? topNum : sz;

    rknn_GetTopN(buffer, fMaxProb, MaxClass, sz, topNum);

    printf("---- Top%d ----\n", top_count);
    for (int j = 0; j < top_count; j++) {
      printf("%8.6f - %d\n", fMaxProb[j], MaxClass[j]);
    }
  }

  // Destroy the input and output tensor memory with rknn_destroy_mem
  rknn_destroy_mem(ctx, input_mems[0]);
  for (uint32_t i = 0; i < io_num.n_output; ++i) {
    rknn_destroy_mem(ctx, output_mems[i]);
  }

  // Release the rknn_context and its associated resources
  rknn_destroy(ctx);

  if (input_data != nullptr) {
    free(input_data);
  }
  if (model != nullptr) {
    free(model);
  }

  return 0;
}
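The strided copy of the input image in the listing above can be isolated into a small standalone helper, which makes the row layout easier to see and to test without an NPU. The sketch below is an illustration only: copy_rows_with_stride is a hypothetical name, and it assumes a tightly packed source image whose rows are copied into a destination buffer with a larger row pitch, as happens when w_stride is greater than the image width.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical helper (not an RKNN API call).
// Copies `height` rows of `row_bytes` bytes each from a tightly packed source
// into a destination whose rows start `dst_stride_bytes` apart. Padding bytes
// at the end of each destination row are left untouched.
inline void copy_rows_with_stride(std::uint8_t* dst, const std::uint8_t* src,
                                  std::size_t height, std::size_t row_bytes,
                                  std::size_t dst_stride_bytes) {
  assert(dst_stride_bytes >= row_bytes);
  if (dst_stride_bytes == row_bytes) {
    // No padding: the whole image is contiguous and can be copied at once.
    std::memcpy(dst, src, height * row_bytes);
    return;
  }
  for (std::size_t h = 0; h < height; ++h) {
    std::memcpy(dst + h * dst_stride_bytes, src + h * row_bytes, row_bytes);
  }
}
```

For an NHWC uint8 tensor, row_bytes would be width * channel and dst_stride_bytes would be w_stride * channel, matching src_wc_elems and dst_wc_elems in the listing.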

5.3. Android平台测试¶

在安卓平台上运行RKNN模型也是类似的,下面将参考RKNPU2例程,进行简单测试。

重要

鲁班猫烧录安卓镜像请参考下 安卓教程 , 教程测试使用lubancat-4,android 12镜像。

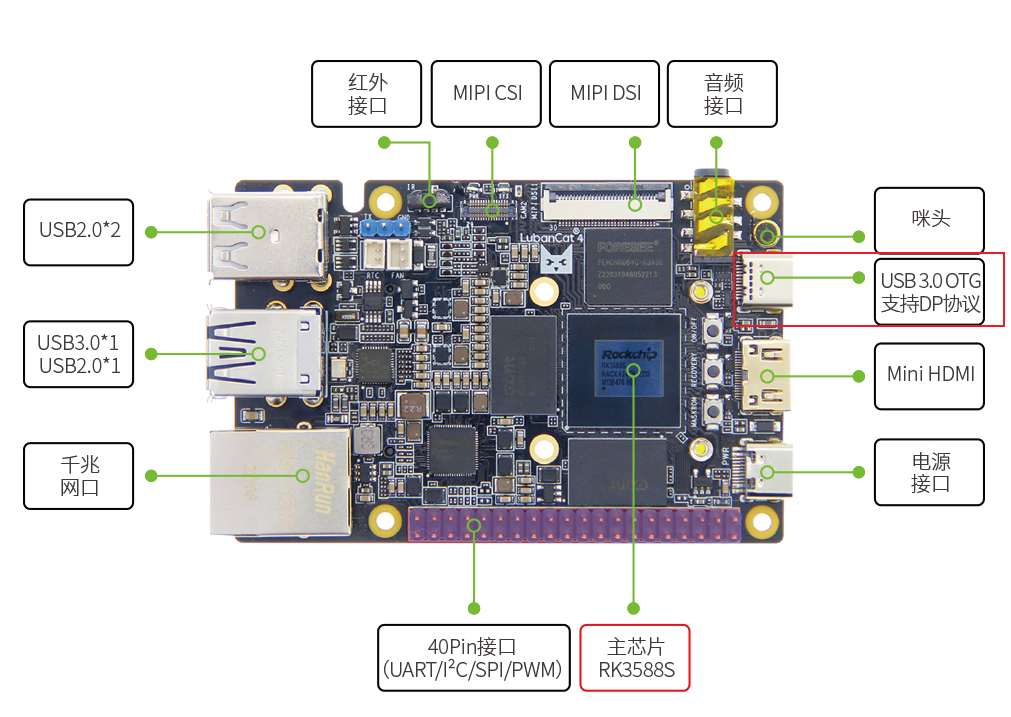

启动系统后,我们需要通过adb连接板卡,具体连接的usb接口请参考 快速使用手册 的硬件说明。 下面以lubancat-4为例(鲁班猫RK系列其他板卡类似),我们使用usb-typec线连接板子usb3.0 OTG接口,另外一端连接到PC电脑:

然后在PC电脑(ubuntu系统)上使用adb连接设备:

root@YH-LONG:~# adb devices

* daemon not running; starting now at tcp:5037

* daemon started successfully

List of devices attached

7fbff6f219991594 device

5.3.1. rknn_yolov5_demo例程测试¶

1、拉取例程和获取编译器

# 获取rknpu2

git clone https://github.com/rockchip-linux/rknpu2.git

cd rknpu2-master/examples/rknn_yolov5_demo

# 最新的rknpu2仓库是https://github.com/airockchip/rknn-toolkit2/tree/master/rknpu2

# 或者从教程配套例程

#git clone https://gitee.com/LubanCat/lubancat_ai_manual_code.git

#cd dev_env/rknpu2/rknn_yolov5_demo

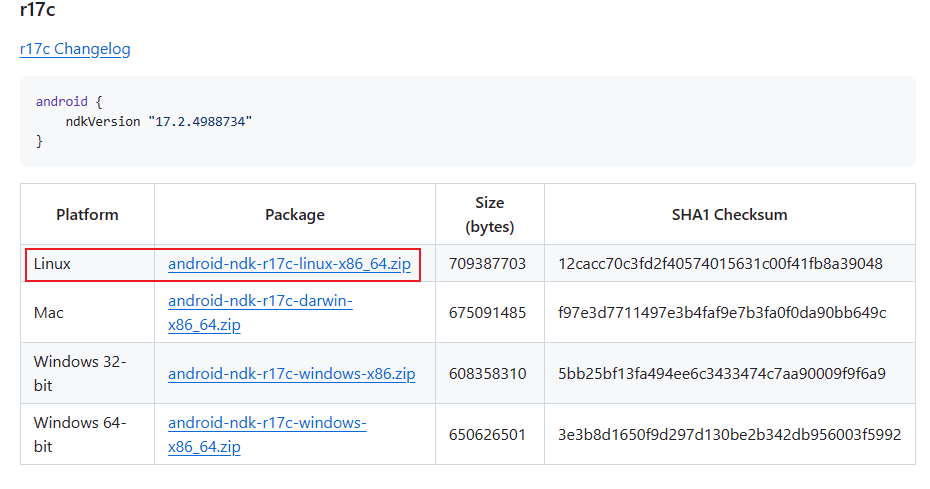

然后从 https://developer.android.google.cn/ndk/downloads?hl=zh-cn 获取NDK工具, 教程测试rknpu2建议使用的NDK版本(android-ndk-r17c,Linux x86 64位),NDK也可以从配套 网盘资源 (提取码hslu)获取, 最终获取到的zip文件解压到相关目录下。

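2. Build the rknn_yolov5_demo example

# Switch to examples/rknn_yolov5_demo in the rknpu2 project, if you are not already there

cd rknpu2/examples/rknn_yolov5_demo

# Or use the tutorial's companion examples

#cd dev_env/rknpu2/rknn_yolov5_demo

# Then edit ANDROID_NDK_PATH in the build script build-android_RK3588.sh (this test targets Android on LubanCat-4),

# pointing it at the directory where you stored the android-ndk-r17c obtained above

ANDROID_NDK_PATH=/mnt/d/rk/android-ndk-r17c

# Run the script to build the example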

./build-android_RK3588.sh

-- Check for working C compiler: /mnt/d/rk/android-ndk-r17c/toolchains/llvm/prebuilt/linux-x86_64/bin/clang

-- Check for working C compiler: /mnt/d/rk/android-ndk-r17c/toolchains/llvm/prebuilt/linux-x86_64/bin/clang -- works

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Detecting C compile features

-- Detecting C compile features - done

-- Check for working CXX compiler: /mnt/d/rk/android-ndk-r17c/toolchains/llvm/prebuilt/linux-x86_64/bin/clang++

-- Check for working CXX compiler: /mnt/d/rk/android-ndk-r17c/toolchains/llvm/prebuilt/linux-x86_64/bin/clang++ -- works

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Found OpenCV: /mnt/d/rk/rknpu2-1.5.2/examples/3rdparty/opencv/OpenCV-android-sdk (found version "3.4.5")

-- Configuring done

-- Generating done

-- Build files have been written to: /mnt/d/rk/rknpu2-1.5.2/examples/rknn_yolov5_demo/build/build_android_v8a

Scanning dependencies of target rknn_yolov5_video_demo

Scanning dependencies of target rknn_yolov5_demo

[ 11%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/src/main_video.cc.o

[ 22%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/src/postprocess.cc.o

[ 33%] Building CXX object CMakeFiles/rknn_yolov5_video_demo.dir/utils/mpp_decoder.cpp.o

# (output omitted)

[ 88%] Built target rknn_yolov5_video_demo

[100%] Linking CXX executable rknn_yolov5_demo

[100%] Built target rknn_yolov5_demo

[ 66%] Built target rknn_yolov5_video_demo

[100%] Built target rknn_yolov5_demo

Install the project...

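# (output omitted)

# The built program is installed under install in the current directory

3. Transfer the program to the board

# Push the example built above to /data

adb root

adb push install/rknn_yolov5_demo_Android /data

# Open a shell on the board and switch to the example directory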

adb shell

rk3588s_lubancat_4_hdmi:/# cd /data/rknn_yolov5_demo_Android

rk3588s_lubancat_4_hdmi:/data/rknn_yolov5_demo_Android#

# Then set the library search path so that the libraries we pushed are used

rk3588s_lubancat_4_hdmi:/data/rknn_yolov5_demo_Android# export LD_LIBRARY_PATH=./lib

# Make rknn_yolov5_demo executable, then run it. Usage: ./rknn_yolov5_demo <rknn model> <jpg>

rk3588s_lubancat_4_hdmi:/data/rknn_yolov5_demo_Android# chmod +x rknn_yolov5_demo

rk3588s_lubancat_4_hdmi:/data/rknn_yolov5_demo_Android# ./rknn_yolov5_demo ./model/RK3588/yolov5s-640-640.rknn ./model/bus.jpg

model input num: 1, output num: 3

index=0, name=images, n_dims=4, dims=[1, 640, 640, 3], n_elems=1228800, size=1228800, w_stride = 640, size_with_stride=1228800, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003922

index=0, name=output, n_dims=4, dims=[1, 255, 80, 80], n_elems=1632000, size=1632000, w_stride = 0, size_with_stride=1638400, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003860

index=1, name=283, n_dims=4, dims=[1, 255, 40, 40], n_elems=408000, size=408000, w_stride = 0, size_with_stride=491520, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003922

index=2, name=285, n_dims=4, dims=[1, 255, 20, 20], n_elems=102000, size=102000, w_stride = 0, size_with_stride=163840, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003915

model is NHWC input fmt

model input height=640, width=640, channel=3

once run use 31.285000 ms

loadLabelName ./model/coco_80_labels_list.txt

person @ (209 244 286 506) 0.884139

person @ (478 238 559 526) 0.867678

person @ (110 238 230 534) 0.824685

bus @ (94 129 553 468) 0.705055

person @ (79 354 122 516) 0.339254

loop count = 10 , average run 29.712800 ms

# The result is saved in out.jpg. Leave the shell with exit, then pull the file to the PC with adb to view it

adb pull /data/rknn_yolov5_demo_Android/out.jpg ./

/data/rknn_yolov5_demo_Android/out.jpg: 1 file pulled, 0 skipped. 25.3 MB/s (190629 bytes in 0.007s)

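The test above set the library search path explicitly. If you use the libraries installed on the system by default, you may need to update the runtime library on the board (replace /vendor/lib64/librknnrt.so on Android) as well as rknn_server, so that the runtime library version matches the version of RKNN-Toolkit2 used to convert the RKNN model.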