6. RKLLM

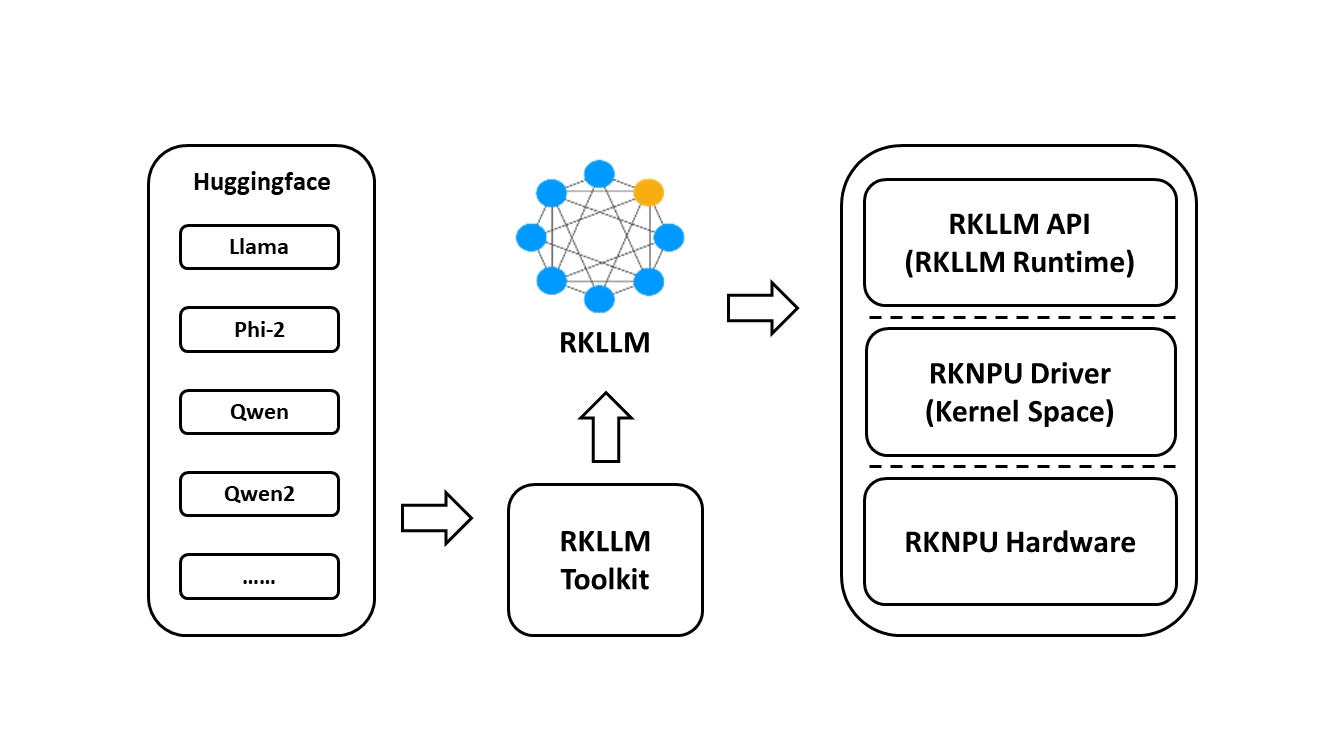

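The RKLLM software stack helps users quickly deploy large language models (LLMs) to Rockchip chips.

To run a model on the RKNPU, first install RKLLM-Toolkit on a computer and convert the trained model to the RKLLM format, then run inference on the board through the RKLLM C API.

RKLLM-Toolkit is a software development kit for converting and quantizing models on a PC. RKLLM Runtime provides a C/C++ programming interface for the Rockchip NPU platform, which helps users deploy RKLLM models and speeds up the development of LLM applications.

RKLLM development has two main steps: model conversion and on-board deployment.

Model conversion uses RKLLM-Toolkit to convert a pre-trained large language model to the RKLLM format.

On-board deployment calls the RKLLM Runtime library to load the RKLLM model onto the Rockchip NPU platform and then run inference and related operations.

RKLLM supports the RK3562, RK3576, and RK3588 hardware platforms; use a LubanCat-3/4/5 series board.

Important

This tutorial was tested with RKLLM-Toolkit 1.2.1 on a lubancat-4 board running Ubuntu. The files used in the tests come from the companion examples.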

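6.1. RKLLM-Toolkit

RKLLM-Toolkit is a development kit for quantizing and converting large language models on a computer. Its interfaces make model conversion and quantization straightforward.

6.1.1. Installing RKLLM-Toolkit

Clone the RKLLM source code; the directory layout is described below:

# Clone the source code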

git clone https://github.com/airockchip/rknn-llm.git

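# Directory layout

.
├── benchmark.md    # Performance results for the tested models
├── CHANGELOG.md    # Changelog
├── doc             # RKLLM user manual
├── examples        # Model conversion examples
├── LICENSE
├── README.md
├── res
├── rkllm-runtime   # Libraries and examples for on-board deployment
├── rkllm-toolkit   # rkllm-toolkit package
├── rknpu-driver    # RKNPU driver
└── scripts         # Scripts that fix the CPU, DDR, and NPU frequencies

8 directories, 4 files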

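Use conda to create an rkllm1.2.1 environment (for installing conda, see the earlier chapter):

# Create the RKLLM-Toolkit environment

conda create -n rkllm1.2.1 python=3.10

conda activate rkllm1.2.1

# Change to the rkllm-toolkit directory of the repository cloned above

cd rknn-llm/rkllm-toolkit/

# Install rkllm_toolkit (adjust the file name to your version); the dependencies required by RKLLM-Toolkit are downloaded automatically.

(rkllm1.2.1) llh@llh:/xxx/rknn-llm/rkllm-toolkit$ pip3 install rkllm_toolkit-1.2.1-cp310-cp310-linux_x86_64.whl

Run a quick check of the installed RKLLM-Toolkit; normally the import prints no errors: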

(rkllm1.2.1) llh@llh:/xxx$ python3

Python 3.10.18 (main, Jun 5 2025, 13:14:17) [GCC 11.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from rkllm.api import RKLLM

>>>
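The same check can be scripted, for example in a setup script. The sketch below only tests whether a top-level package named rkllm is discoverable in the active environment; it does not import the toolkit or verify its version, and the helper name rkllm_toolkit_installed is made up for this illustration.

```python
import importlib.util


def rkllm_toolkit_installed(package: str = "rkllm") -> bool:
    """Return True if the given top-level package can be found in this environment."""
    # find_spec returns None for a missing top-level package instead of raising.
    return importlib.util.find_spec(package) is not None


if rkllm_toolkit_installed():
    print("rkllm package found")
else:
    print("rkllm package not found: activate the conda environment and reinstall the wheel")
```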

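6.1.2. Testing RKLLM-Toolkit

RKLLM-Toolkit provides model conversion and quantization: it converts large language models in Hugging Face or GGUF format to RKLLM models, which are then run on the board through the RKLLM Runtime interface.

Currently supported models include LLAMA models, TinyLLAMA models, Qwen2/Qwen2.5/Qwen3, Phi2/Phi3, ChatGLM3-6B, Gemma2/Gemma3, InternLM2 models, MiniCPM3/MiniCPM4, TeleChat2, Qwen2-VL-2B-Instruct/Qwen2-VL-7B-Instruct/Qwen2.5-VL-3B-Instruct, MiniCPM-V-2_6, DeepSeek-R1-Distill, Janus-Pro-1B, InternVL2-1B, SmolVLM, and RWKV7. For the latest support status, see rknn-llm.

This section runs a simple test with the Qwen-1_8B-Chat model, converting it directly to an RKLLM model with RKLLM-Toolkit. Alternatively, quantize the model with AutoGPTQ first and then convert it. If you have modified the model structure, RKLLM-Toolkit also supports custom model conversion; see the rknn-llm documentation for details.

# The example uses the Qwen-1_8B model; download the model first (or get it from the network drive)

git lfs install

git clone https://huggingface.co/Qwen/Qwen-1_8B-Chat

# Or

git clone https://hf-mirror.com/Qwen/Qwen-1_8B-Chat

# The model is also available from modelscope

git clone https://www.modelscope.cn/qwen/Qwen-1_8B-Chat.git

The model export program export_rkllm.py is shown below (see the companion examples):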

from rkllm.api import RKLLM
import argparse

if __name__ == "__main__":
    # Named "parser" so that the argparse module is not shadowed
    parser = argparse.ArgumentParser()
    parser.add_argument('--modelpath', type=str, default='Qwen-1_8B-Chat', help='model path', required=True)
    parser.add_argument('--target-platform', type=str, default='rk3588', help='target platform', required=False)
    parser.add_argument("--dataset_path", type=str, help="calibration data path")
    parser.add_argument('--num_npu_core', type=int, default=3, help='npu core num(rk3588:0-3, rk3576:0-2)', required=False)
    parser.add_argument('--optimization_level', type=int, default=1, help='optimization_level(0 or 1)', required=False)
    parser.add_argument('--quantized_dtype', type=str, default='w8a8', help='quantized dtype(rk3588:w8a8/w8a8_g128/w8a8_g256/w8a8_g512....)', required=False)
    parser.add_argument('--quantized_algorithm', type=str, default='normal', help='quantized algorithm(normal/grq/gdq)', required=False)
    parser.add_argument('--device', type=str, default='cpu', help='device(cpu/cuda)', required=False)
    parser.add_argument('--savepath', type=str, default='Qwen-1_8B-Chat.rkllm', help='save path', required=False)
    args = parser.parse_args()

    qparams = None  # Use extra_qparams

    # init
    llm = RKLLM()

    # Load model
    ret = llm.load_huggingface(model=args.modelpath, model_lora=None, device=args.device, dtype="float32", custom_config=None, load_weight=True)
    # ret = llm.load_gguf(model=args.modelpath)
    if ret != 0:
        print('Load model failed!')
        exit(ret)

    # Build model
    ret = llm.build(do_quantization=True, optimization_level=args.optimization_level, quantized_dtype=args.quantized_dtype,
                    quantized_algorithm=args.quantized_algorithm, target_platform=args.target_platform,
                    num_npu_core=args.num_npu_core, extra_qparams=qparams, dataset=args.dataset_path)
    if ret != 0:
        print('Build model failed!')
        exit(ret)

    # Export rkllm model (the path comes from --savepath)
    ret = llm.export_rkllm(args.savepath)
    if ret != 0:
        print('Export model failed!')
        exit(ret)

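1. Call the interface provided by RKLLM-Toolkit to create an RKLLM object, then call its load_huggingface() function to load the model;

2. Call build() to build the RKLLM model. Set do_quantization to choose whether to quantize, set the target platform, and so on; see the rknn-llm documentation for the full parameter list;

3. Finally, call export_rkllm() to export the model as an RKLLM model file.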
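The three steps share one pattern: each call returns a status code, and a non-zero code means failure. A minimal sketch of that pattern as a reusable helper follows. The names run_steps, convert, and ConversionError are invented for this illustration and are not part of RKLLM-Toolkit; the keyword arguments are the ones used in export_rkllm.py, and llm is expected to be an RKLLM() instance.

```python
from typing import Callable, Iterable, Optional, Tuple


class ConversionError(RuntimeError):
    """Raised when a conversion step returns a non-zero status code."""


def run_steps(steps: Iterable[Tuple[str, Callable[[], int]]]) -> None:
    """Run the steps in order and stop at the first non-zero return code."""
    for name, step in steps:
        ret = step()
        if ret != 0:
            raise ConversionError(f"{name} failed with code {ret}")


def convert(llm, model_path: str, save_path: str,
            target_platform: str = "rk3588", num_npu_core: int = 3,
            dataset: Optional[str] = None) -> None:
    """Load, build, and export with the same calls as export_rkllm.py."""
    run_steps([
        ("load", lambda: llm.load_huggingface(
            model=model_path, model_lora=None, device="cpu",
            dtype="float32", custom_config=None, load_weight=True)),
        ("build", lambda: llm.build(
            do_quantization=True, optimization_level=1, quantized_dtype="w8a8",
            quantized_algorithm="normal", target_platform=target_platform,
            num_npu_core=num_npu_core, extra_qparams=None, dataset=dataset)),
        ("export", lambda: llm.export_rkllm(save_path)),
    ])
```

With the toolkit installed, a call would look like convert(RKLLM(), "./Qwen-1_8B-Chat", "Qwen-1_8B-Chat.rkllm"). Raising an exception instead of calling exit() makes the helper easier to reuse from other scripts.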

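Before running export_rkllm.py, you can prepare calibration samples for quantization that match the model and its use case: run generate_quant_data.py to generate a calibration dataset, which is then used when the model is quantized. If you have no such data, leave the dataset parameter of build() unset.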
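As a rough illustration of what a calibration file might contain, the sketch below writes prompt/answer pairs to a JSON file. The layout, a JSON list of objects with "input" and "target" keys, is an assumption made for this example; check the rknn-llm documentation and generate_quant_data.py for the format your toolkit version expects. The function name and the sample text are likewise illustrative.

```python
import json
from pathlib import Path
from typing import Iterable, Tuple


def write_calibration_file(samples: Iterable[Tuple[str, str]], path: str) -> int:
    """Write (prompt, answer) pairs as a JSON list and return the number of records.

    Assumed layout: [{"input": "<prompt>", "target": "<answer>"}, ...]
    """
    records = [{"input": prompt, "target": answer} for prompt, answer in samples]
    # ensure_ascii=False keeps Chinese text readable in the output file.
    Path(path).write_text(json.dumps(records, ensure_ascii=False, indent=2), encoding="utf-8")
    return len(records)


count = write_calibration_file(
    [("Translate into English: 你好", "Hello")],
    "data_quant_example.json",
)
print(f"wrote {count} calibration record(s)")
```

The resulting path would then be passed to export_rkllm.py through --dataset_path.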

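Run export_rkllm.py to convert the model to an RKLLM model:

# View the program options with: python3 export_rkllm.py -h

# To set more parameters, modify the program; see the Rockchip_RKLLM_SDK_CN_xxx.pdf document for parameter details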

options:

-h, --help show this help message and exit

--modelpath MODELPATH

model path

--target-platform TARGET_PLATFORM

target platform

--dataset_path DATASET_PATH

calibration data path

--num_npu_core NUM_NPU_CORE

npu core num(rk3588:0-3, rk3576:0-2)

--optimization_level OPTIMIZATION_LEVEL

optimization_level(0 or 1)

--quantized_dtype QUANTIZED_DTYPE

quantized dtype(rk3588:w8a8/w8a8_g128/w8a8_g256/w8a8_g512...)

--quantized_algorithm QUANTIZED_ALGORITHM

quantized algorithm(normal/grq/gdq)

--device DEVICE device(cpu/cuda)

--savepath SAVEPATH save path

# The example targets lubancat-4: specify the model path, set the target platform to rk3588, use 3 NPU cores for inference, and so on

(rkllm1.2.1) llh@anhao:/xxx$ python3 export_rkllm.py --modelpath ./Qwen-1_8B-Chat \

--target-platform rk3588 --device cuda --num_npu_core 3

INFO: rkllm-toolkit version: 1.2.1

# output omitted .............

Loading checkpoint shards: 100%|████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 18.29it/s]

Building model: 100%|███████████████████████████████████████████████████████████████████████████████| 272/272 [00:13<00:00, 20.90it/s]

Optimizing model: 100%|█████████████████████████████████████████████████████████████████████████████| 24/24 [00:04<00:00, 5.15it/s]

INFO: Setting token_id of bos to 151643

INFO: Setting token_id of eos to 151643

INFO: Setting token_id of unk to 151643

Converting model: 100%|████████████████████████████████████████████| 195/195 [00:00<00:00, 3109845.17it/s]

INFO: Setting max_context_limit to 4096

INFO: Exporting the model, please wait ....

[=================================================>] 369/369 (100%)

INFO: Model has been saved to Qwen-1_8B-Chat.rkllm!

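After the conversion succeeds, the file Qwen-1_8B-Chat.rkllm is generated in the current directory. Copy it to the board's system for the deployment test later.

The Qwen-1_8B-Chat.rkllm used in this tutorial is also available from the network drive (extraction code: hslu), under 鲁班猫->1-野火开源图书_教程文档->AI教程相关源文件->rkllm.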
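When converting for a different board, it can be convenient to check the platform name and core count before starting the conversion. The sketch below takes the platform list from the supported hardware named in this chapter and the core ranges from the --num_npu_core help text; no range is given there for RK3562, so that platform's core count is not checked. The function name check_export_args is hypothetical.

```python
SUPPORTED_PLATFORMS = ("rk3562", "rk3576", "rk3588")

# Upper bounds copied from the help text: "rk3588:0-3, rk3576:0-2".
MAX_NPU_CORES = {"rk3588": 3, "rk3576": 2}


def check_export_args(target_platform: str, num_npu_core: int) -> str:
    """Validate the arguments and return the normalized platform name."""
    platform = target_platform.lower()
    if platform not in SUPPORTED_PLATFORMS:
        raise ValueError(f"unsupported platform: {target_platform}")
    limit = MAX_NPU_CORES.get(platform)
    if limit is not None and not 0 <= num_npu_core <= limit:
        raise ValueError(f"{platform} accepts num_npu_core in 0..{limit}, got {num_npu_core}")
    return platform


print(check_export_args("rk3588", 3))
```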

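6.2. RKLLM Runtime

The RKLLM Runtime library is the runtime for models produced by RKLLM-Toolkit; it mainly provides operations such as loading RKLLM models and running inference.

6.2.1. Updating the kernel driver

RKLLM deployment requires a fairly recent NPU kernel driver; the user manual calls for v0.9.8 or later. On LubanCat boards, flashing the latest image from the network drive (dated after 20240620) is enough.

# Run the following command on the board to query the NPU driver version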

cat /sys/kernel/debug/rknpu/version
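The version comparison can also be automated. The sketch below extracts the first x.y.z number from the text printed by the command above and compares it with the 0.9.8 minimum. The sample string "RKNPU driver: v0.9.8" is an assumed output format used only for illustration, and the function names are hypothetical.

```python
import re
from typing import Tuple

MIN_DRIVER_VERSION = (0, 9, 8)  # minimum version stated in this section


def parse_driver_version(text: str) -> Tuple[int, ...]:
    """Extract the first x.y.z version number found in the text."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.groups())


def driver_is_new_enough(text: str, minimum: Tuple[int, ...] = MIN_DRIVER_VERSION) -> bool:
    """Compare versions as integer tuples, so 0.10.0 ranks above 0.9.8."""
    return parse_driver_version(text) >= minimum


sample = "RKNPU driver: v0.9.8"  # assumed format; paste the real output here
print(driver_is_new_enough(sample))
```

Comparing integer tuples rather than strings avoids ranking "0.10.0" below "0.9.8".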

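If an older image is flashed, you can update the kernel deb package or build and install a kernel deb yourself.

# Reference commands for updating the kernel; the tutorial recommends flashing the latest image instead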

sudo apt update

sudo apt install linux-image-5.10.160

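6.2.2. RKLLM model inference example

Get the RKLLM model file on the board, or copy the file obtained earlier to the board. The tutorial compiles directly on the board; for cross-compilation, get a cross compiler and set its path, and the remaining steps are similar.

# Clone the companion example source code (the examples may lag behind the latest release)

git clone https://gitee.com/LubanCat/lubancat_ai_manual_code.git

# Change to the deploy directory of the example program

cd lubancat_ai_manual_code/dev_env/rkllm/Qwen-1_8B-Chat-Demo/deploy

The deployment example is shown below (excerpt):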

// omitted ..........................

//#define SYSTEM_PROMPT "<|im_start|>system \nYou are a helpful assistant. <|im_end|>"
#define SYSTEM_PROMPT "<|im_start|>system \nYou are a translator. <|im_end|>"
#define PROMPT_PREFIX "<|im_start|>user \n"
#define PROMPT_POSTFIX "<|im_end|><|im_start|>assistant"

// omitted ..........................

int main(int argc, char **argv)
{
    if (argc < 4) {
        std::cerr << "Usage: " << argv[0] << " model_path max_new_tokens max_context_len\n";
        return 1;
    }

    signal(SIGINT, exit_handler);
    printf("rkllm init start\n");

    // Create the default parameters, then set the model path and sampling parameters
    RKLLMParam param = rkllm_createDefaultParam();
    param.model_path = argv[1];
    param.top_k = 1;
    param.top_p = 0.95;
    param.temperature = 0.8;
    param.repeat_penalty = 1.1;
    param.frequency_penalty = 0.0;
    param.presence_penalty = 0.0;
    param.max_new_tokens = std::atoi(argv[2]);
    param.max_context_len = std::atoi(argv[3]);
    param.skip_special_token = true;
    param.extend_param.base_domain_id = 0;
    param.extend_param.embed_flash = 1;

    // Initialize the RKLLM model
    int ret = rkllm_init(&llmHandle, &param, callback);
    if (ret == 0){
        printf("rkllm init success\n");
    } else {
        printf("rkllm init failed\n");
        exit_handler(-1);
    }

    vector<string> pre_input;
    pre_input.push_back("把这句话翻译成英文: 现有一笼子,里面有鸡和兔子若干只,数一数,共有头14个,腿38条,求鸡和兔子各有多少只?");
    // Adjacent string literals are concatenated, so the long prompt can span several source lines
    pre_input.push_back("把这句话翻译成中文: Knowledge can be acquired from many sources. These include books, teachers and practical experience, "
                        "and each has its own advantages. "
                        "The knowledge we gain from books and formal education enables us to learn about things that we have no opportunity to experience in daily life. "
                        "We can also develop our analytical skills and learn how to view and interpret the world around us in different ways.");

    cout << "\n**********************可输入以下问题对应序号获取回答/或自定义输入********************\n"
         << endl;
    for (int i = 0; i < (int)pre_input.size(); i++)
    {
        cout << "[" << i << "] " << pre_input[i] << endl;
    }
    cout << "\n*************************************************************************\n"
         << endl;

    RKLLMInput rkllm_input;
    memset(&rkllm_input, 0, sizeof(RKLLMInput)); // Zero-initialize the whole structure

    // Initialize the inference parameter structure
    RKLLMInferParam rkllm_infer_params;
    memset(&rkllm_infer_params, 0, sizeof(RKLLMInferParam)); // Zero-initialize the whole structure
    rkllm_infer_params.mode = RKLLM_INFER_GENERATE;

    // By default, the chat operates in single-turn mode (no context retention)
    // 0 means no history is retained, each query is independent
    rkllm_infer_params.keep_history = 0;

    //The model has a built-in chat template by default, which defines how prompts are formatted
    //for conversation. Users can modify this template using this function to customize the
    //system prompt, prefix, and postfix according to their needs.
    rkllm_set_chat_template(llmHandle, SYSTEM_PROMPT, PROMPT_PREFIX, PROMPT_POSTFIX);

    while (true)
    {
        std::string input_str;
        printf("\n");
        printf("user: ");
        std::getline(std::cin, input_str);
        if (input_str == "exit")
        {
            break;
        }
        if (input_str == "clear")
        {
            ret = rkllm_clear_kv_cache(llmHandle, 1, nullptr, nullptr);
            if (ret != 0)
            {
                printf("clear kv cache failed!\n");
            }
            continue;
        }
        // A numeric input selects the preset question with that index
        for (int i = 0; i < (int)pre_input.size(); i++)
        {
            if (input_str == to_string(i))
            {
                input_str = pre_input[i];
                cout << input_str << endl;
            }
        }
        rkllm_input.input_type = RKLLM_INPUT_PROMPT;
        rkllm_input.role = "user";
        rkllm_input.prompt_input = (char *)input_str.c_str();
        printf("robot: ");

        // For ordinary inference, set rkllm_infer_mode to RKLLM_INFER_GENERATE or leave the parameter unset
        rkllm_run(llmHandle, &rkllm_input, &rkllm_infer_params, NULL);
    }

    // Release the model and its resources
    rkllm_destroy(llmHandle);

    return 0;
}

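The overall call flow for on-board RKLLM inference:

1. Define the callback function callback();

2. Define the RKLLM model parameter structure RKLLMParam and set the sampling parameters, inference parameters, chat template, and so on; see the rknn-llm documentation for a detailed explanation of each parameter;

3. Call rkllm_init() to initialize the RKLLM model;

4. Call rkllm_run() to run inference; the callback function callback() processes the results that the model streams back in real time;

5. Finally, call rkllm_destroy() to release the RKLLM model and its resources.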
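To make the chat template in step 2 concrete, the sketch below shows how the three strings passed to rkllm_set_chat_template() would wrap a user message if they are simply concatenated around it. That concatenation order is an assumption for illustration; this chapter does not document how the runtime assembles the final prompt. The helper name render_prompt is hypothetical.

```python
SYSTEM_PROMPT = "<|im_start|>system \nYou are a translator. <|im_end|>"
PROMPT_PREFIX = "<|im_start|>user \n"
PROMPT_POSTFIX = "<|im_end|><|im_start|>assistant"


def render_prompt(user_text: str,
                  system: str = SYSTEM_PROMPT,
                  prefix: str = PROMPT_PREFIX,
                  postfix: str = PROMPT_POSTFIX) -> str:
    """Assumed layout: system prompt, then prefix + user text + postfix."""
    return f"{system}{prefix}{user_text}{postfix}"


# repr() makes the embedded newlines visible.
print(repr(render_prompt("Translate into English: 你好")))
```

Seen this way, changing SYSTEM_PROMPT changes the role the model is asked to play, while the prefix and postfix mark where the user turn begins and ends.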

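If you are not compiling on the board, edit build-linux.sh in the Qwen-1_8B-Chat_Demo example and change the compiler path GCC_COMPILER_PATH=aarch64-linux-gnu to the path of your cross compiler.

Then run build-linux.sh to build the example:

cat@lubancat:~$ sudo apt update

cat@lubancat:~$ sudo apt install gcc g++ cmake

# Build locally on the board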

cat@lubancat:~/xxx/deploy$ chmod +x build-linux.sh

cat@lubancat:~/xxx/deploy$ ./build-linux.sh

-- The C compiler identification is GNU 13.3.0

-- The CXX compiler identification is GNU 13.3.0

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /usr/bin/aarch64-linux-gnu-gcc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/aarch64-linux-gnu-g++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Configuring done (0.9s)

-- Generating done (0.0s)

-- Build files have been written to: /home/cat/xxx/deploy/build/build_linux_aarch64_Release

[ 50%] Building CXX object CMakeFiles/llm_demo.dir/src/llm_demo.cpp.o

[100%] Linking CXX executable llm_demo

[100%] Built target llm_demo

[100%] Built target llm_demo

Install the project...

-- Install configuration: "Release"

-- Installing: /home/cat/xxx/deploy/install/demo_Linux_aarch64/./llm_demo

-- Set non-toolchain portion of runtime path of "/home/cat/xxx/deploy/install/demo_Linux_aarch64/./llm_demo" to ""

-- Installing: /home/cat/xxx/deploy/install/demo_Linux_aarch64/lib/librkllmrt.so

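After the build completes, the executable llm_demo is installed under install/demo_Linux_aarch64 in the current directory. Run RKLLM model inference:

# Change to the install/demo_Linux_aarch64 directory

cd install/demo_Linux_aarch64/

# Temporarily set the rkllm runtime library path; alternatively, copy librkllmrt.so to the system library directory /usr/lib

export LD_LIBRARY_PATH=./lib:$LD_LIBRARY_PATH

# Copy the rkllm model converted in the RKLLM-Toolkit test section to the board, set the model path, and run rkllm inference

# Usage: ./llm_demo model_path max_new_tokens max_context_len

./llm_demo ~/Qwen-1_8B-Chat-q.rkllm 256 256

# To print performance statistics, set this variable before starting llm_demo

export RKLLM_LOG_LEVEL=1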
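If the demo is launched from a script rather than an interactive shell, the same environment can be prepared programmatically. The sketch below only builds the argument list and environment for llm_demo and does not start the process. The helper name demo_invocation and its defaults are made up for this example; the two environment variables are the ones used in the commands above.

```python
import os
from typing import Dict, List, Optional, Tuple


def demo_invocation(model_path: str, max_new_tokens: int, max_context_len: int,
                    lib_dir: str = "./lib", log_level: int = 1,
                    base_env: Optional[Dict[str, str]] = None) -> Tuple[List[str], Dict[str, str]]:
    """Return (argv, env) for running llm_demo with the runtime library on the search path."""
    env = dict(os.environ if base_env is None else base_env)
    existing = env.get("LD_LIBRARY_PATH", "")
    # Prepend the library directory, keeping any path that was already set.
    env["LD_LIBRARY_PATH"] = f"{lib_dir}:{existing}" if existing else lib_dir
    env["RKLLM_LOG_LEVEL"] = str(log_level)
    argv = ["./llm_demo", model_path, str(max_new_tokens), str(max_context_len)]
    return argv, env


argv, env = demo_invocation("Qwen-1_8B-Chat-q.rkllm", 256, 256)
print(argv, env["LD_LIBRARY_PATH"], env["RKLLM_LOG_LEVEL"])
```

On the board, the pair could be passed to subprocess.run(argv, env=env) from the directory that contains llm_demo.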

Test output:

# Tested on lubancat-4

# Usage: ./llm_demo model_path max_new_tokens max_context_len

cat@lubancat:~/xxx/install/demo_Linux_aarch64$ export RKLLM_LOG_LEVEL=1

cat@lubancat:~/xxx/install/demo_Linux_aarch64$ ./llm_demo ~/Qwen-1_8B-Chat-q.rkllm 256 256

rkllm init start

I rkllm: rkllm-runtime version: 1.2.1, rknpu driver version: 0.9.8, platform: RK3588

I rkllm: loading rkllm model from /home/cat/Qwen-1_8B-Chat-q.rkllm

I rkllm: rkllm-toolkit version: 1.2.1, max_context_limit: 4096, npu_core_num: 3, target_platform: RK3588, model_dtype: W8A8

I rkllm: Enabled cpus: [4, 5, 6, 7]

I rkllm: Enabled cpus num: 4

rkllm init success

**********************可输入以下问题对应序号获取回答/或自定义输入********************

[0] 把这句话翻译成英文: 现有一笼子,里面有鸡和兔子若干只,数一数,共有头14个,腿38条,求鸡和兔子各有多少只?

[1] 把这句话翻译成中文: Knowledge can be acquired from many sources. These include books, teachers and practical experience,

and each has its own advantages. The knowledge we gain from books and formal education enables us to learn about things

that we have no opportunity to experience in daily life. We can also develop our analytical skills and learn how to

view and interpret the world around us in different ways.

*************************************************************************

I rkllm: reset chat template:

I rkllm: system_prompt: <|im_start|>system \nYou are a translator. <|im_end|>

I rkllm: prompt_prefix: <|im_start|>user \n

I rkllm: prompt_postfix: <|im_end|><|im_start|>assistant

W rkllm: Calling rkllm_set_chat_template will disable the internal automatic chat template parsing, including enable_thinking.

Make sure your custom prompt is complete and valid.

user: 1

把这句话翻译成中文: Knowledge can be acquired from many sources. These include books, teachers and practical experience, and each has

its own advantages. The knowledge we gain from books and formal education enables us to learn about things that we have no opportunity

to experience in daily life. We can also develop our analytical skills and learn how to view and interpret the world around us in different ways.

robot: 知识可以从许多来源获得。这些包括书籍、教师和实践经验,每种都有其自身的优点。从书籍和正规教育中获取的知识使我们能够学习在日常生活中无法体验的事情。

我们还可以培养我们的分析技能,并学会如何以不同的方式看待和解释我们周围的世界。

I rkllm: --------------------------------------------------------------------------------------

I rkllm: Model init time (ms) 1510.23

I rkllm: --------------------------------------------------------------------------------------

I rkllm: Stage Total Time (ms) Tokens Time per Token (ms) Tokens per Second

I rkllm: --------------------------------------------------------------------------------------

I rkllm: Prefill 277.18 93 2.98 335.53

I rkllm: Generate 4057.52 59 68.77 14.54

I rkllm: --------------------------------------------------------------------------------------

I rkllm: Peak Memory Usage (GB)

I rkllm: 1.63

I rkllm: --------------------------------------------------------------------------------------

user:
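The two derived columns of the performance table follow directly from the total time and token count of each stage. The short sketch below recomputes them from the Prefill and Generate rows of the log above; the results agree with the logged values to within rounding of the printed times.

```python
from typing import Tuple


def stage_stats(total_ms: float, tokens: int) -> Tuple[float, float]:
    """Return (milliseconds per token, tokens per second) for one stage."""
    ms_per_token = total_ms / tokens
    tokens_per_second = tokens / (total_ms / 1000.0)
    return ms_per_token, tokens_per_second


# (stage, total time in ms, token count) taken from the log above
for stage, total_ms, tokens in [("Prefill", 277.18, 93), ("Generate", 4057.52, 59)]:
    ms_per_token, tps = stage_stats(total_ms, tokens)
    print(f"{stage}: {ms_per_token:.2f} ms/token, {tps:.2f} tokens/s")
```

Prefill processes the whole prompt in one pass, which is why its throughput is much higher than the token-by-token Generate stage.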