Face Recognition

The example for this section is located in the Baidu Netdisk materials at `百度云盘资料\野火K210 AI视觉相机\1-教程文档_例程源码\例程\10-KPU\face_recognization\face_recog.py`.

Example

```python
# NOTE: Make sure the GC heap is large enough to run this demo; the recommended size is 1 MB.
import sensor, image, time, lcd
from maix import KPU
import gc
from maix import GPIO, utils
from fpioa_manager import fm
from board import board_info

# Map the BOOT key to high-speed GPIO 0 and read it as an input
fm.register(board_info.BOOT_KEY, fm.fpioa.GPIOHS0)
key_gpio = GPIO(GPIO.GPIOHS0, GPIO.IN)

lcd.init()
sensor.reset(dual_buff=True)         # Reset and initialize the sensor. It will
                                     # run automatically, call sensor.run(0) to stop
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.skip_frames(time=1000)        # Wait for the settings to take effect.
# sensor.set_hmirror(0)
# sensor.set_vflip(1)

clock = time.clock()  # Create a clock object to track the FPS.

# 64x64 buffer that receives the aligned face and is fed to the feature model
feature_img = image.Image(size=(64, 64), copy_to_fb=False)
feature_img.pix_to_ai()

FACE_PIC_SIZE = 64
# Reference positions of the five landmarks (eyes, nose tip, mouth corners),
# defined on a 112x112 face and scaled to FACE_PIC_SIZE
dst_point = [(int(38.2946 * FACE_PIC_SIZE / 112), int(51.6963 * FACE_PIC_SIZE / 112)),
             (int(73.5318 * FACE_PIC_SIZE / 112), int(51.5014 * FACE_PIC_SIZE / 112)),
             (int(56.0252 * FACE_PIC_SIZE / 112), int(71.7366 * FACE_PIC_SIZE / 112)),
             (int(41.5493 * FACE_PIC_SIZE / 112), int(92.3655 * FACE_PIC_SIZE / 112)),
             (int(70.7299 * FACE_PIC_SIZE / 112), int(92.2041 * FACE_PIC_SIZE / 112))]

# YOLO v2 anchors: 9 (w, h) pairs
anchor = (0.1075, 0.126875, 0.126875, 0.175, 0.1465625, 0.2246875, 0.1953125, 0.25375, 0.2440625, 0.351875, 0.341875, 0.4721875, 0.5078125, 0.6696875, 0.8984375, 1.099687, 2.129062, 2.425937)

# Face detection model
kpu = KPU()
kpu.load_kmodel("/sd/KPU/yolo_face_detect/face_detect_320x240.kmodel")
kpu.init_yolo2(anchor, anchor_num=9, img_w=320, img_h=240, net_w=320, net_h=240, layer_w=10, layer_h=8, threshold=0.5, nms_value=0.2, classes=1)

# Five-point facial landmark model
ld5_kpu = KPU()
print("ready load model")
ld5_kpu.load_kmodel("/sd/KPU/face_recognization/ld5.kmodel")

# Face feature extraction model
fea_kpu = KPU()
print("ready load model")
fea_kpu.load_kmodel("/sd/KPU/face_recognization/feature_extraction.kmodel")

start_processing = False
BOUNCE_PROTECTION = 50

def set_key_state(*_):
    # BOOT key interrupt: ask the main loop to register the current face
    global start_processing
    start_processing = True
    time.sleep_ms(BOUNCE_PROTECTION)  # crude debounce

key_gpio.irq(set_key_state, GPIO.IRQ_RISING, GPIO.WAKEUP_NOT_SUPPORT)

record_ftrs = []  # features of registered faces
THRESHOLD = 80.5  # minimum score for a match
RATIO = 0         # how far extend_box enlarges each detection box

def extend_box(x, y, w, h, scale):
    # Enlarge the box by scale*w / scale*h on each side, then clamp it to the 320x240 frame
    x1_t = x - scale*w
    x2_t = x + w + scale*w
    y1_t = y - scale*h
    y2_t = y + h + scale*h
    x1 = int(x1_t) if x1_t > 1 else 1
    x2 = int(x2_t) if x2_t < 320 else 319
    y1 = int(y1_t) if y1_t > 1 else 1
    y2 = int(y2_t) if y2_t < 240 else 239
    cut_img_w = x2 - x1 + 1
    cut_img_h = y2 - y1 + 1
    return x1, y1, cut_img_w, cut_img_h

recog_flag = False

try:
    while 1:
        gc.collect()
        #print("mem free:",gc.mem_free())
        #print("heap free:",utils.heap_free())
        clock.tick()  # Update the FPS clock.
        img = sensor.snapshot()
        kpu.run_with_output(img)
        dect = kpu.regionlayer_yolo2()
        fps = clock.fps()
        if len(dect) > 0:
            for l in dect:
                # Crop the face and locate five keypoints
                x1, y1, cut_img_w, cut_img_h = extend_box(l[0], l[1], l[2], l[3], scale=RATIO)
                face_cut = img.cut(x1, y1, cut_img_w, cut_img_h)
                #a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(255, 255, 255))
                # img.draw_image(face_cut, 0,0)
                face_cut_128 = face_cut.resize(128, 128)
                face_cut_128.pix_to_ai()
                out = ld5_kpu.run_with_output(face_cut_128, getlist=True)
                face_key_point = []
                for j in range(5):
                    x = int(KPU.sigmoid(out[2 * j]) * cut_img_w + x1)
                    y = int(KPU.sigmoid(out[2 * j + 1]) * cut_img_h + y1)
                    # a = img.draw_cross(x, y, size=5, color=(0, 0, 255))
                    face_key_point.append((x, y))
                # Align the face to dst_point and extract its feature
                T = image.get_affine_transform(face_key_point, dst_point)
                a = image.warp_affine_ai(img, feature_img, T)
                # feature_img.ai_to_pix()
                # img.draw_image(feature_img, 0,0)
                feature = fea_kpu.run_with_output(feature_img, get_feature=True)
                del face_key_point
                # Compare with every registered feature
                scores = []
                for j in range(len(record_ftrs)):
                    score = kpu.feature_compare(record_ftrs[j], feature)
                    scores.append(score)
                if len(scores):
                    max_score = max(scores)
                    index = scores.index(max_score)
                    if max_score > THRESHOLD:
                        a = img.draw_string(0, 195, "person:%d,score:%2.1f" % (index, max_score), color=(0, 255, 0), scale=2)
                        recog_flag = True
                    else:
                        a = img.draw_string(0, 195, "unregistered,score:%2.1f" % (max_score), color=(255, 0, 0), scale=2)
                del scores
                # Register the current face if the BOOT key was pressed
                if start_processing:
                    record_ftrs.append(feature)
                    print("record_ftrs:%d" % len(record_ftrs))
                    start_processing = False
                # Green box for a recognized face, white otherwise
                if recog_flag:
                    a = img.draw_rectangle(l[0], l[1], l[2], l[3], color=(0, 255, 0))
                    recog_flag = False
                else:
                    a = img.draw_rectangle(l[0], l[1], l[2], l[3], color=(255, 255, 255))
                del (face_cut_128)
                del (face_cut)
        a = img.draw_string(0, 0, "%2.1ffps" % (fps), color=(0, 60, 255), scale=2.0)
        a = img.draw_string(0, 215, "press boot key to register face", color=(255, 100, 0), scale=2.0)
        lcd.display(img)
finally:
    # Runs when the loop is left (e.g. the script is stopped), releasing the KPU models
    kpu.deinit()
    ld5_kpu.deinit()
    fea_kpu.deinit()
```

Code Walkthrough

```python
# NOTE: Make sure the GC heap is large enough to run this demo; the recommended size is 1 MB.
import sensor, image, time, lcd
from maix import KPU
import gc
from maix import GPIO, utils
from fpioa_manager import fm
from board import board_info
```

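Import the required modules:

- `sensor` controls the camera.
- `image` handles image processing.
- `time` provides timing and the FPS clock.
- `lcd` drives the LCD display.
- `KPU` is the interface to the K210 neural-network accelerator.
- `GPIO` reads the key input.
- `fpioa_manager` and `board_info` handle pin mapping.
- `gc` performs garbage collection.

`utils` is only used by the commented-out heap-size printout.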

```python
fm.register(board_info.BOOT_KEY, fm.fpioa.GPIOHS0)
key_gpio = GPIO(GPIO.GPIOHS0, GPIO.IN)
```

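`fm.register` uses the FPIOA to map the `BOOT_KEY` pin to the high-speed GPIO function `GPIOHS0`.

A `GPIO` object, `key_gpio`, is then created on `GPIOHS0` and configured as an input. The rising-edge interrupt is attached later with `key_gpio.irq()`.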

```python
lcd.init()
sensor.reset(dual_buff=True)         # Reset and initialize the sensor. It will
                                     # run automatically, call sensor.run(0) to stop
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.skip_frames(time=1000)        # Wait for the settings to take effect.
# sensor.set_hmirror(0)
# sensor.set_vflip(1)
```

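Initialize the LCD.

Reset the camera with `dual_buff=True` (double buffering), set the pixel format to RGB565 and the frame size to QVGA (320×240), and skip frames for 1000 ms so the settings take effect.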

```python
clock = time.clock()  # Create a clock object to track the FPS.
feature_img = image.Image(size=(64, 64), copy_to_fb=False)
feature_img.pix_to_ai()
FACE_PIC_SIZE = 64
dst_point = [(int(38.2946 * FACE_PIC_SIZE / 112), int(51.6963 * FACE_PIC_SIZE / 112)),
             (int(73.5318 * FACE_PIC_SIZE / 112), int(51.5014 * FACE_PIC_SIZE / 112)),
             (int(56.0252 * FACE_PIC_SIZE / 112), int(71.7366 * FACE_PIC_SIZE / 112)),
             (int(41.5493 * FACE_PIC_SIZE / 112), int(92.3655 * FACE_PIC_SIZE / 112)),
             (int(70.7299 * FACE_PIC_SIZE / 112), int(92.2041 * FACE_PIC_SIZE / 112))]
```

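Create a clock object, `clock`, to track the frame rate (FPS).

Create a 64×64 image, `feature_img`, that is not copied to the frame buffer. Calling `pix_to_ai()` prepares it for the KPU. It is the target of face alignment and the input of the feature-extraction model.

`dst_point` holds the standard positions of five facial keypoints: both eyes, the nose tip and both mouth corners. They are defined on a 112×112 face and scaled to `FACE_PIC_SIZE` (64). Detected keypoints are mapped onto these positions to align the face.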
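To see which integer targets the face is aligned to, the plain-Python sketch below repeats the scaling of `dst_point` off the board. The five 112×112 coordinates appear to match the widely used ArcFace/InsightFace reference landmarks. `REF_112` and `scale_reference` are hypothetical names introduced only for this illustration.

```python
FACE_PIC_SIZE = 64

# The five 112x112 reference landmarks used in the example:
# left eye, right eye, nose tip, left mouth corner, right mouth corner
REF_112 = [(38.2946, 51.6963), (73.5318, 51.5014), (56.0252, 71.7366),
           (41.5493, 92.3655), (70.7299, 92.2041)]


def scale_reference(points, size, ref_size=112):
    """Scale reference points to a size x size face; int() truncates like the example."""
    return [(int(x * size / ref_size), int(y * size / ref_size)) for x, y in points]


dst_point = scale_reference(REF_112, FACE_PIC_SIZE)
print(dst_point)
```

Because `int()` truncates, the nose y-coordinate (about 40.99) becomes 40 rather than 41. Rounding instead would shift that target by one pixel.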

```python
anchor = (0.1075, 0.126875, 0.126875, 0.175, 0.1465625, 0.2246875, 0.1953125, 0.25375, 0.2440625, 0.351875, 0.341875, 0.4721875, 0.5078125, 0.6696875, 0.8984375, 1.099687, 2.129062, 2.425937)
```

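`anchor` holds the anchor box sizes for the YOLO v2 detector. Its 18 values form 9 (width, height) pairs, which is why `init_yolo2` is called with `anchor_num=9`.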
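A quick consistency check is to group the flat tuple into pairs and confirm the count matches `anchor_num`. The sketch assumes the usual YOLO v2 (w, h) ordering within each pair; the tutorial does not state the unit of the values. `anchor_pairs` is a hypothetical helper.

```python
# Values copied from the example; init_yolo2 receives anchor_num=9
anchor = (0.1075, 0.126875, 0.126875, 0.175, 0.1465625, 0.2246875, 0.1953125,
          0.25375, 0.2440625, 0.351875, 0.341875, 0.4721875, 0.5078125,
          0.6696875, 0.8984375, 1.099687, 2.129062, 2.425937)
ANCHOR_NUM = 9


def anchor_pairs(flat):
    """Group a flat (w0, h0, w1, h1, ...) tuple into (w, h) pairs (assumed order)."""
    if len(flat) % 2:
        raise ValueError("anchor tuple must hold an even number of values")
    return [(flat[i], flat[i + 1]) for i in range(0, len(flat), 2)]


pairs = anchor_pairs(anchor)
if len(pairs) != ANCHOR_NUM:
    raise ValueError("anchor_num does not match the anchor tuple")
for w, h in pairs:
    print(w, h)
```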

```python
kpu = KPU()
kpu.load_kmodel("/sd/KPU/yolo_face_detect/face_detect_320x240.kmodel")
kpu.init_yolo2(anchor, anchor_num=9, img_w=320, img_h=240, net_w=320, net_h=240, layer_w=10, layer_h=8, threshold=0.5, nms_value=0.2, classes=1)
```

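Create the KPU object `kpu` and load the face detection model `face_detect_320x240.kmodel` from the SD card. Then configure YOLO v2 post-processing with `init_yolo2`:

- The input image and the network input are both 320×240 (`img_w`/`img_h`, `net_w`/`net_h`).
- The output grid is 10×8 (`layer_w`/`layer_h`).
- The confidence threshold is 0.5 (`threshold`).
- The non-maximum suppression (NMS) threshold is 0.2 (`nms_value`).
- There is a single class, face (`classes=1`).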
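`threshold` and `nms_value` are applied inside the firmware. As a rough, non-authoritative illustration of what such parameters usually mean, the sketch below does two things:

- It keeps boxes whose confidence reaches `threshold`.
- It runs greedy non-maximum suppression, dropping any box whose IoU with an already kept box exceeds `nms_value`.

The `(x, y, w, h, confidence)` tuple format and the function names are assumptions. The KPU's internal decoding may differ in details such as `>` versus `>=`.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h, ...)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, threshold=0.5, nms_value=0.2):
    """Confidence filtering followed by greedy NMS on (x, y, w, h, confidence) tuples."""
    candidates = sorted((d for d in dets if d[4] >= threshold),
                        key=lambda d: d[4], reverse=True)
    kept = []
    for d in candidates:
        # Keep a box only if it does not overlap any stronger kept box too much
        if all(iou(d, k) <= nms_value for k in kept):
            kept.append(d)
    return kept


dets = [(10, 10, 50, 50, 0.9), (12, 12, 50, 50, 0.8),
        (200, 100, 40, 40, 0.7), (0, 0, 10, 10, 0.3)]
print(filter_detections(dets))
```

Here the second box overlaps the first with an IoU of about 0.85, so it is suppressed. The last box falls below the confidence threshold.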

```python
ld5_kpu = KPU()
print("ready load model")
ld5_kpu.load_kmodel("/sd/KPU/face_recognization/ld5.kmodel")
```

Create the KPU object `ld5_kpu` and load the five-point facial landmark model `ld5.kmodel`.

```python
fea_kpu = KPU()
print("ready load model")
fea_kpu.load_kmodel("/sd/KPU/face_recognization/feature_extraction.kmodel")
```

Create the KPU object `fea_kpu` and load the face feature extraction model `feature_extraction.kmodel`.

```python
start_processing = False
BOUNCE_PROTECTION = 50

def set_key_state(*_):
    global start_processing
    start_processing = True
    time.sleep_ms(BOUNCE_PROTECTION)

key_gpio.irq(set_key_state, GPIO.IRQ_RISING, GPIO.WAKEUP_NOT_SUPPORT)
```

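Set up the key interrupt. On a rising edge of the BOOT key, `set_key_state` sets `start_processing` to `True`, which tells the main loop to register the feature of the current face. The 50 ms `time.sleep_ms(BOUNCE_PROTECTION)` is a simple debounce that suppresses repeated triggers from contact bounce.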
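Sleeping inside the interrupt handler, as `set_key_state` does, delays the rest of the program by 50 ms each time it fires. A common alternative, sketched below in plain Python, keeps the handler short:

- The handler only records the time of each accepted edge and sets a flag.
- It ignores edges that arrive within the debounce window.
- The main loop consumes the flag exactly once.

`KeyRegistrar` and its methods are hypothetical. On the board, `now_ms` would come from `time.ticks_ms()`, and the subtraction would be replaced by `time.ticks_diff()` so that tick wraparound is handled.

```python
class KeyRegistrar:
    """Hypothetical debounced 'register face' request flag."""

    def __init__(self, debounce_ms=50):
        self.debounce_ms = debounce_ms
        self.pending = False
        self._last_ms = None

    def on_rising_edge(self, now_ms):
        # Called from the interrupt: ignore edges inside the debounce window
        if self._last_ms is not None and now_ms - self._last_ms < self.debounce_ms:
            return
        self._last_ms = now_ms
        self.pending = True

    def take(self):
        # Called from the main loop: True once per accepted press, then cleared
        if self.pending:
            self.pending = False
            return True
        return False


r = KeyRegistrar(50)
r.on_rising_edge(0)
r.on_rising_edge(10)  # bounce, ignored
print(r.take(), r.take())
```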

```python
record_ftrs = []
THRESHOLD = 80.5
```

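`record_ftrs` is a list that stores the features of registered faces.

`THRESHOLD` is a float used to judge the similarity between a newly detected face feature and the registered features. The main loop compares the best similarity score against it, and a face whose best score exceeds the threshold is considered registered.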
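The matching logic can be tried off the board with plain lists. The exact formula inside `kpu.feature_compare()` is not shown in this tutorial. This sketch assumes a cosine similarity mapped from [-1, 1] onto a 0 to 100 score, so treat the numbers as illustrative only. `compare_score` and `best_match` are hypothetical helpers that mirror the scoring loop in the example.

```python
import math


def compare_score(f0, f1):
    """Assumed score: cosine similarity mapped from [-1, 1] to [0, 100]."""
    dot = sum(a * b for a, b in zip(f0, f1))
    n0 = math.sqrt(sum(a * a for a in f0))
    n1 = math.sqrt(sum(b * b for b in f1))
    if n0 == 0 or n1 == 0:
        raise ValueError("feature vectors must be non-zero")
    return (0.5 + 0.5 * dot / (n0 * n1)) * 100.0


def best_match(record_ftrs, feature, threshold=80.5):
    """Return (index, max_score); index is None if nothing exceeds the threshold."""
    if not record_ftrs:
        return None, None
    scores = [compare_score(r, feature) for r in record_ftrs]
    max_score = max(scores)
    index = scores.index(max_score)
    return (index if max_score > threshold else None), max_score


print(best_match([[1.0, 0.0], [0.0, 1.0]], [1.0, 0.1]))
```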

```python
RATIO = 0

def extend_box(x, y, w, h, scale):
    x1_t = x - scale*w
    x2_t = x + w + scale*w
    y1_t = y - scale*h
    y2_t = y + h + scale*h
    x1 = int(x1_t) if x1_t > 1 else 1
    x2 = int(x2_t) if x2_t < 320 else 319
    y1 = int(y1_t) if y1_t > 1 else 1
    y2 = int(y2_t) if y2_t < 240 else 239
    cut_img_w = x2 - x1 + 1
    cut_img_h = y2 - y1 + 1
    return x1, y1, cut_img_w, cut_img_h
```

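`extend_box` enlarges a detection box by `scale` times its width and height on every side. It clamps the result to the 320×240 frame (1 to 319 horizontally, 1 to 239 vertically) and returns the top-left corner plus the crop width and height. Because `RATIO = 0`, the box in this example is only clamped, not enlarged.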
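The function needs nothing from the board, so its behaviour can be checked directly in Python. In this copy, the frame size is turned into the hypothetical parameters `frame_w` and `frame_h`, whose defaults reproduce the example's hard-coded 320×240.

```python
def extend_box(x, y, w, h, scale, frame_w=320, frame_h=240):
    """Enlarge (x, y, w, h) by scale on each side and clamp it to the frame."""
    x1_t = x - scale * w
    x2_t = x + w + scale * w
    y1_t = y - scale * h
    y2_t = y + h + scale * h
    x1 = int(x1_t) if x1_t > 1 else 1
    x2 = int(x2_t) if x2_t < frame_w else frame_w - 1
    y1 = int(y1_t) if y1_t > 1 else 1
    y2 = int(y2_t) if y2_t < frame_h else frame_h - 1
    # Width and height are inclusive of both end pixels
    return x1, y1, x2 - x1 + 1, y2 - y1 + 1


print(extend_box(50, 60, 80, 90, 0))       # RATIO = 0: box only clamped
print(extend_box(10, 10, 100, 100, 0.25))  # clipped at the top-left corner
```

Because the width and height are computed inclusively (`x2 - x1 + 1`), the crop with `scale=0` is one pixel wider and taller than the detected box.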

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 | recog_flag = False

while 1:

gc.collect()

#print("mem free:",gc.mem_free())

#print("heap free:",utils.heap_free())

clock.tick() # Update the FPS clock.

img = sensor.snapshot()

kpu.run_with_output(img)

dect = kpu.regionlayer_yolo2()

fps = clock.fps()

if len(dect) > 0:

for l in dect :

x1, y1, cut_img_w, cut_img_h= extend_box(l[0], l[1], l[2], l[3], scale=RATIO)

face_cut = img.cut(x1, y1, cut_img_w, cut_img_h)

#a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(255, 255, 255))

# img.draw_image(face_cut, 0,0)

face_cut_128 = face_cut.resize(128, 128)

face_cut_128.pix_to_ai()

out = ld5_kpu.run_with_output(face_cut_128, getlist=True)

face_key_point = []

for j in range(5):

x = int(KPU.sigmoid(out[2 * j])*cut_img_w + x1)

y = int(KPU.sigmoid(out[2 * j + 1])*cut_img_h + y1)

# a = img.draw_cross(x, y, size=5, color=(0, 0, 255))

face_key_point.append((x,y))

T = image.get_affine_transform(face_key_point, dst_point)

a = image.warp_affine_ai(img, feature_img, T)

# feature_img.ai_to_pix()

# img.draw_image(feature_img, 0,0)

feature = fea_kpu.run_with_output(feature_img, get_feature = True)

del face_key_point

scores = []

for j in range(len(record_ftrs)):

score = kpu.feature_compare(record_ftrs[j], feature)

scores.append(score)

if len(scores):

max_score = max(scores)

index = scores.index(max_score)

if max_score > THRESHOLD:

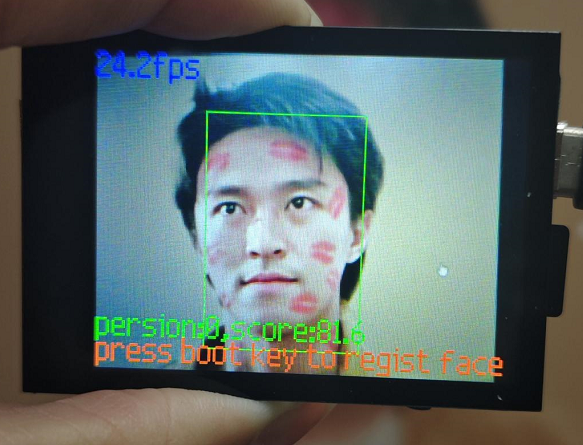

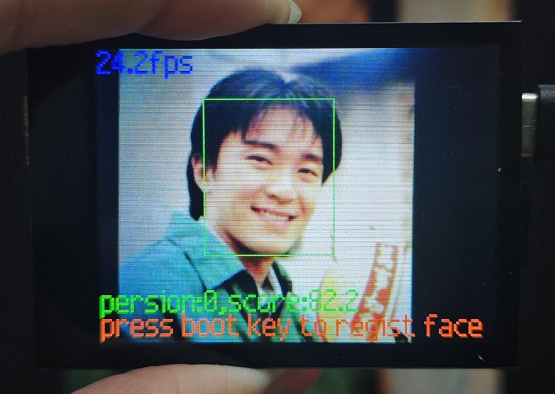

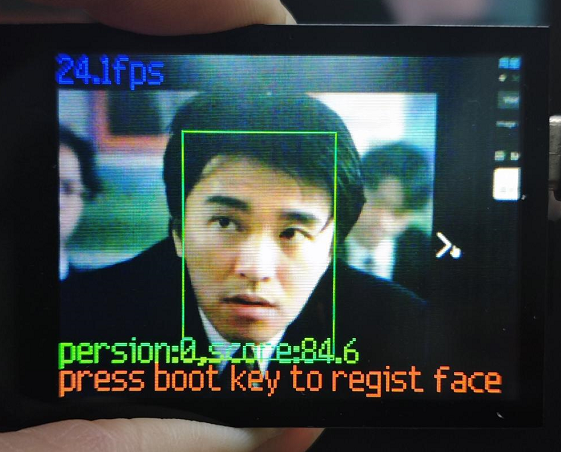

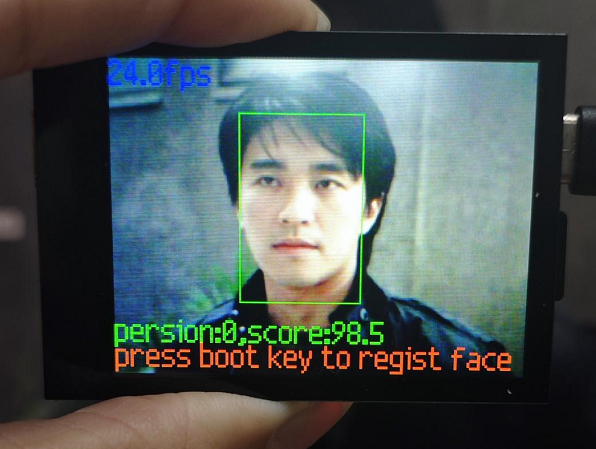

a=img.draw_string(0, 195, "persion:%d,score:%2.1f" %(index, max_score), color=(0, 255, 0), scale=2)

recog_flag = True

else:

a=img.draw_string(0, 195, "unregistered,score:%2.1f" %(max_score), color=(255, 0, 0), scale=2)

del scores

if start_processing:

record_ftrs.append(feature)

print("record_ftrs:%d" % len(record_ftrs))

start_processing = False

if recog_flag:

a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(0, 255, 0))

recog_flag = False

else:

a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(255, 255, 255))

del (face_cut_128)

del (face_cut)

a = img.draw_string(0, 0, "%2.1ffps" %(fps), color=(0, 60, 255), scale=2.0)

a = img.draw_string(0, 215, "press boot key to regist face", color=(255, 100, 0), scale=2.0)

lcd.display(img)

|

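The main loop starts each iteration in five steps:

1. It runs garbage collection.
2. It updates the FPS clock.
3. It captures a frame.
4. It runs the face detection model on that frame.
5. It decodes the detections with `regionlayer_yolo2()`.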

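For each detected face:

- `extend_box` computes the crop region; with `RATIO = 0` this is just the clamped detection box.
- `img.cut()` crops the face.
- The crop is resized to 128×128 and prepared with `pix_to_ai()` for the landmark model `ld5_kpu`.
- The model returns 10 values. Each one is passed through `KPU.sigmoid()`, scaled by the crop width or height, and offset by the crop origin. This yields five keypoints (x, y) in frame coordinates.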
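The keypoint decoding can be reproduced in plain Python. This sketch assumes `KPU.sigmoid()` is the standard logistic function 1 / (1 + e^(-v)). `decode_keypoints` is a hypothetical helper, not part of MaixPy.

```python
import math


def sigmoid(v):
    """Standard logistic function (assumed to match KPU.sigmoid)."""
    return 1.0 / (1.0 + math.exp(-v))


def decode_keypoints(out, x1, y1, cut_w, cut_h, num_points=5):
    """Map 2*num_points raw outputs to (x, y) pixels inside the crop at (x1, y1)."""
    if len(out) < 2 * num_points:
        raise ValueError("not enough model outputs")
    points = []
    for j in range(num_points):
        # sigmoid gives a 0..1 fraction of the crop size; add the crop origin
        x = int(sigmoid(out[2 * j]) * cut_w + x1)
        y = int(sigmoid(out[2 * j + 1]) * cut_h + y1)
        points.append((x, y))
    return points


print(decode_keypoints([0.0] * 10, 100, 50, 80, 100))
```

A raw output of 0 maps to the centre of the crop. Because the sigmoid never reaches 0 or 1, every decoded point stays inside the crop.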

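The five keypoints and `dst_point` are passed to `image.get_affine_transform()` to obtain the transform `T`. `image.warp_affine_ai()` then warps the face from the frame into the 64×64 `feature_img`, and `fea_kpu` extracts a feature vector from the aligned face (`get_feature=True`).

The feature is compared with every registered feature using `kpu.feature_compare()`:

- If the best score exceeds `THRESHOLD`, the person's index and score are drawn in green and the face box turns green.
- Otherwise, "unregistered" and the score are drawn in red and the box stays white.
- If no face has been registered yet, no score is shown.

If the BOOT key was pressed, the current feature is appended to `record_ftrs` and the flag is cleared. Finally, the FPS and a prompt are drawn, and the frame is shown on the LCD.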
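`image.get_affine_transform()` is implemented in the firmware, and this tutorial does not describe how it estimates the transform. One common way to fit five landmark pairs is a least-squares similarity transform (uniform scale, rotation and translation), returned as a 2×3 matrix. The sketch below implements that as an illustration, not as MaixPy's algorithm. `estimate_similarity` and `apply_transform` are hypothetical names, and the detected keypoints are synthetic.

```python
def estimate_similarity(src, dst):
    """Least-squares fit of dst ~ [[a, -b], [b, a]] @ src + t; returns a 2x3 matrix."""
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two matching point pairs")
    msx = sum(p[0] for p in src) / n
    msy = sum(p[1] for p in src) / n
    mdx = sum(p[0] for p in dst) / n
    mdy = sum(p[1] for p in dst) / n
    num_a = num_b = den = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        sx, sy, dx, dy = sx - msx, sy - msy, dx - mdx, dy - mdy
        num_a += sx * dx + sy * dy  # scale * cos(angle) term
        num_b += sx * dy - sy * dx  # scale * sin(angle) term
        den += sx * sx + sy * sy
    if den == 0:
        raise ValueError("source points are all identical")
    a, b = num_a / den, num_b / den
    tx = mdx - (a * msx - b * msy)
    ty = mdy - (b * msx + a * msy)
    return [[a, -b, tx], [b, a, ty]]


def apply_transform(T, point):
    """Apply a 2x3 affine matrix to an (x, y) point."""
    x, y = point
    return (T[0][0] * x + T[0][1] * y + T[0][2],
            T[1][0] * x + T[1][1] * y + T[1][2])


dst_point = [(21, 29), (42, 29), (32, 40), (23, 52), (40, 52)]
# Synthetic "detected" keypoints: the template scaled by 2 and shifted by (10, 20)
keypoints = [(2 * x + 10, 2 * y + 20) for x, y in dst_point]
T = estimate_similarity(keypoints, dst_point)
print(T)
```

A similarity transform cannot shear or stretch the face unevenly, which is one reason it is often preferred for face alignment over a general affine fit.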

```python
kpu.deinit()
ld5_kpu.deinit()
fea_kpu.deinit()
```

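These calls release the three KPU models. The main loop is `while 1:` with no `break`, so in the loop as written above these lines are never reached.

Wrapping the loop in `try:`/`finally:` and placing the three `deinit()` calls in the `finally` block guarantees that the KPU resources are released when the script is stopped, for example by a `KeyboardInterrupt`.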