Face Mask Detection

The example for this section is located at 百度云盘资料\野火K210 AI视觉相机\1-教程文档_例程源码\例程\10-KPU\face_mask_detect\face_mask_detect.py

Example

```python
import sensor, image, time, lcd
from maix import KPU
import gc

lcd.init()
sensor.reset(dual_buff=True)        # Reset and initialize the sensor. It will
                                    # run automatically, call sensor.run(0) to stop
sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)   # Set frame size to QVGA (320x240)
sensor.skip_frames(time = 1000)     # Wait for settings to take effect.
clock = time.clock()                # Create a clock object to track the FPS.

od_img = image.Image(size=(320,256), copy_to_fb=False)

anchor = (0.156250, 0.222548, 0.361328, 0.489583, 0.781250, 0.983133, 1.621094, 1.964286, 3.574219, 3.94000)
kpu = KPU()
print("ready load model")
kpu.load_kmodel("/sd/KPU/face_mask_detect/detect_5.kmodel")
kpu.init_yolo2(anchor, anchor_num=5, img_w=320, img_h=240, net_w=320 , net_h=256 ,layer_w=10 ,layer_h=8, threshold=0.7, nms_value=0.4, classes=2)

while True:
    #print("mem free:",gc.mem_free())
    clock.tick()                    # Update the FPS clock.
    img = sensor.snapshot()
    a = od_img.draw_image(img, 0,0)
    od_img.pix_to_ai()
    kpu.run_with_output(od_img)
    dect = kpu.regionlayer_yolo2()
    fps = clock.fps()
    if len(dect) > 0:
        print("dect:",dect)
        for l in dect :
            if l[4] :
                a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(0, 255, 0))
                a = img.draw_string(l[0],l[1]-24, "with mask", color=(0, 255, 0), scale=2)
            else:
                a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(255, 0, 0))
                a = img.draw_string(l[0],l[1]-24, "without mask", color=(255, 0, 0), scale=2)

    a = img.draw_string(0, 0, "%2.1ffps" %(fps), color=(0, 60, 128), scale=2.0)
    lcd.display(img)
    gc.collect()

kpu.deinit()
```

Example Walkthrough

```python
import sensor, image, time, lcd
from maix import KPU
import gc
```

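Import the required modules: sensor, image, time, lcd, KPU, and gc. They handle camera control, image processing, timing, LCD display, KPU acceleration, and garbage collection, respectively.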

```python
lcd.init()
sensor.reset(dual_buff=True)        # Reset and initialize the sensor. It will
                                    # run automatically, call sensor.run(0) to stop
sensor.set_pixformat(sensor.RGB565) # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)   # Set frame size to QVGA (320x240)
sensor.skip_frames(time = 1000)     # Wait for settings to take effect.
```

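Initialize the LCD and the camera, set the camera pixel format to RGB565 and the resolution to QVGA (320x240), then skip frames (time = 1000) so the settings can take effect.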

```python
clock = time.clock()                # Create a clock object to track the FPS.
```

Create a clock object, clock, to track the frame rate (FPS).

```python
od_img = image.Image(size=(320,256), copy_to_fb=False)
```

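Create a 320x256 image object, od_img, that holds the input for the AI processing that follows. Its size matches the network input size (net_w=320, net_h=256) passed to init_yolo2.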

```python
anchor = (0.156250, 0.222548, 0.361328, 0.489583, 0.781250, 0.983133, 1.621094, 1.964286, 3.574219, 3.94000)
```

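Define the anchor array, anchor, used by the YOLO v2 object detection algorithm. Its ten values form five (width, height) pairs, which is why anchor_num=5 is passed to init_yolo2.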
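The flat anchor tuple can be read as consecutive (width, height) pairs, the usual YOLO v2 convention. The following is a minimal sketch in plain Python that runs off-device; `anchor_pairs` is a name introduced here for illustration and is not part of the MaixPy API.

```python
# Flat anchor tuple copied from the example.
anchor = (0.156250, 0.222548, 0.361328, 0.489583, 0.781250,
          0.983133, 1.621094, 1.964286, 3.574219, 3.94000)

# Group consecutive values into (width, height) pairs.
# anchor_pairs is an illustrative name, not a MaixPy identifier.
anchor_pairs = list(zip(anchor[0::2], anchor[1::2]))

# The number of pairs is the value passed as anchor_num to init_yolo2.
anchor_num = len(anchor_pairs)

for w, h in anchor_pairs:
    print("anchor w=%.3f h=%.3f" % (w, h))
```

If the anchor list is ever replaced with one from a different model, checking `len(anchor) == 2 * anchor_num` in this way is a cheap guard against a mismatched `anchor_num`.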

```python
kpu = KPU()
print("ready load model")
kpu.load_kmodel("/sd/KPU/face_mask_detect/detect_5.kmodel")
kpu.init_yolo2(anchor, anchor_num=5, img_w=320, img_h=240, net_w=320 , net_h=256 ,layer_w=10 ,layer_h=8, threshold=0.7, nms_value=0.4, classes=2)
```

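Create a KPU object, kpu, load the face mask detection model detect_5.kmodel from the SD card, and initialize YOLO v2 object detection with a detection threshold of 0.7 and an NMS value of 0.4. The model detects whether a face is wearing a mask and has two classes: with mask and without mask.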
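The sizes passed to init_yolo2 fit together arithmetically. Dividing the network input by the grid size gives 32 pixels per cell in both directions, and the 256-row network input is 16 rows taller than the 240-row camera frame, which would explain why od_img is created as 320x256 and the frame is drawn into it at (0, 0). The sketch below only checks that arithmetic in plain Python; the interpretation of each parameter is inferred from its name and value, not taken from the MaixPy documentation.

```python
# Values copied from the init_yolo2 call in the example.
img_w, img_h = 320, 240      # camera frame (QVGA)
net_w, net_h = 320, 256      # network input, same size as od_img
layer_w, layer_h = 10, 8     # output grid of the region layer

# Pixels of network input covered by one grid cell (assumed meaning).
stride_x = net_w // layer_w
stride_y = net_h // layer_h

# Rows of od_img not covered by the camera frame drawn at (0, 0).
unused_rows = net_h - img_h

print("cell size:", stride_x, "x", stride_y)
print("unused rows at the bottom of od_img:", unused_rows)
```

Note that 240 is not a multiple of 32 while 256 is, so padding the frame height to 256 keeps the grid at a whole number of rows.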

```python
while True:
    #print("mem free:",gc.mem_free())
    clock.tick()                    # Update the FPS clock.
    img = sensor.snapshot()
    a = od_img.draw_image(img, 0,0)
    od_img.pix_to_ai()
    kpu.run_with_output(od_img)
    dect = kpu.regionlayer_yolo2()
    fps = clock.fps()
    if len(dect) > 0:
        print("dect:",dect)
        for l in dect :
            if l[4] :
                a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(0, 255, 0))
                a = img.draw_string(l[0],l[1]-24, "with mask", color=(0, 255, 0), scale=2)
            else:
                a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(255, 0, 0))
                a = img.draw_string(l[0],l[1]-24, "without mask", color=(255, 0, 0), scale=2)

    a = img.draw_string(0, 0, "%2.1ffps" %(fps), color=(0, 60, 128), scale=2.0)
    lcd.display(img)
    gc.collect()
```

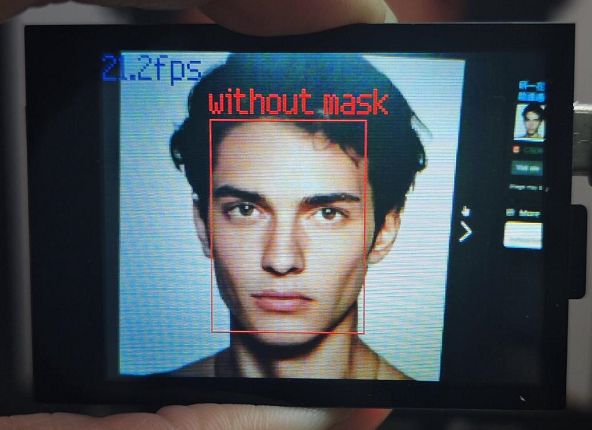

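Enter the main loop. Each iteration first updates the FPS clock, captures a frame, draws the frame into od_img at (0, 0), and converts od_img to the AI format with pix_to_ai().

Run the face mask detection model on od_img and fetch the detection results from the YOLO v2 region layer.

If any faces are detected, draw a rectangle around each one using l[0] to l[3] of the result, and draw a label 24 pixels above the rectangle: a green "with mask" when l[4] is nonzero, a red "without mask" otherwise.

Draw the FPS value in the top-left corner, show the processed image on the LCD, and run the garbage collector.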
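The branching on l[4] in the loop can be factored into small helper functions, which makes the label logic testable without a camera. The sketch below is plain Python; `label_for`, `label_y`, and the sample detections are all hypothetical names and values introduced for illustration. It assumes only what the example itself relies on: the box is in elements 0 to 3 and the class index is in element 4. The clamp in `label_y` is an addition, not something the example does.

```python
def label_for(det):
    """Return (text, color) for one detection, mirroring the if/else in the loop.

    det is assumed to be indexable, with the box in det[0..3] and the class
    index in det[4], as the example uses it.
    """
    if det[4]:
        return "with mask", (0, 255, 0)
    return "without mask", (255, 0, 0)


def label_y(det, text_height=24):
    """Y coordinate for the label, kept on screen for boxes near the top edge.

    The example draws at det[1] - 24 directly; clamping to 0 is an addition
    made here for illustration.
    """
    return max(det[1] - text_height, 0)


# Hypothetical detections (x, y, w, h, class index), made up for this sketch.
samples = [(100, 80, 60, 70, 1), (20, 10, 50, 60, 0)]
for det in samples:
    text, color = label_for(det)
    print(det[:4], text, color, "label y =", label_y(det))
```

On the device, the returned text and color could be passed to img.draw_rectangle and img.draw_string exactly as the example does.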

```python
kpu.deinit()
```

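After the loop ends, release all KPU resources. As written, the while True loop contains no break, so this call is reached only if the loop is modified to exit.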
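A try/finally block is one way to guarantee that the cleanup runs however the loop stops. The sketch below shows the pattern in plain Python so that it can run off-device: `FakeKPU` is a hypothetical stand-in for maix.KPU, `run` and `max_frames` are illustrative names, and the assumption that stopping the script surfaces as KeyboardInterrupt should be checked on the actual firmware.

```python
class FakeKPU:
    """Hypothetical stand-in for maix.KPU so the sketch runs off-device."""

    def __init__(self):
        self.released = False

    def deinit(self):
        self.released = True


def run(kpu, max_frames):
    """Process frames until interrupted, then always release the KPU."""
    frames = 0
    try:
        while True:
            frames += 1              # placeholder for snapshot/inference/draw
            if frames >= max_frames:
                # Simulates the user stopping the script (assumed behavior).
                raise KeyboardInterrupt
    except KeyboardInterrupt:
        pass
    finally:
        kpu.deinit()                 # runs however the loop exits
    return frames


kpu = FakeKPU()
print(run(kpu, 3), kpu.released)
```

In the real script, the body of the while loop would replace the placeholder line and the simulated interrupt would be removed.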