6. YOLOv10¶

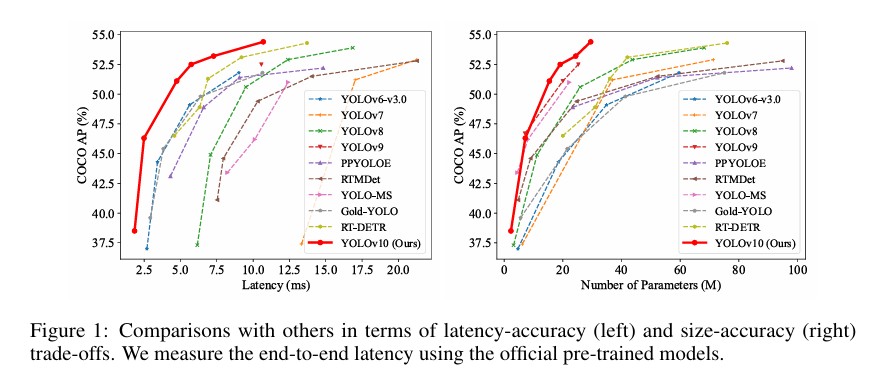

YOLOv10 是清华大学研究人员近期提出的一种实时目标检测方法,通过消除NMS、优化模型架构和引入创新模块等策略, 在保持高精度的同时显著降低了计算开销,为实时目标检测领域带来了新的突破。

YOLOv10 是在Ultralytics Python包的基础上进行了多项创新和改进,主要特点:

消除非极大值抑制(NMS):YOLOv10通过引入一致的双重分配策略,在训练时使用一对多的标签分配来提供丰富的监督信号,在推理时使用一对一的匹配,从而消除了对NMS的依赖。

全面优化的模型架构:YOLOv10从推理效率和准确性的角度出发,全面优化了模型的各个组成部分。这包括采用轻量级分类头、空间通道去耦下采样和等级引导块设计等,以减少计算冗余并提高模型性能。

引入大核卷积和部分自注意模块:为了提高性能,YOLOv10在不增加大量计算成本的前提下,引入了大核卷积和部分自注意模块。

YOLOv10论文地址:https://arxiv.org/pdf/2405.14458

YOLOv10项目地址:https://github.com/THU-MIG/yolov10

本章将简单测试YOLOv10,并导出rknn模型,在板卡上使用RKNN Toolkit Lite2部署测试。

6.1. YOLOv10简单测试¶

6.1.1. 环境配置¶

获取工程源码:

git clone https://github.com/THU-MIG/yolov10.git

使用Anaconda进行环境管理,创建一个yolov10环境:

conda create -n yolov10 python=3.9

conda activate yolov10

# 切换到yolov10工程目录,然后安装依赖

cd yolov10

pip install -r requirements.txt

pip install -e .

# 安装完成后简单测试yolo命令

(yolov10) xxx@anhao:~$ yolo -V

8.1.34

6.1.2. 模型推理测试¶

获取模型(这里获取s尺寸yolov10模型):

wget https://github.com/THU-MIG/yolov10/releases/download/v1.1/yolov10s.pt

然后使用命令测试:

(yolov10) xxx@anhao:~$ yolo predict model=./yolov10s.pt

WARNING ⚠️ 'source' argument is missing. Using default 'source=xxx'.

Ultralytics YOLOv8.1.34 🚀 Python-3.9.19 torch-2.0.1+cu117 CUDA:0 (NVIDIA GeForce RTX 3060 Laptop GPU, 6144MiB)

YOLOv10n summary (fused): 285 layers, 2762608 parameters, 63840 gradients, 8.6 GFLOPs

image 1/2 xxx/bus.jpg: 640x480 4 persons, 1 bus, 55.5ms

image 2/2 xxx/zidane.jpg: 384x640 2 persons, 60.5ms

Speed: 2.9ms preprocess, 58.0ms inference, 5.4ms postprocess per image at shape (1, 3, 384, 640)

Results saved to /xxx/yolov10/runs/detect/predict

💡 Learn more at https://docs.ultralytics.com/modes/predict

结果保存在yolov10工程文件runs/detect/predict目录下。

也可以测试yolov10工程文件中的app.py程序,一个网页显示,直接拖拽图像,然后推理显示结果。

6.2. 板卡上部署测试¶

为了在板卡上部署yolov10,参考 YOLOv8模型 的适配修改,修改下yolov10模型输出。

6.2.1. 导出适配的onnx模型¶

拉取修改的yolov10工程文件:

git clone https://github.com/mmontol/yolov10.git

修改ultralytics/cfg/default.yaml文件,设置模型路径为前面拉取的官方yolov10模型路径:

1 2 3 4 5 6 7 | task: detect # (str) YOLO task, i.e. detect, segment, classify, pose

mode: train # (str) YOLO mode, i.e. train, val, predict, export, track, benchmark

# Train settings -------------------------------------------------------------------------------------------------------

model: ../yolov10n.pt # (str, optional) path to model file, i.e. yolov8n.pt, yolov8n.yaml

data: # (str, optional) path to data file, i.e. coco128.yaml

epochs: 100 # (int) number of epochs to train for

|

然后使用命令导出onnx模型:

export PYTHONPATH=./

python ./ultralytics/engine/exporter.py

最后导出模型保存在原模型同级目录下。

6.2.2. 导出rknn模型¶

使用toolkit2工具,将前面的onnx模型转换成rknn模型, toolkit2的安装可以参考下 这里 。

编程一个简单的模型转换程序:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 | # 省略.....

if __name__ == '__main__':

model_path, platform, do_quant, output_path = parse_arg()

# Create RKNN object

rknn = RKNN(verbose=False)

# Pre-process config

print('--> Config model')

rknn.config(mean_values=[[0, 0, 0]], std_values=[

[255, 255, 255]], target_platform=platform)

print('done')

# Load model

print('--> Loading model')

ret = rknn.load_onnx(model=model_path)

#ret = rknn.load_pytorch(model=model_path, input_size_list=[[1, 3, 640, 640]])

if ret != 0:

print('Load model failed!')

exit(ret)

print('done')

# Build model

print('--> Building model')

ret = rknn.build(do_quantization=do_quant, dataset=DATASET_PATH)

if ret != 0:

print('Build model failed!')

exit(ret)

print('done')

# Export rknn model

print('--> Export rknn model')

ret = rknn.export_rknn(output_path)

if ret != 0:

print('Export rknn model failed!')

exit(ret)

print('done')

# Release

rknn.release()

|

使用toolkit2工具导出rknn模型:

(toolkit2_2.0) xxx@anhao:xxx$ python onnx2rknn.py ./yolov10n.onnx rk3588 fp

I rknn-toolkit2 version: 2.0.0b0+9bab5682

--> Config model

done

--> Loading model

I It is recommended onnx opset 19, but your onnx model opset is 13!

I Model converted from pytorch, 'opset_version' should be set 19 in torch.onnx.export for successful convert!

I Loading : 100%|██████████████████████████████████████████████| 182/182 [00:00<00:00, 67884.69it/s]

done

--> Building model

W build: The dataset='./coco_subset_20.txt' is ignored because do_quantization = False!

I rknn building ...

I rknn buiding done.

done

--> Export rknn model

done

提示

为避免其他问题,使用rknn-Toolkit2的版本和板卡推理使用的librknnrt.so库版本保持一致。

6.2.3. 部署测试¶

在鲁班猫RK系列板卡上部署,这里测试使用RKNN Toolkit Lite2提供的python接口,RKNN Toolkit Lite2安装请参考下前面教程。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 | if __name__ == '__main__':

# Get device information

host_name = get_host()

if host_name == 'RK3566_RK3568':

rknn_model = RK3566_RK3568_RKNN_MODEL_PATH

elif host_name == 'RK3562':

rknn_model = RK3562_RKNN_MODEL_PATH

elif host_name == 'RK3576':

rknn_model = RK3576_RKNN_MODEL_PATH

elif host_name == 'RK3588':

rknn_model = RK3588_RKNN_MODEL_PATH

else:

print("This demo cannot run on the current platform: {}".format(host_name))

exit(-1)

rknn_lite = RKNNLite()

# 加载rknn模型

ret = rknn_lite.load_rknn(rknn_model)

if ret != 0:

print('Load RKNN model failed')

exit(ret)

# 设置运行环境,目标默认是rk3588,选择npu0单核

if host_name in ['RK3576', 'RK3588']:

ret = rknn_lite.init_runtime(core_mask=RKNNLite.NPU_CORE_0)

else:

ret = rknn_lite.init_runtime()

if ret != 0:

print('Init runtime environment failed')

exit(ret)

print('done')

# 输入图像

img_src = cv2.imread(IMG_PATH)

src_shape = img_src.shape[:2]

img, ratio, (dw, dh) = letter_box(img_src, IMG_SIZE)

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

img = np.expand_dims(img, 0)

# 推理运行

print('--> Running model')

outputs = rknn_lite.inference(inputs=[img])

# 后处理

boxes, classes, scores = post_process(outputs)

img_p = img_src.copy()

if boxes is not None:

draw(img_p, get_real_box(src_shape, boxes, dw, dh, ratio), scores, classes)

cv2.imwrite("result.jpg", img_p)

|

修改程序中的模型路径和输入图像路径,然后运行程序,即可得到推理结果。

cat@lubancat:~/xxx$ python3 yolov10.py

I RKNN: [13:51:14.616] RKNN Runtime Information, librknnrt version: 2.0.0b0 (35a6907d79@2024-03-24T10:31:14)

I RKNN: [13:51:14.616] RKNN Driver Information, version: 0.9.2

I RKNN: [13:51:14.617] RKNN Model Information, version: 6, toolkit version: 2.0.0b0+9bab5682(compiler version: 2.0.0b0 (35a6907d79@2024-03-24T02:34:11)),

target: RKNPU v2, target platform: rk3588, framework name: ONNX, framework layout: NCHW, model inference type: static_shape

done

--> Running model

person @ (79 330 115 518) 0.313

bus @ (87 137 556 438) 0.936

person @ (210 240 284 512) 0.906

person @ (109 234 226 536) 0.897

person @ (477 233 560 520) 0.833

推理结果保存在当前目录下,名为result.jpg。