7. YOLOv8 Object Detection

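Ultralytics YOLOv8 builds on the success of earlier YOLO versions, introducing new features and improvements that further boost performance and flexibility. Designed to be fast, accurate, and easy to use, YOLOv8 is a strong choice for object detection and tracking, instance segmentation, image classification, and pose estimation.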

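7.1. Exporting the YOLOv8 model file

On a virtual machine or in another environment, use conda to create a yolov8 environment:

# Create a virtual environment with conda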

conda create -n yolov8 python=3.8

conda activate yolov8

# Install PyTorch and other dependencies

# Install YOLOv8 directly with pip

pip install ultralytics -i https://mirror.baidu.com/pypi/simple

# Or clone the repository and install from source

git clone https://github.com/ultralytics/ultralytics

cd ultralytics

pip install -e .

# After installation, check the version with the yolo command

(yolov8) llh@anhao:/$ yolo version

8.2.45

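In the yolov8 environment, obtain the yolov8n weight file, then create a Python file (export.py in the commands below) that adjusts the YOLOv8 output branches: it removes the decoding part of the detection head's forward function and keeps the box and cls outputs of the three feature maps separate, giving six output branches.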

from ultralytics import YOLO
import types

input_size = (640, 640)

def forward2(self, x):
    # Skip decoding: return the raw box (cv2) and class (cv3) branch outputs
    x_reg = [self.cv2[i](x[i]) for i in range(self.nl)]
    x_cls = [self.cv3[i](x[i]) for i in range(self.nl)]
    return x_reg + x_cls

model_path = "./yolov8n.pt"
model = YOLO(model_path)
# Replace forward on the detection head (the last layer of the model)
model.model.model[-1].forward = types.MethodType(forward2, model.model.model[-1])
model.export(format='onnx', opset=11, imgsz=input_size)
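The export script relies on `types.MethodType` to rebind `forward` on a single object. The following is a minimal, self-contained illustration of that mechanism only. `ToyHead` and its string-producing branches are hypothetical stand-ins invented for this sketch; they are not the ultralytics detection head.

```python
import types


class ToyHead:
    """Hypothetical stand-in for a detection head (not the ultralytics class)."""

    def __init__(self):
        self.nl = 3  # number of feature-map levels
        # Stand-ins for the per-level box (cv2) and class (cv3) branches
        self.cv2 = [lambda x, i=i: f"box{i}({x})" for i in range(self.nl)]
        self.cv3 = [lambda x, i=i: f"cls{i}({x})" for i in range(self.nl)]

    def forward(self, x):
        # A real head would decode and concatenate its outputs here
        return "decoded"


def forward2(self, x):
    # Return raw branch outputs: all box branches first, then all class branches
    x_reg = [self.cv2[i](x[i]) for i in range(self.nl)]
    x_cls = [self.cv3[i](x[i]) for i in range(self.nl)]
    return x_reg + x_cls


head = ToyHead()
# Bind forward2 to this one instance; the class itself is left unchanged
head.forward = types.MethodType(forward2, head)
outputs = head.forward(["p3", "p4", "p5"])
print(outputs)
```

Because the method is bound on the instance, other instances of the class keep the original `forward`, which is why the script can patch only the last layer of the loaded model.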

Export the model:

(yolov8) llh@anhao:~/work$ wget https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8n.pt

(yolov8) llh@anhao:~/work$ python export.py

YOLOv8n summary (fused): 168 layers, 3151904 parameters, 0 gradients, 8.7 GFLOPs

PyTorch: starting from 'yolov8n.pt' with input shape (1, 3, 640, 640) BCHW and output shape(s)

((1, 64, 80, 80), (1, 64, 40, 40), (1, 64, 20, 20), (1, 80, 80, 80), (1, 80, 40, 40), (1, 80, 20, 20)) (6.2 MB)

ONNX: starting export with onnx 1.14.1 opset 11...

ONNX: export success ✅ 1.0s, saved as 'yolov8n.onnx' (12.1 MB)

Export complete (3.8s)

Results saved to /home/llh/work

Predict: yolo predict task=detect model=yolov8n.onnx imgsz=640

Validate: yolo val task=detect model=yolov8n.onnx imgsz=640 data=coco.yaml

Visualize: https://netron.app

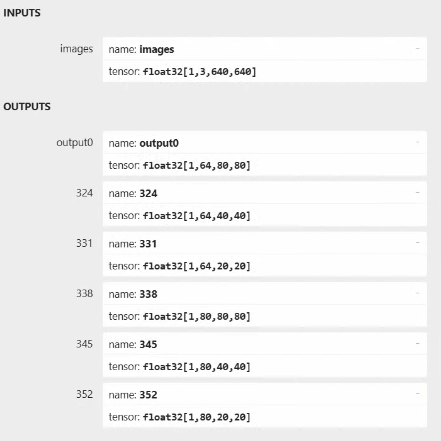

Opening the exported yolov8n.onnx in netron shows the following model outputs:

There are six outputs, in order: 1×64×80×80, 1×64×40×40, 1×64×20×20, 1×80×80×80, 1×80×40×40, and 1×80×20×20.
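These six shapes follow from the input size and the structure of the detection head. The sketch below reproduces them, assuming the usual YOLOv8 head strides of 8, 16, and 32, 16 distribution bins per box side (`reg_max`), and the 80 COCO classes of the pretrained yolov8n; these values are assumptions stated here, not read from the exported file.

```python
input_size = (640, 640)  # (height, width), as in the export script
strides = (8, 16, 32)    # assumed detection-head strides
reg_max = 16             # assumed number of distribution bins per box side
num_classes = 80         # COCO classes in the pretrained yolov8n

h, w = input_size
# Box branches carry 4 sides x reg_max bins; class branches carry one channel per class
box_shapes = [(1, 4 * reg_max, h // s, w // s) for s in strides]
cls_shapes = [(1, num_classes, h // s, w // s) for s in strides]
shapes = box_shapes + cls_shapes

for shape in shapes:
    print(shape)
```

The box branches come first and the class branches second, matching the order in which the modified forward function returns them.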

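7.2. Model conversion

7.2.1. Converting the ONNX model to MLIR

For setting up the TPU-MLIR environment, see the earlier chapter.

Create a working directory and obtain the tutorial's test model file:

# Create a yolov8 directory under the workspace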

root@cfa2f8976af9:/workspace# mkdir yolov8 && cd yolov8

root@cfa2f8976af9:/workspace/yolov8#

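Copy the previously exported yolov8n.onnx model file into the working directory and set up the dataset used for quantization:

# Clone the tpu-mlir source; skip this if it has already been cloned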

root@cfa2f8976af9:/workspace# git clone https://github.com/milkv-duo/tpu-mlir.git

root@cfa2f8976af9:/workspace# cd yolov8

root@cfa2f8976af9:/workspace/yolov8# cp -rf ../tpu-mlir/regression/dataset/COCO2017/ ./

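Use the model_transform tool (model_transform.py) to convert the model to an MLIR model:

# --model_def specifies the model definition file and --input_shapes the input shape;

# --mean and --scale specify the mean and normalization scale, and --pixel_format the input image format;

# --test_input specifies the image used for verification, and --mlir the name and path of the output MLIR model file.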

root@cfa2f8976af9:/workspace/yolov8# model_transform.py \

--model_name yolov8n \

--model_def yolov8n.onnx \

--input_shapes [[1,3,640,640]] \

--keep_aspect_ratio \

--pixel_format "rgb" \

--mean 0,0,0 \

--scale 0.0039216,0.0039216,0.0039216 \

--test_input dog.jpg \

--test_result yolov8n_top_outputs.npz \

--mlir yolov8n.mlir

# Output omitted ........................

[352_Conv ] CLOSE [PASSED]

(1, 80, 20, 20) float32

close order = 3

160 compared

160 passed

0 equal, 6 close, 154 similar

0 failed

0 not equal, 0 not similar

min_similiarity = (0.9999997615814209, 0.9999985514621537, 116.78445816040039)

Target yolov8n_top_outputs.npz

Reference yolov8n_ref_outputs.npz

npz compare PASSED.

compare 352_Conv: 100%|███████████████████████████████████████████████████████████████| 160/160 [00:06<00:00, 23.81it/s]

[Success]: npz_tool.py compare yolov8n_top_outputs.npz yolov8n_ref_outputs.npz --tolerance 0.99,0.99 --except - -vv
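The --keep_aspect_ratio, --mean, and --scale options passed to model_transform.py above describe the preprocessing the model expects: a letterbox resize to 640×640 followed by (x - mean) * scale, where 0.0039216 is approximately 1/255. The following NumPy sketch illustrates that preprocessing under stated assumptions (centered padding with value 0, nearest-neighbour resizing to avoid extra dependencies). The helper names `letterbox_params` and `preprocess` are hypothetical, and TPU-MLIR's own resizing and rounding may differ in detail.

```python
import numpy as np


def letterbox_params(src_h, src_w, dst_h=640, dst_w=640):
    """Return (scale, new_h, new_w, pad_top, pad_left) for centered letterboxing."""
    scale = min(dst_h / src_h, dst_w / src_w)
    new_h, new_w = int(round(src_h * scale)), int(round(src_w * scale))
    pad_top = (dst_h - new_h) // 2
    pad_left = (dst_w - new_w) // 2
    return scale, new_h, new_w, pad_top, pad_left


def preprocess(image_rgb, dst_h=640, dst_w=640):
    """image_rgb: HxWx3 uint8 array in RGB order. Returns a 1x3xHxW float32 array."""
    src_h, src_w = image_rgb.shape[:2]
    scale, new_h, new_w, top, left = letterbox_params(src_h, src_w, dst_h, dst_w)
    # Nearest-neighbour resize keeps this sketch dependency-free
    rows = np.minimum((np.arange(new_h) / scale).astype(int), src_h - 1)
    cols = np.minimum((np.arange(new_w) / scale).astype(int), src_w - 1)
    resized = image_rgb[rows][:, cols]
    canvas = np.zeros((dst_h, dst_w, 3), dtype=np.uint8)  # padding value 0
    canvas[top:top + new_h, left:left + new_w] = resized
    # (x - mean) * scale with mean 0 and scale 0.0039216
    chw = canvas.transpose(2, 0, 1).astype(np.float32)
    return ((chw - 0.0) * 0.0039216)[None]


# Usage with a dummy all-white 480x640 image (hypothetical size)
dummy = np.full((480, 640, 3), 255, dtype=np.uint8)
tensor = preprocess(dummy)
print(tensor.shape, tensor.dtype)
```

For the 480×640 dummy image the scale factor is 1.0, so the image is placed unchanged with 80 rows of zero padding above and below.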

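7.2.2. Quantizing the model

Run run_calibration (run_calibration.py) to obtain the calibration table. The test model was trained on the COCO dataset, so calibration uses 100 images from COCO2017. Run the following command (the COCO calibration table from the earlier chapter can also be used):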

root@cfa2f8976af9:/workspace/tpu/yolov8# run_calibration.py yolov8n.mlir \

--dataset ../tpu-mlir/regression/dataset/COCO2017 \

--input_num 100 \

-o yolov8n_cali_table

TPU-MLIR v1.8.1-20240712

GmemAllocator use FitFirstAssign

reused mem is 3276800, all mem is 40403200

2024/07/27 10:07:41 - INFO :

load_config Preprocess args :

resize_dims : [640, 640]

keep_aspect_ratio : True

keep_ratio_mode : letterbox

pad_value : 0

pad_type : center

input_dims : [640, 640]

--------------------------

mean : [0.0, 0.0, 0.0]

scale : [0.0039216, 0.0039216, 0.0039216]

--------------------------

pixel_format : rgb

channel_format : nchw

last input data (idx=100) not valid, droped

input_num = 100, ref = 100

real input_num = 100

activation_collect_and_calc_th for op: 352_Conv: 100%|████████████████████████████████| 161/161 [01:09<00:00, 2.32it/s]

[2048] threshold: 352_Conv: 100%|████████████████████████████████████████████████████| 161/161 [00:00<00:00, 605.45it/s]

[2048] threshold: 352_Conv: 100%|█████████████████████████████████████████████████████| 161/161 [00:01<00:00, 89.82it/s]

GmemAllocator use FitFirstAssign

reused mem is 3276800, all mem is 40403200

GmemAllocator use FitFirstAssign

reused mem is 3276800, all mem is 40403200

prepare data from 100

tune op: 352_Conv: 100%|██████████████████████████████████████████████████████████████| 161/161 [01:56<00:00, 1.38it/s]

auto tune end, run time:117.84105253219604

Then use the model_deploy tool (model_deploy.py) to convert the MLIR model to an INT8-quantized cvimodel:

root@cfa2f8976af9:/workspace/tpu/yolov8# model_deploy.py \

--mlir yolov8n.mlir \

--quantize INT8 \

--calibration_table yolov8n_cali_table \

--chip cv181x \

--test_input dog.jpg \

--test_reference yolov8n_top_outputs.npz \

--compare_all \

--tolerance 0.94,0.67 \

--fuse_preprocess \

--model yolov8n_int8_fuse_preprocess.cvimodel

# Output omitted ..........................

[352_Conv ] EQUAL [PASSED]

(1, 80, 20, 20) float32

[352_Conv_f32 ] EQUAL [PASSED]

(1, 80, 20, 20) float32

16 compared

16 passed

16 equal, 0 close, 0 similar

0 failed

0 not equal, 0 not similar

min_similiarity = (1.0, 1.0, inf)

Target yolov8n_cv181x_int8_sym_model_outputs.npz

Reference yolov8n_cv181x_int8_sym_tpu_outputs.npz

npz compare PASSED.

compare 352_Conv_f32: 100%|█████████████████████████████████████████████████████████████| 16/16 [00:00<00:00, 25.93it/s]

[Success]: npz_tool.py compare yolov8n_cv181x_int8_sym_model_outputs.npz yolov8n_cv181x_int8_sym_tpu_outputs.npz --tolerance 0.99,0.90 --except - -vv

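# After it finishes, the model file yolov8n_int8_fuse_preprocess.cvimodel is generated in the current directory; copy it to the board

The fuse_preprocess option merges preprocessing into the model. Without it, the application must perform the corresponding steps itself (such as quantizing the input) during preprocessing before inference.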
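To give a feel for what quantizing a tensor with a calibration threshold involves, here is a minimal sketch of symmetric INT8 quantization. It assumes the common scheme in which a per-tensor threshold maps to the value 127; the threshold 2.54 and the function names are hypothetical, and the exact rounding and clipping used by TPU-MLIR may differ.

```python
import numpy as np


def quantize_int8(x, threshold):
    """Symmetric quantization: values at +/- threshold map to +/- 127."""
    scale = threshold / 127.0
    q = np.clip(np.round(x / scale), -128, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the INT8 codes."""
    return q.astype(np.float32) * scale


# Hypothetical activations and threshold; values beyond the threshold saturate
x = np.array([-3.0, -1.0, 0.0, 0.5, 2.54, 10.0], dtype=np.float32)
q, scale = quantize_int8(x, threshold=2.54)
print(q)
print(dequantize(q, scale))
```

Values outside the threshold are clipped, which is why the calibration step tries to pick a threshold that covers the useful range of each tensor without wasting resolution on outliers.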

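7.3. Deployment test

7.3.1. Deployment inference and post-processing

Deployment inference for YOLOv8 is similar to YOLOv5. The main difference is that the bounding box, which Distribution Focal Loss represents as a discrete distribution to be integrated, must first be decoded into a conventional 4-value bbox. The remaining inference steps are almost identical.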
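The decoding step can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the example program's actual code: it assumes 16 bins per side (`reg_max`), a channel layout of 4 sides × 16 bins, anchor points at grid-cell centres, and distances ordered left, top, right, bottom. The function name `dfl_decode` is hypothetical.

```python
import numpy as np


def dfl_decode(box_logits, stride, reg_max=16):
    """Decode one level's raw box branch of shape (4*reg_max, H, W).

    Returns an (H*W, 4) array of x1, y1, x2, y2 in input-image pixels.
    """
    _, h, w = box_logits.shape
    logits = box_logits.reshape(4, reg_max, h, w)
    # Softmax over the reg_max bins of each side
    logits = logits - logits.max(axis=1, keepdims=True)
    prob = np.exp(logits)
    prob /= prob.sum(axis=1, keepdims=True)
    # Expected bin index = distance in grid units to left, top, right, bottom
    bins = np.arange(reg_max, dtype=np.float32).reshape(1, reg_max, 1, 1)
    dist = (prob * bins).sum(axis=1)  # shape (4, H, W)
    # Anchor points sit at the centre of each grid cell
    cx = np.arange(w, dtype=np.float32) + 0.5
    cy = np.arange(h, dtype=np.float32) + 0.5
    gx, gy = np.meshgrid(cx, cy)  # each of shape (H, W)
    x1 = (gx - dist[0]) * stride
    y1 = (gy - dist[1]) * stride
    x2 = (gx + dist[2]) * stride
    y2 = (gy + dist[3]) * stride
    return np.stack([x1, y1, x2, y2], axis=-1).reshape(-1, 4)


# Usage with a dummy stride-32 box branch (all-zero logits)
level = np.zeros((64, 20, 20), dtype=np.float32)
boxes = dfl_decode(level, stride=32)
print(boxes.shape)  # (400, 4)
```

With all-zero logits every bin is equally likely, so each distance is the mean bin index 7.5. Real outputs concentrate the distribution around the true distance.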

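7.3.2. Building and deploying the example

Run the commands below to set up the cross compiler (the tutorial's test board runs a riscv64 system by default). Choose the cross compiler that matches the board's system: aarch64-linux-gnu for arm64, or riscv64-linux-musl-x86_64 for riscv64.

# Download the cross compiler (skip this if you already obtained it in an earlier test). The tutorial tests a riscv64 system; add the cross compiler to PATH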

wget https://sophon-file.sophon.cn/sophon-prod-s3/drive/23/03/07/16/host-tools.tar.gz

cd /workspace/tpu-mlir

tar xvf host-tools.tar.gz

cd host-tools

export PATH=$PATH:$(pwd)/gcc/riscv64-linux-musl-x86_64/bin

# For an aarch64 system, add this cross compiler to PATH instead

#export PATH=$PATH:$(pwd)/gcc/gcc-linaro-6.3.1-2017.05-x86_64_aarch64-linux-gnu/bin

Build the example in the virtual machine with the cross compiler:

# Get the companion example code

git clone https://gitee.com/LubanCat/lubancat_sg2000_application_code.git

# Switch to the yolov8 object detection directory

cd lubancat_sg2000_application_code/examples/yolov8

# Build the yolov8 example. Set the -a option according to the board's system: aarch64 for arm64, musl_riscv64 for riscv64

./build.sh -a musl_riscv64

-- CMAKE_C_COMPILER: riscv64-unknown-linux-musl-gcc

-- CMAKE_CXX_COMPILER: riscv64-unknown-linux-musl-g++

-- CMAKE_C_COMPILER: riscv64-unknown-linux-musl-gcc

-- CMAKE_CXX_COMPILER: riscv64-unknown-linux-musl-g++

-- The C compiler identification is GNU 10.2.0

-- The CXX compiler identification is GNU 10.2.0

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /home/dev/host-tools/gcc/riscv64-linux-musl-x86_64/bin/riscv64-unknown-linux-musl-gcc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /home/dev/host-tools/gcc/riscv64-linux-musl-x86_64/bin/riscv64-unknown-linux-musl-g++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Configuring done (3.5s)

-- Generating done (0.0s)

-- Build files have been written to: /home/dev/xxx/examples/yolov8/build/build_riscv_musl

[2/3] Install the project...

-- Install configuration: "RELEASE"

# Output omitted ................................

Run the program on the board to test inference:

# On a riscv system, set this environment variable first

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/lib64v0p7_xthead/lp64d

# Run the program with the cvimodel path, the test image path, and the output image path

# ./yolov8 yolov8n_int8_fuse_preprocess.cvimodel cat.jpg out.jpg

root@lubancat:/home/cat# ./yolov8 ./yolov8n_int8_fuse_preprocess.cvimodel dog.jpg out_8.jpg

version: 1.4.0

yolov8n Build at 2024-07-27 10:14:10 For platform cv181x

Max SharedMem size:2278400

CVI_NN_RegisterModel succeeded

Input Tensor Number : 1

[0] images_raw, shape (1,3,640,640), count 1228800, fmt 7

Output Tensor Number : 6

[0] output0_Conv_f32, shape (1,64,80,80), count 409600, fmt 0

[1] 324_Conv_f32, shape (1,64,40,40), count 102400, fmt 0

[2] 331_Conv_f32, shape (1,64,20,20), count 25600, fmt 0

[3] 338_Conv_f32, shape (1,80,80,80), count 512000, fmt 0

[4] 345_Conv_f32, shape (1,80,40,40), count 128000, fmt 0

[5] 352_Conv_f32, shape (1,80,20,20), count 32000, fmt 0

CVI_NN_Forward using 49.419000 ms

CVI_NN_Forward Succeed...

-------------------

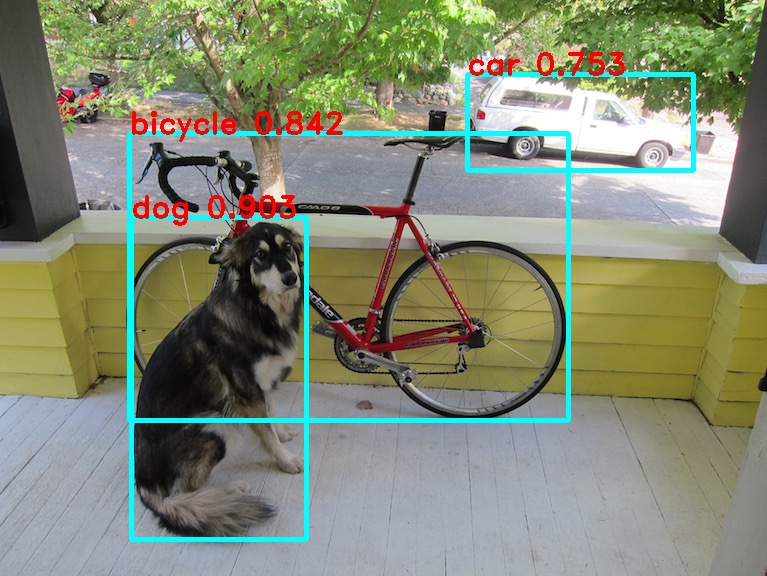

3 objects are detected

dog @ (131 217 306 539) 0.903

bicycle @ (129 133 568 420) 0.842

car @ (467 74 693 170) 0.753

-------------------

CVI_NN_CleanupModel succeeded

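The program prints the model's input and output tensor information and the detection results. The result image is saved to the output path given on the command line (out_8.jpg in the run above).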
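The detections in the log are presumably what remains after score filtering and non-maximum suppression (NMS), the post-processing steps shared with YOLOv5. The following is a minimal NumPy sketch of class-agnostic NMS, offered as an illustration of the general technique rather than the example program's implementation. The dog and car boxes are taken from the log above; the near-duplicate box, its score, and the 0.5 IoU threshold are hypothetical.

```python
import numpy as np


def nms(boxes, scores, iou_threshold=0.5):
    """boxes: (N, 4) as x1, y1, x2, y2; scores: (N,). Returns kept indices."""
    order = np.argsort(-scores)  # highest score first
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Intersection of the best box with every remaining box
        xx1 = np.maximum(boxes[i, 0], boxes[rest, 0])
        yy1 = np.maximum(boxes[i, 1], boxes[rest, 1])
        xx2 = np.minimum(boxes[i, 2], boxes[rest, 2])
        yy2 = np.minimum(boxes[i, 3], boxes[rest, 3])
        inter = np.clip(xx2 - xx1, 0, None) * np.clip(yy2 - yy1, 0, None)
        iou = inter / (areas[i] + areas[rest] - inter)
        # Drop boxes that overlap the kept box too much
        order = rest[iou <= iou_threshold]
    return keep


boxes = np.array([
    [131, 217, 306, 539],  # dog box from the log above
    [135, 220, 310, 540],  # hypothetical near-duplicate of the dog box
    [467, 74, 693, 170],   # car box from the log above
], dtype=np.float32)
scores = np.array([0.903, 0.60, 0.753], dtype=np.float32)
kept = nms(boxes, scores)
print(kept)
```

The near-duplicate overlaps the dog box heavily and is suppressed, while the car box does not overlap it and is kept.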